Arvind V. Mahankali

Carnegie Mellon University

Low Rank Approximation for General Tensor Networks

Jul 15, 2022

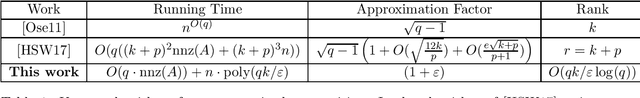

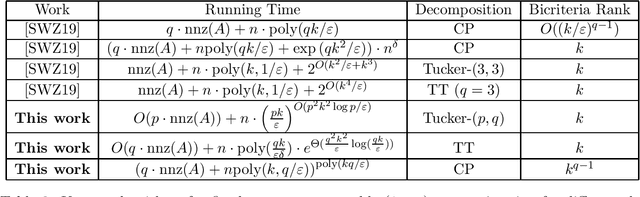

Abstract: We study the problem of approximating a given tensor with $q$ modes $A \in \mathbb{R}^{n \times \ldots \times n}$ with an arbitrary tensor network of rank $k$ -- that is, a graph $G = (V, E)$, where $|V| = q$, together with a collection of tensors $\{U_v \mid v \in V\}$ which are contracted in the manner specified by $G$ to obtain a tensor $T$. For each mode of $U_v$ corresponding to an edge incident to $v$, the dimension is $k$, and we wish to find $U_v$ such that the Frobenius norm distance between $T$ and $A$ is minimized. This generalizes a number of well-known tensor network decompositions, such as the Tensor Train, Tensor Ring, Tucker, and PEPS decompositions. We approximate $A$ by a binary tree network $T'$ with $O(q)$ cores, such that the dimension on each edge of this network is at most $\widetilde{O}(k^{O(dt)} \cdot q/\varepsilon)$, where $d$ is the maximum degree of $G$ and $t$ is its treewidth, such that $\|A - T'\|_F^2 \leq (1 + \varepsilon) \|A - T\|_F^2$. The running time of our algorithm is $O(q \cdot \text{nnz}(A)) + n \cdot \text{poly}(k^{dt}q/\varepsilon)$, where $\text{nnz}(A)$ is the number of nonzero entries of $A$. Our algorithm is based on a new dimensionality reduction technique for tensor decomposition which may be of independent interest. We also develop fixed-parameter tractable $(1 + \varepsilon)$-approximation algorithms for Tensor Train and Tucker decompositions, improving the running time of Song, Woodruff and Zhong (SODA, 2019) and avoiding the use of generic polynomial system solvers. We show that our algorithms have a nearly optimal dependence on $1/\varepsilon$ assuming that there is no $O(1)$-approximation algorithm for the $2 \to 4$ norm with better running time than brute force. Finally, we give additional results for Tucker decomposition with robust loss functions, and fixed-parameter tractable CP decomposition.
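
To make the objects in this abstract concrete, the following is a minimal NumPy sketch of one special case, the Tensor Train, where the graph $G$ is a path. It contracts the cores $U_v$ into the full tensor $T$ and evaluates the squared Frobenius objective $\|A - T\|_F^2$. It is not the paper's algorithm: the helper names `random_tt` and `tt_contract`, the core layout `(r_left, n, r_right)`, and the noisy target `A` are all assumptions made for illustration.

```python
import numpy as np

def random_tt(q, n, k, rng):
    """Hypothetical helper: q random Tensor Train cores with internal rank k.

    Each core has shape (r_left, n, r_right); the two boundary ranks are 1.
    """
    ranks = [1] + [k] * (q - 1) + [1]
    return [rng.standard_normal((ranks[i], n, ranks[i + 1])) for i in range(q)]

def tt_contract(cores):
    """Contract the cores along their shared rank edges into the full tensor T."""
    result = cores[0]
    for core in cores[1:]:
        # Sum over the edge shared by the running result and the next core.
        result = np.tensordot(result, core, axes=([-1], [0]))
    # Drop the two boundary dimensions of size 1.
    return result.reshape(result.shape[1:-1])

rng = np.random.default_rng(0)
q, n, k = 4, 5, 2          # modes, mode dimension, edge dimension (rank)
cores = random_tt(q, n, k, rng)
T = tt_contract(cores)     # shape (n, n, n, n)

# Illustrative target: the network tensor plus small noise (an assumption).
A = T + 0.01 * rng.standard_normal(T.shape)
sq_frob_error = float(np.sum((A - T) ** 2))
```

Materializing $T$ costs $n^q$ memory, so this only works for tiny $q$ and $n$; the running time quoted in the abstract depends on $\text{nnz}(A)$ rather than on forming dense tensors.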

Optimal $\ell_1$ Column Subset Selection and a Fast PTAS for Low Rank Approximation

Jul 20, 2020

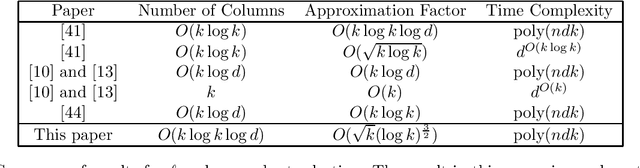

Abstract: We study the problem of entrywise $\ell_1$ low rank approximation. We give the first polynomial time column subset selection-based $\ell_1$ low rank approximation algorithm sampling $\tilde{O}(k)$ columns and achieving an $\tilde{O}(k^{1/2})$-approximation for any $k$, improving upon the previous best $\tilde{O}(k)$-approximation and matching a prior lower bound for column subset selection-based $\ell_1$-low rank approximation which holds for any $\text{poly}(k)$ number of columns. We extend our results to obtain tight upper and lower bounds for column subset selection-based $\ell_p$ low rank approximation for any $1 < p < 2$, closing a long line of work on this problem. We next give a $(1 + \varepsilon)$-approximation algorithm for entrywise $\ell_p$ low rank approximation, for $1 \leq p < 2$, that is not a column subset selection algorithm. First, we obtain an algorithm which, given a matrix $A \in \mathbb{R}^{n \times d}$, returns a rank-$k$ matrix $\hat{A}$ in $2^{\text{poly}(k/\varepsilon)} + \text{poly}(nd)$ running time such that: $$\|A - \hat{A}\|_p \leq (1 + \varepsilon) \cdot OPT + \frac{\varepsilon}{\text{poly}(k)}\|A\|_p$$ where $OPT = \min_{A_k \text{ rank }k} \|A - A_k\|_p$. Using this algorithm, in the same running time we give an algorithm which obtains error at most $(1 + \varepsilon) \cdot OPT$ and outputs a matrix of rank at most $3k$ --- these algorithms significantly improve upon all previous $(1 + \varepsilon)$- and $O(1)$-approximation algorithms for the $\ell_p$ low rank approximation problem, which required at least $n^{\text{poly}(k/\varepsilon)}$ or $n^{\text{poly}(k)}$ running time, and either required strong bit complexity assumptions (our algorithms do not) or had bicriteria rank $3k$. Finally, we show hardness results which nearly match our $2^{\text{poly}(k)} + \text{poly}(nd)$ running time and the above additive error guarantee.
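
As a rough illustration of the quantities in this abstract, the sketch below computes the entrywise $\ell_p$ error $\|A - \hat{A}\|_p$ for two simple rank-$k$ baselines: a truncated SVD, and a column subset fit that picks $k$ columns uniformly at random. Neither is the paper's algorithm. In particular, the column subset fit uses ordinary least squares in place of $\ell_p$ regression, and the function names, the random column choice, and the synthetic outlier-corrupted matrix are assumptions made for illustration.

```python
import numpy as np

def entrywise_lp_norm(M, p):
    """Entrywise l_p norm: (sum_ij |M_ij|^p)^(1/p)."""
    return float(np.sum(np.abs(M) ** p) ** (1.0 / p))

def svd_rank_k(A, k):
    """Truncated SVD: optimal for p = 2, generally not for p = 1."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return (U[:, :k] * s[:k]) @ Vt[:k]

def column_subset_fit(A, cols):
    """Approximate A within the span of the chosen columns.

    Assumption: least squares is used as a stand-in for l_p regression.
    """
    C = A[:, cols]
    X, *_ = np.linalg.lstsq(C, A, rcond=None)
    return C @ X

rng = np.random.default_rng(0)
n, d, k = 30, 20, 3
# Synthetic input: a rank-k matrix corrupted by a few large outliers.
A = rng.standard_normal((n, k)) @ rng.standard_normal((k, d))
A[rng.integers(n, size=5), rng.integers(d, size=5)] += 50.0

A_svd = svd_rank_k(A, k)
cols = rng.choice(d, size=k, replace=False)  # uniform choice, for illustration
A_css = column_subset_fit(A, cols)

err_svd = entrywise_lp_norm(A - A_svd, 1)
err_css = entrywise_lp_norm(A - A_css, 1)
```

Both outputs have rank at most $k$, so either error is an upper bound on $OPT$ for $p = 1$; neither baseline carries the approximation guarantees stated in the abstract.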