Arturo Fredes

Using LLMs for Explaining Sets of Counterfactual Examples to Final Users

Aug 27, 2024

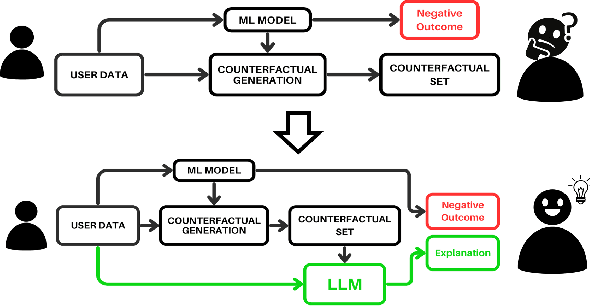

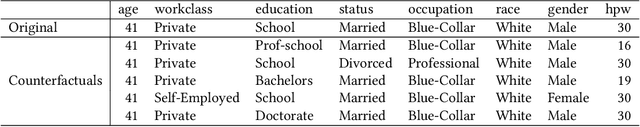

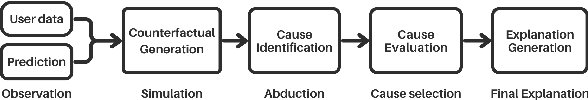

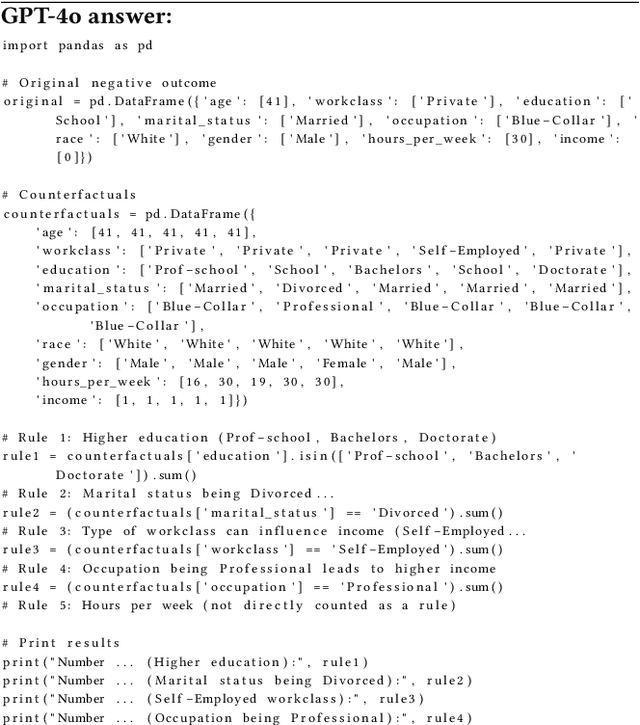

Abstract: Causality is vital for understanding true cause-and-effect relationships between variables within predictive models, rather than relying on mere correlations, making it highly relevant in the field of Explainable AI. In an automated decision-making scenario, causal inference methods can analyze the underlying data-generation process, enabling explanations of a model's decision by manipulating features and creating counterfactual examples. These counterfactuals explore hypothetical scenarios where a minimal number of factors are altered, providing end-users with valuable information on how to change their situation. However, interpreting a set of multiple counterfactuals can be challenging for end-users who are not used to analyzing raw data records. In our work, we propose a novel multi-step pipeline that uses counterfactuals to generate natural language explanations of actions that will lead to a change in outcome in classifiers of tabular data using LLMs. This pipeline is designed to guide the LLM through smaller tasks that mimic human reasoning when explaining a decision based on counterfactual cases. We conducted various experiments using a public dataset and proposed a method of closed-loop evaluation to assess the coherence of the final explanation with the counterfactuals, as well as the quality of the content. Results are promising, although further experiments with other datasets and human evaluations should be carried out.
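To make concrete why a set of counterfactuals is hard to read as raw records, the following minimal sketch reduces each counterfactual to the features it changes and counts how often each feature is altered across the set. This is not the authors' pipeline; the record, the feature names, and the helpers `feature_changes` and `summarize_counterfactuals` are hypothetical and chosen only for illustration. A condensed summary like this is one plausible kind of intermediate input that could be handed to an LLM before asking for a natural language explanation.

```python
from collections import Counter


def feature_changes(original, counterfactual):
    """Return {feature: (old, new)} for every feature whose value differs."""
    return {
        name: (original[name], counterfactual[name])
        for name in original
        if counterfactual[name] != original[name]
    }


def summarize_counterfactuals(original, counterfactuals):
    """Count how often each feature is changed across the counterfactual set."""
    counts = Counter()
    for cf in counterfactuals:
        counts.update(feature_changes(original, cf).keys())
    return counts.most_common()


# Hypothetical record and counterfactuals (illustrative values only).
original = {"age": 30, "education": "HS-grad", "hours_per_week": 35}
counterfactuals = [
    {"age": 30, "education": "Bachelors", "hours_per_week": 35},
    {"age": 30, "education": "Bachelors", "hours_per_week": 45},
    {"age": 30, "education": "HS-grad", "hours_per_week": 50},
]

summary = summarize_counterfactuals(original, counterfactuals)
for feature, count in summary:
    print(f"{feature}: changed in {count} of {len(counterfactuals)} counterfactuals")
```

Counting changed features is a deliberately simple design choice; it ignores the size and direction of each change, which a fuller treatment would also need to report.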
