Arpit Sangotra

Learning to Navigate Autonomously in Outdoor Environments: MAVNet

Sep 02, 2018

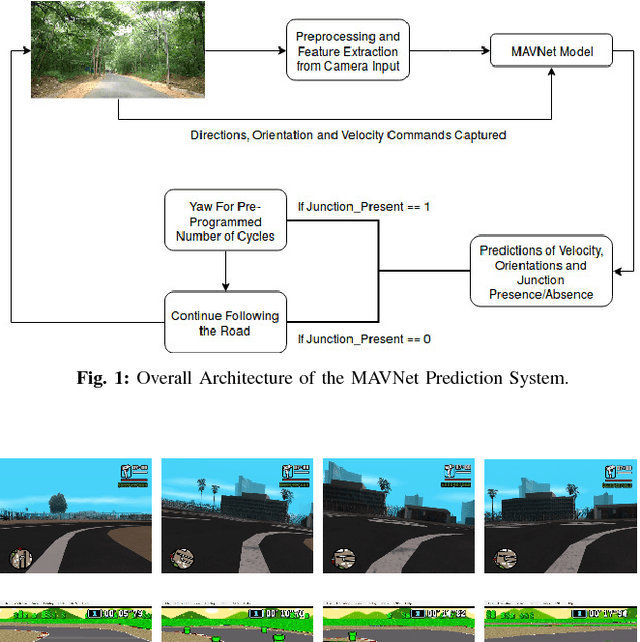

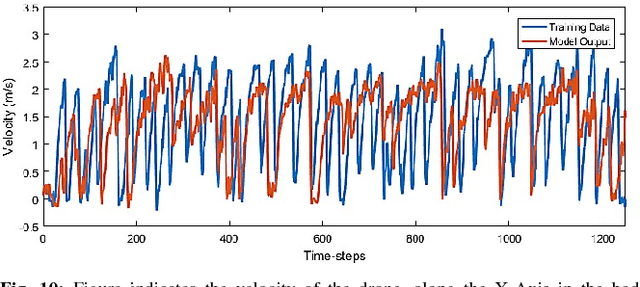

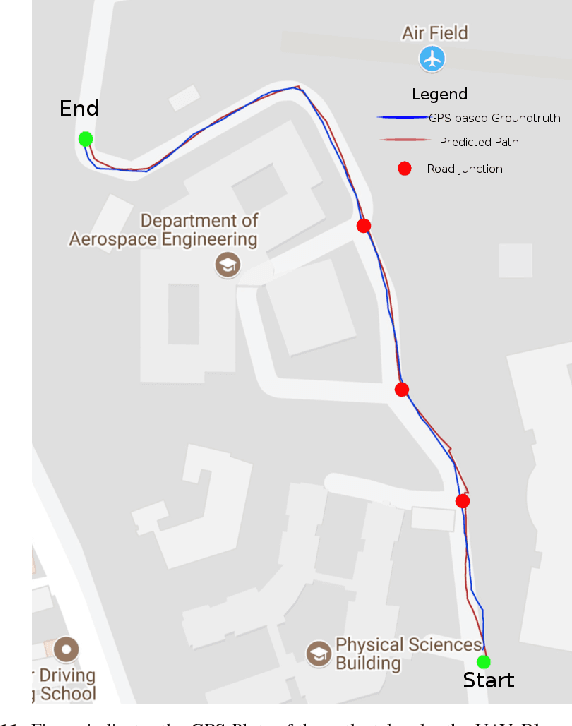

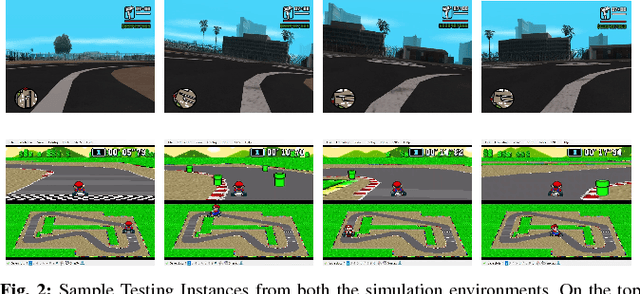

Abstract: In the modern era of automation and robotics, autonomous vehicles are a focus of academic and industrial research. With the ever-increasing number of unmanned aerial vehicles (UAVs) involved in civilian and commercial activities, there is a growing need for autonomy in these systems as well. Because government guidelines limit the operating ceiling of civil drones, road-tracking-based navigation is garnering interest. To address this, we propose an imitation-learning-based, data-driven solution to UAV autonomy for navigating city streets, in which the system learns to fly by imitating an expert pilot. Derived from classic image classification algorithms, our classifier is constructed as a fast 39-layer Inception model that evaluates the presence of roads using the tomographic reconstructions of the input frames. Based on the Inception-v3 architecture, our system performs better in terms of processing complexity and accuracy than many existing models for imitation learning. The training data was captured from the drone as it was flown in and around urban and semi-urban streets by experts with at least 6-8 years of flying experience. Permission was obtained from the required authorities, who ensured that the data collection process involved minimal risk to pedestrians. With the extensive drone data we collected, we have been able to navigate successfully along roads without crashing or overshooting, with an accuracy of 98.44%. The computational efficiency of MAVNet enables the drone to fly at speeds of up to 6 m/s. We present these results and compare them with other state-of-the-art methods for vision- and learning-based navigation.
