Arnas Uselis

Kaunas University of Technology

Does Data Scaling Lead to Visual Compositional Generalization?

Jul 09, 2025

Abstract:Compositional understanding is crucial for human intelligence, yet it remains unclear whether contemporary vision models exhibit it. The dominant machine learning paradigm is built on the premise that scaling data and model sizes will improve out-of-distribution performance, including compositional generalization. We test this premise through controlled experiments that systematically vary data scale, concept diversity, and combination coverage. We find that compositional generalization is driven by data diversity, not mere data scale. Increased combinatorial coverage forces models to discover a linearly factored representational structure, where concepts decompose into additive components. We prove this structure is key to efficiency, enabling perfect generalization from few observed combinations. Evaluating pretrained models (DINO, CLIP), we find above-random yet imperfect performance, suggesting partial presence of this structure. Our work motivates stronger emphasis on constructing diverse datasets for compositional generalization, and considering the importance of representational structure that enables efficient compositional learning. Code available at https://github.com/oshapio/visual-compositional-generalization.
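
To make the idea of a linearly factored representation concrete, here is a minimal sketch. It assumes, hypothetically, that the representation of a combination (shape i, color j) is exactly the sum of one vector per shape and one vector per color. The names `u`, `v`, `represent`, and `predict_unseen` are illustrative and do not come from the paper or its repository. Under this assumption, the representation of an unseen combination follows from three observed ones.

```python
import numpy as np

rng = np.random.default_rng(0)
n_shapes, n_colors, dim = 4, 5, 8

# Hypothetical additive components: one vector per shape, one per color.
u = rng.normal(size=(n_shapes, dim))
v = rng.normal(size=(n_colors, dim))

def represent(i, j):
    """Representation of (shape i, color j) under the additive assumption."""
    return u[i] + v[j]

def predict_unseen(i, j, i_ref, j_ref):
    """Infer f(i, j) from three observed combinations that share a
    reference shape i_ref and a reference color j_ref."""
    return represent(i, j_ref) + represent(i_ref, j) - represent(i_ref, j_ref)

# (2, 3) is treated as unseen; (2, 0), (0, 3), and (0, 0) as observed.
predicted = predict_unseen(2, 3, i_ref=0, j_ref=0)
error = np.linalg.norm(predicted - represent(2, 3))
```

The reference terms cancel, so `error` is zero up to floating-point rounding. Real model representations are only approximately additive, which is consistent with the above-random yet imperfect performance reported for pretrained models.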

Diffusion Classifiers Understand Compositionality, but Conditions Apply

May 23, 2025

Abstract:Understanding visual scenes is fundamental to human intelligence. While discriminative models have significantly advanced computer vision, they often struggle with compositional understanding. In contrast, recent generative text-to-image diffusion models excel at synthesizing complex scenes, suggesting inherent compositional capabilities. Building on this, zero-shot diffusion classifiers have been proposed to repurpose diffusion models for discriminative tasks. While prior work offered promising results in discriminative compositional scenarios, these results remain preliminary due to a small number of benchmarks and a relatively shallow analysis of conditions under which the models succeed. To address this, we present a comprehensive study of the discriminative capabilities of diffusion classifiers on a wide range of compositional tasks. Specifically, our study covers three diffusion models (SD 1.5, 2.0, and, for the first time, 3-m) spanning 10 datasets and over 30 tasks. Further, we shed light on the role that target dataset domains play in respective performance; to isolate the domain effects, we introduce a new diagnostic benchmark Self-Bench comprised of images created by diffusion models themselves. Finally, we explore the importance of timestep weighting and uncover a relationship between domain gap and timestep sensitivity, particularly for SD3-m. To sum up, diffusion classifiers understand compositionality, but conditions apply! Code and dataset are available at https://github.com/eugene6923/Diffusion-Classifiers-Compositionality.

Intermediate Layer Classifiers for OOD generalization

Apr 07, 2025

Abstract:Deep classifiers are known to be sensitive to data distribution shifts, primarily due to their reliance on spurious correlations in training data. It has been suggested that these classifiers can still find useful features in the network's last layer that hold up under such shifts. In this work, we question the use of last-layer representations for out-of-distribution (OOD) generalisation and explore the utility of intermediate layers. To this end, we introduce Intermediate Layer Classifiers (ILCs). We discover that intermediate layer representations frequently offer substantially better generalisation than those from the penultimate layer. In many cases, zero-shot OOD generalisation using earlier-layer representations approaches the few-shot performance of retraining on penultimate layer representations. This is confirmed across multiple datasets, architectures, and types of distribution shifts. Our analysis suggests that intermediate layers are less sensitive to distribution shifts compared to the penultimate layer. These findings highlight the importance of understanding how information is distributed across network layers and its role in OOD generalisation, while also pointing to the limits of penultimate layer representation utility. Code is available at https://github.com/oshapio/intermediate-layer-generalization.

CLIP Behaves like a Bag-of-Words Model Cross-modally but not Uni-modally

Feb 05, 2025

Abstract:CLIP (Contrastive Language-Image Pretraining) has become a popular choice for various downstream tasks. However, recent studies have questioned its ability to represent compositional concepts effectively. These works suggest that CLIP often acts like a bag-of-words (BoW) model, interpreting images and text as sets of individual concepts without grasping the structural relationships. In particular, CLIP struggles to correctly bind attributes to their corresponding objects when multiple objects are present in an image or text. In this work, we investigate why CLIP exhibits this BoW-like behavior. We find that the correct attribute-object binding information is already present in individual text and image modalities. Instead, the issue lies in the cross-modal alignment, which relies on cosine similarity. To address this, we propose Linear Attribute Binding CLIP or LABCLIP. It applies a linear transformation to text embeddings before computing cosine similarity. This approach significantly improves CLIP's ability to bind attributes to correct objects, thereby enhancing its compositional understanding.
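
The scoring step described above, a linear transformation of the text embeddings followed by cosine similarity, can be sketched as follows. This is only an illustration under stated assumptions: the random arrays stand in for frozen CLIP embeddings, the function names are hypothetical, and `W` is a placeholder, since the abstract does not say how the transformation is trained.

```python
import numpy as np

def cosine_similarity(a, b):
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

def transformed_scores(image_emb, text_emb, W):
    """Apply the linear map W to each text embedding, then score every
    image against every transformed text by cosine similarity."""
    return cosine_similarity(image_emb, text_emb @ W.T)

rng = np.random.default_rng(0)
image_emb = rng.normal(size=(3, 16))  # stand-in for frozen image embeddings
text_emb = rng.normal(size=(5, 16))   # stand-in for frozen text embeddings
W = np.eye(16)                        # identity map: plain cosine similarity

scores = transformed_scores(image_emb, text_emb, W)  # shape (3, 5)
```

With `W` set to the identity the scores reduce to ordinary CLIP-style cosine similarity, so any gain in attribute binding would have to come from the learned `W` alone while both encoders stay unchanged.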

Time-Adaptive Recurrent Neural Networks

Apr 11, 2022

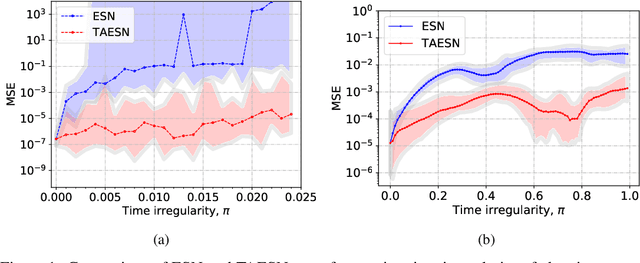

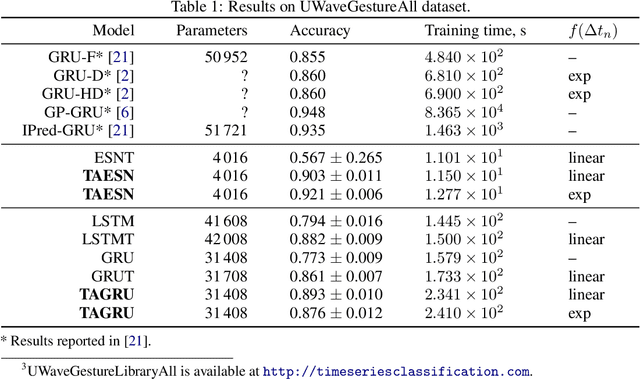

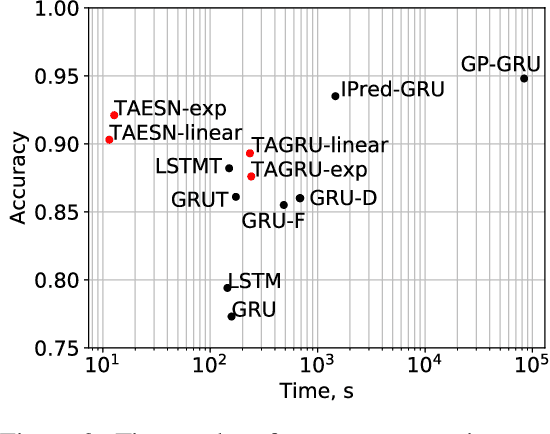

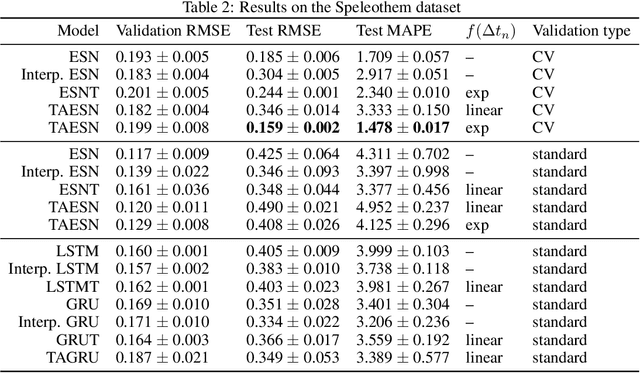

Abstract:Data are often sampled irregularly in time. Dealing with this using Recurrent Neural Networks (RNNs) traditionally involved ignoring the fact, feeding the time differences as additional inputs, or resampling the data. All these methods have their shortcomings. We propose an elegant alternative approach where instead the RNN is in effect resampled in time to match the time of the data. We use Echo State Network (ESN) and Gated Recurrent Unit (GRU) as the basis for our solution. Such RNNs can be seen as discretizations of continuous-time dynamical systems, which gives a solid theoretical ground for our approach. Similar recent observations have been made in feed-forward neural networks as neural ordinary differential equations. Our Time-Adaptive ESN (TAESN) and GRU (TAGRU) models allow for a direct model time setting and require no additional training, parameter tuning, or computation compared to the regular counterparts, thus retaining their original efficiency. We confirm empirically that our models can effectively compensate for the time-non-uniformity of the data and demonstrate that they compare favorably to data resampling, classical RNN methods, and alternative RNN models proposed to deal with time irregularities on several real-world nonuniform-time datasets.
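
The view of an RNN as a discretized continuous-time system can be illustrated with a small sketch. It assumes a common leaky-integrator reservoir, dx/dt = -x + tanh(W_in u + W x), stepped with an Euler step equal to the time gap between samples. This is not necessarily the exact TAESN update from the paper, and all names and sizes below are illustrative.

```python
import numpy as np

def esn_step(x, u, W_in, W, dt):
    """One Euler step of dx/dt = -x + tanh(W_in u + W x) with step size dt."""
    target = np.tanh(W_in @ u + W @ x)
    return (1.0 - dt) * x + dt * target

rng = np.random.default_rng(0)
n_res, n_in = 20, 1
W_in = rng.uniform(-0.5, 0.5, size=(n_res, n_in))
W = rng.normal(size=(n_res, n_res))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))  # scale spectral radius to 0.9

# Irregularly sampled inputs: each sample arrives after its own time gap.
times = np.array([0.0, 0.3, 0.4, 1.1, 1.5])
inputs = np.sin(times).reshape(-1, 1)

x = np.zeros(n_res)
states = []
for k in range(1, len(times)):
    dt = times[k] - times[k - 1]  # time gap sets the step (assumed in (0, 1])
    x = esn_step(x, inputs[k], W_in, W, dt)
    states.append(x)
states = np.array(states)  # shape (4, 20)
```

With `dt = 1` the step reduces to the standard non-leaky ESN update, so the fixed weights are reused unchanged and only the step size follows the data, which is in the spirit of the claim that no additional training is needed.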

Efficient implementations of echo state network cross-validation

Jun 19, 2020

Abstract:Background/introduction: Cross-validation is still uncommon in time series modeling. Echo State Networks (ESNs), as a prime example of Reservoir Computing (RC) models, are known for their fast and precise one-shot learning, which often benefits from good hyper-parameter tuning. This makes them ideal to change the status quo. Methods: We suggest several schemes for cross-validating ESNs and introduce an efficient algorithm for implementing them. This algorithm is presented as two levels of optimization for $k$-fold cross-validation. Training an RC model typically consists of two stages: (i) running the reservoir with the data and (ii) computing the optimal readouts. The first level of our proposed optimization addresses the most computationally expensive part (i) and makes it remain constant irrespective of $k$. It dramatically reduces reservoir computations in any type of RC system and is enough if $k$ is small. The second level of optimization also makes the (ii) part remain constant irrespective of large $k$, as long as the dimension of the output is low. We discuss when the proposed validation schemes for ESNs could be beneficial and three options for producing the final model, empirically investigate them on six different real-world datasets, and run empirical computation-time experiments. We provide the code in an online repository. Results: The proposed cross-validation schemes give better and more stable test performance on all six real-world datasets, covering three task types. Empirical run times confirm our complexity analysis. Conclusions: In most situations $k$-fold cross-validation of ESNs and many other RC models can be done for virtually the same time complexity as a simple single-split validation. Space complexity can also remain the same in all the cases. This enables cross-validation to become a standard practice in reservoir computing.
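
The first level of optimization, running the reservoir once regardless of $k$, can be illustrated with a generic sketch. It assumes a ridge-regression readout and that the reservoir states `X` have already been collected in a single run. The function name and the reuse of per-fold Gram matrices are illustrative choices, not the paper's exact algorithm, and the sketch does not claim to reproduce the second level.

```python
import numpy as np

def kfold_ridge_cv(X, Y, k, reg):
    """k-fold validation error of a ridge readout, reusing per-fold statistics.

    X: (T, n) reservoir states collected in a single run; Y: (T, m) targets.
    """
    folds = np.array_split(np.arange(len(X)), k)
    grams = [X[f].T @ X[f] for f in folds]  # per-fold X^T X
    cross = [X[f].T @ Y[f] for f in folds]  # per-fold X^T Y
    G_total, C_total = sum(grams), sum(cross)
    identity = np.eye(X.shape[1])

    errors = []
    for f, G_f, C_f in zip(folds, grams, cross):
        # Training statistics for this split: totals minus the held-out fold.
        W_out = np.linalg.solve(G_total - G_f + reg * identity, C_total - C_f)
        errors.append(np.mean((X[f] @ W_out - Y[f]) ** 2))
    return float(np.mean(errors))

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))    # stand-in for reservoir states
Y = X @ rng.normal(size=(10, 1))  # noiseless linear targets
cv_error = kfold_ridge_cv(X, Y, k=5, reg=1e-8)
```

Each time step enters the Gram matrices exactly once, so the cost of producing and accumulating states does not grow with `k`. Only the small per-fold linear solves scale with the number of folds.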

Localized convolutional neural networks for geospatial wind forecasting

May 13, 2020

Abstract:Convolutional Neural Networks (CNN) possess many positive qualities when it comes to spatial raster data. Translation invariance enables CNNs to detect features regardless of their position in the scene. But in some domains, like geospatial, not all locations are exactly equal. In this work we propose localized convolutional neural networks that enable convolutional architectures to learn local features in addition to the global ones. We investigate their instantiations in the form of learnable inputs, local weights, and a more general form. They can be added to any convolutional layers, easily end-to-end trained, introduce minimal additional complexity, and let CNNs retain most of their benefits to the extent that they are needed. In this work we address spatio-temporal prediction: test the effectiveness of our methods on a synthetic benchmark dataset and tackle three real-world wind prediction datasets. For one of them we propose a method to spatially order the unordered data. We compare against the recent state-of-the-art spatio-temporal prediction models on the same data. Models that use convolutional layers can be and are extended with our localizations. In all these cases our extensions improve the results, and thus often the state-of-the-art. We share all the code at a public repository.

Efficient Cross-Validation of Echo State Networks

Aug 22, 2019

Abstract:Echo State Networks (ESNs) are known for their fast and precise one-shot learning of time series. But they often need good hyper-parameter tuning for best performance. For this, good validation is key, but usually a single validation split is used. In this rather practical contribution we suggest several schemes for cross-validating ESNs and introduce an efficient algorithm for implementing them. The component that dominates the time complexity of the already quite fast ESN training remains constant (does not scale up with $k$) in our proposed method of doing $k$-fold cross-validation. The component that does scale linearly with $k$ starts dominating only in some not very common situations. Thus in many situations $k$-fold cross-validation of ESNs can be done for virtually the same time complexity as a simple single-split validation. Space complexity can also remain the same. We also discuss when the proposed validation schemes for ESNs could be beneficial and empirically investigate them on several different real-world datasets.
