Ariunaa Enkhtur

Potential Societal Biases of ChatGPT in Higher Education: A Scoping Review

Nov 24, 2023

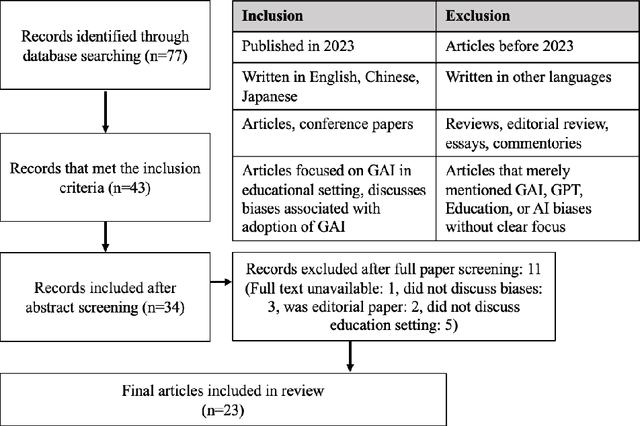

Abstract: ChatGPT and other Generative Artificial Intelligence (GAI) models tend to inherit and even amplify prevailing societal biases as they are trained on large amounts of existing data. Given the increasing usage of ChatGPT and other GAI by students, faculty members, and staff in higher education institutions (HEIs), there is an urgent need to examine the ethical issues involved, such as potential biases. In this scoping review, we clarify the ways in which biases related to GAI in higher education settings have been discussed in recent academic publications and identify what types of potential biases are commonly reported in this body of literature. We searched four main databases for academic articles, written in English, Chinese, and Japanese, concerned with GAI usage in higher education and bias. Our findings show that while there is an awareness of potential biases around large language models (LLMs) and GAI, the majority of articles touch on "bias" at a relatively superficial level. Few identify what types of bias may occur under what circumstances, nor do they discuss the possible implications for higher education, staff, faculty members, or students. There is a notable lack of empirical work at this point, and we call for higher education researchers and AI experts to conduct more research in this area.

Ethical implications of ChatGPT in higher education: A scoping review

Nov 24, 2023

Abstract: This scoping review explores the ethical challenges of using ChatGPT in education, focusing particularly on issues related to higher education. By reviewing recent academic articles written in English, Chinese, and Japanese, we aimed to provide a comprehensive overview of relevant research while identifying gaps for future consideration. Drawing on Arksey and O'Malley's (2005) five-stage scoping review framework, we identified research questions and search terms, then searched four databases for articles in the three target languages. Each article was reviewed by at least two researchers, who identified the main ethical issues of utilizing AI in education, particularly higher education. Our analysis of ethical issues followed the framework developed by DeepMind (Weidinger et al., 2021) to identify six main areas of ethical concern in Language Models. The majority of papers were concerned with misinformation harms (n=25) and/or human-computer interaction related harms (n=24). Given the rapid deployment of Generative Artificial Intelligence (GAI), it is imperative for educators to conduct more empirical studies to develop sound ethical policies for the use of GAI.
