Ariel Herrera

Hierarchical Sampling based Particle Filter for Visual-inertial Gimbal in the Wild

Jun 22, 2022

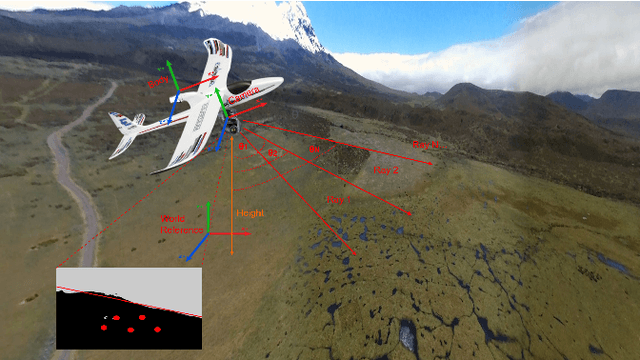

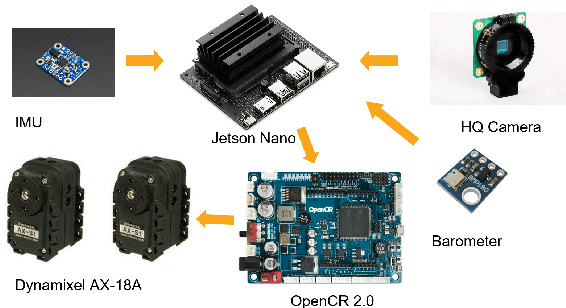

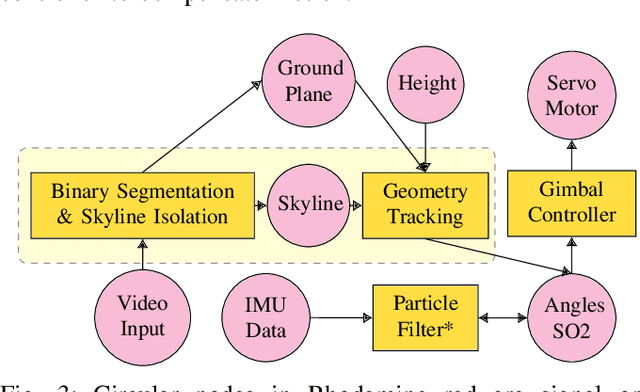

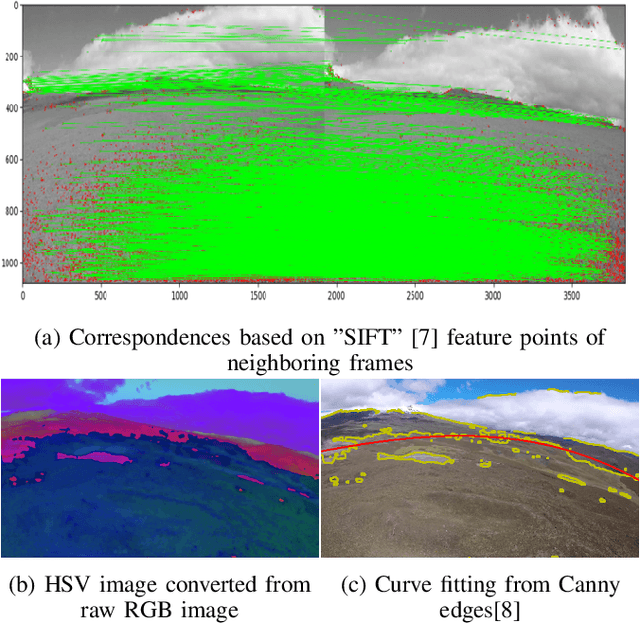

Abstract: The gimbal platform has been widely used in photogrammetry and robot perception modules to stabilize the camera pose, thereby improving captured video quality. A gimbal is typically composed of sensor and actuator parts: orientation measurements from the sensors can be fed directly to the actuators to steer the camera toward the proper pose. However, off-the-shelf products are either quite expensive or depend on a high-precision IMU and brushless DC motors with Hall sensors to estimate angles, which are prone to accumulated drift over long-term operation. In this paper, a new computer-vision (CV) based tracking and fusion algorithm dedicated to gimbal systems on drones operating in natural environments is proposed. The main contributions are as follows: a) a lightweight network with a ResNet-18 backbone was trained from scratch and deployed on the Jetson Nano platform to segment each image into two classes (ground and sky); b) the skyline and the ground plane are tracked in 3D as geometric-primitive cues which, together with the IMU orientation estimate, provide multiple orientation hypotheses; c) adaptive particle sampling on a spherical surface efficiently fuses the orientation estimates from these sources. The final prototype algorithm was tested on a real-time embedded system, both in ground-based simulation and in real functional flight tests.
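The fusion idea in contribution c) can be illustrated with a generic particle filter on the unit sphere. The sketch below is a hedged illustration under stated assumptions, not the authors' hierarchical sampler. Each orientation cue (for example an IMU gravity estimate, a ground-plane normal, or a skyline-derived up vector) is modeled as a unit vector with a hypothetical von Mises-Fisher concentration `kappa`. Particles are unit vectors, and a hypothetical coarse-to-fine schedule `levels` shrinks the resampling jitter at each stage. Because all three cues describe the vertical direction, the sketch recovers only the up direction (roll and pitch), not yaw. The names `fuse_up_vector`, `compute_weights`, `kappa`, and `levels` are illustrative.

```python
import math
import random


def normalize(v):
    """Project a 3-vector back onto the unit sphere."""
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def random_unit(rng):
    # A normalized isotropic Gaussian sample is uniform on the sphere.
    return normalize((rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)))


def jitter(v, sigma, rng):
    # Perturb a particle with Gaussian noise, then re-project onto the sphere.
    return normalize((v[0] + rng.gauss(0, sigma),
                      v[1] + rng.gauss(0, sigma),
                      v[2] + rng.gauss(0, sigma)))


def compute_weights(particles, measurements):
    # Assumed likelihood: product of von Mises-Fisher factors exp(kappa * <p, z>).
    logw = [sum(k * dot(p, z) for z, k in measurements) for p in particles]
    m = max(logw)  # subtract the max before exp() for numerical stability
    w = [math.exp(l - m) for l in logw]
    s = sum(w)
    return [x / s for x in w]


def systematic_resample(particles, weights, rng):
    n = len(particles)
    u0 = rng.random() / n
    out, cum, i = [], weights[0], 0
    for k in range(n):
        u = u0 + k / n
        while u > cum and i < n - 1:
            i += 1
            cum += weights[i]
        out.append(particles[i])
    return out


def fuse_up_vector(measurements, n_particles=500, levels=(0.3, 0.1, 0.03), seed=0):
    """Fuse (unit_vector, kappa) cues into one up-direction estimate.

    Hypothetical coarse-to-fine scheme: particles start uniform on the sphere,
    and each level reweights, resamples, and jitters with a smaller sigma.
    """
    rng = random.Random(seed)
    particles = [random_unit(rng) for _ in range(n_particles)]
    for sigma in levels:
        weights = compute_weights(particles, measurements)
        particles = systematic_resample(particles, weights, rng)
        particles = [jitter(p, sigma, rng) for p in particles]
    weights = compute_weights(particles, measurements)
    # Weighted mean of the particles, re-projected onto the sphere.
    mean = [sum(w * p[j] for w, p in zip(weights, particles)) for j in range(3)]
    return normalize(mean)


if __name__ == "__main__":
    cues = [
        ((0.0, 0.0, 1.0), 50.0),               # IMU gravity cue (assumed kappa)
        (normalize((0.05, 0.0, 1.0)), 30.0),   # ground-plane normal cue
        (normalize((0.0, 0.05, 1.0)), 20.0),   # skyline-derived cue
    ]
    print(fuse_up_vector(cues))
```

Weighting every particle by all cues at once treats them as independent measurements. The shrinking jitter lets early levels explore the sphere coarsely and later levels refine around the dominant mode. An adaptive scheme such as the one the abstract describes would presumably choose these spreads from the data rather than from a fixed schedule.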
