Arian Azarang

A Generative Model Method for Unsupervised Multispectral Image Fusion in Remote Sensing

Feb 07, 2021

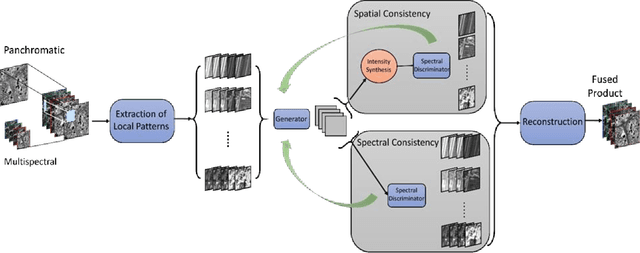

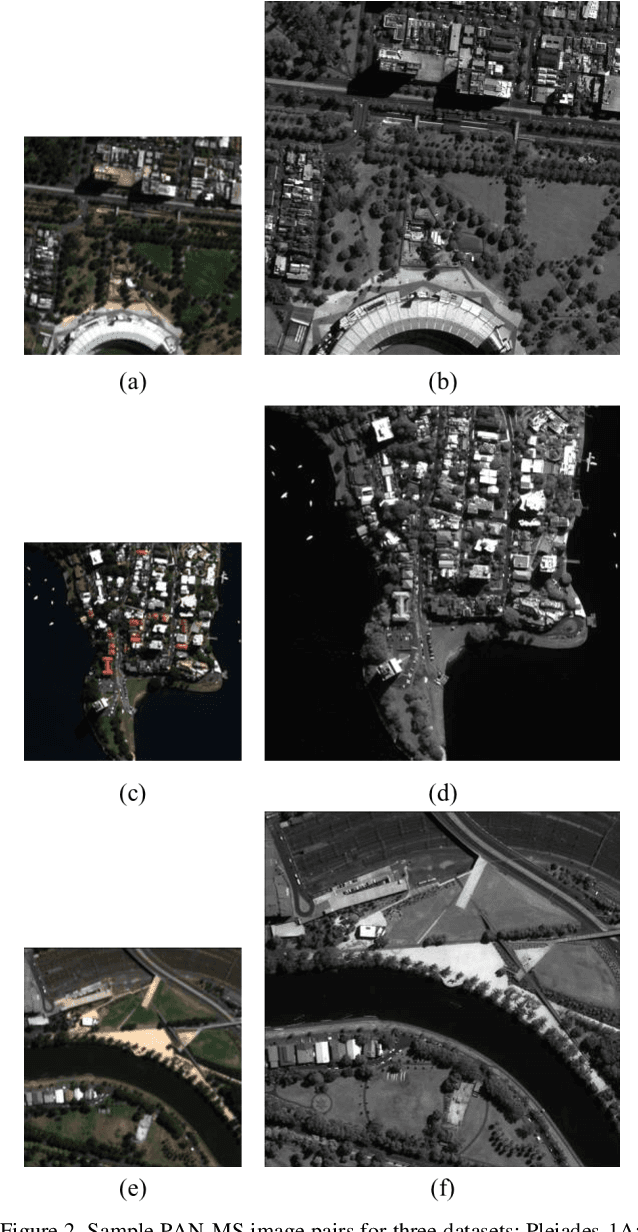

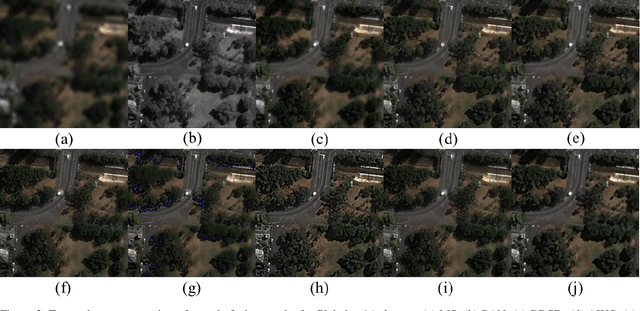

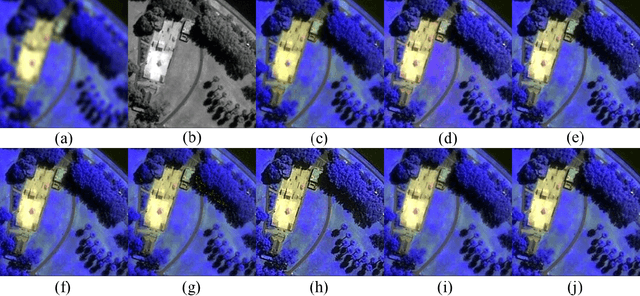

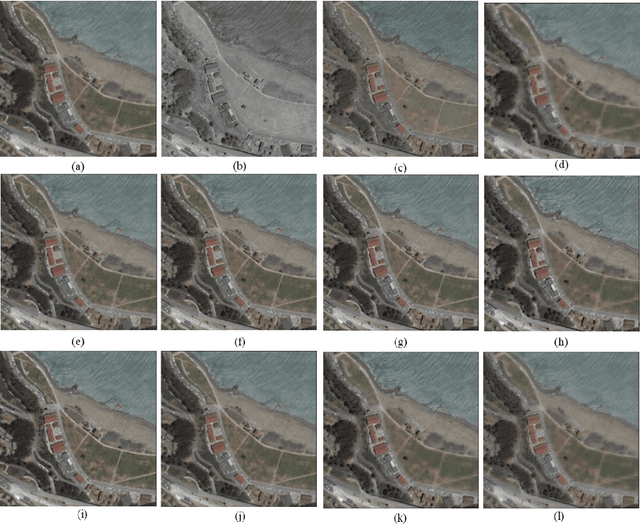

Abstract: This paper presents a generative model method for multispectral image fusion in remote sensing that is trained without supervision. The method relaxes the need for supervised training and uses a multi-objective loss function that incorporates both spectral and spatial distortions. Two discriminators are designed to minimize the spectral and spatial distortions of the generated output. Extensive experiments are conducted using three public-domain datasets. Comparisons across four reduced-resolution and three full-resolution objective metrics show that the developed method outperforms several recently developed methods.

Deep Learning-Based Detail Map Estimation for MultiSpectral Image Fusion in Remote Sensing

Feb 07, 2021

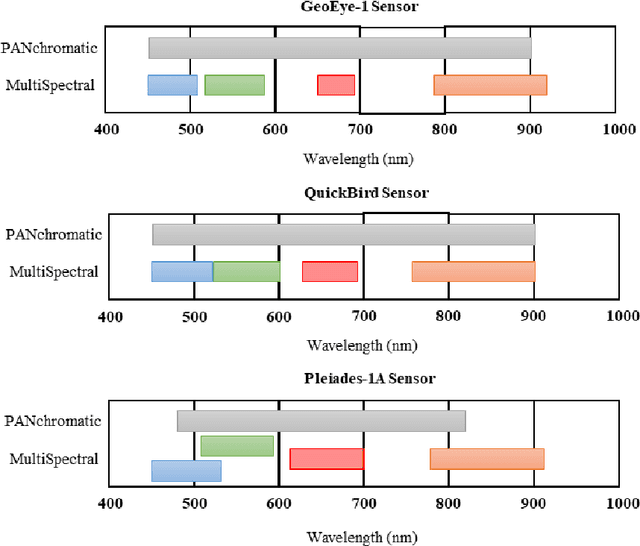

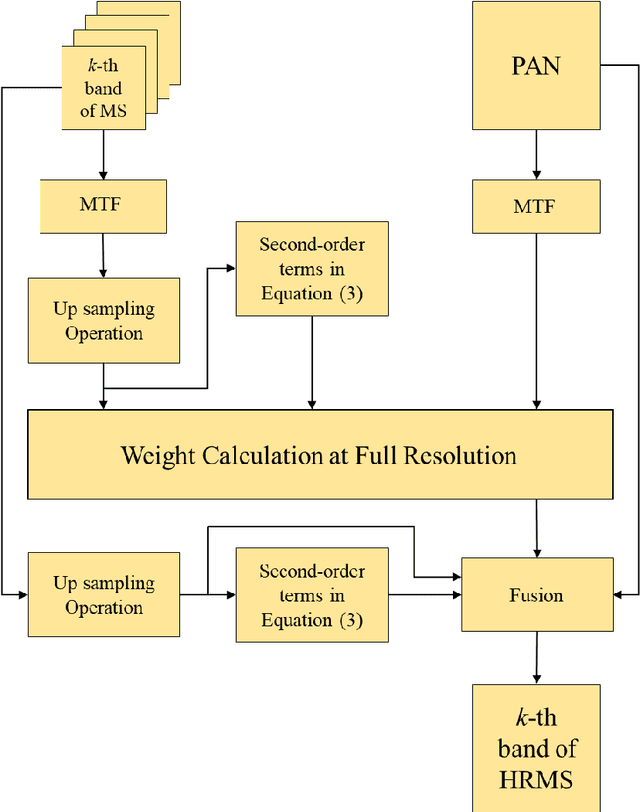

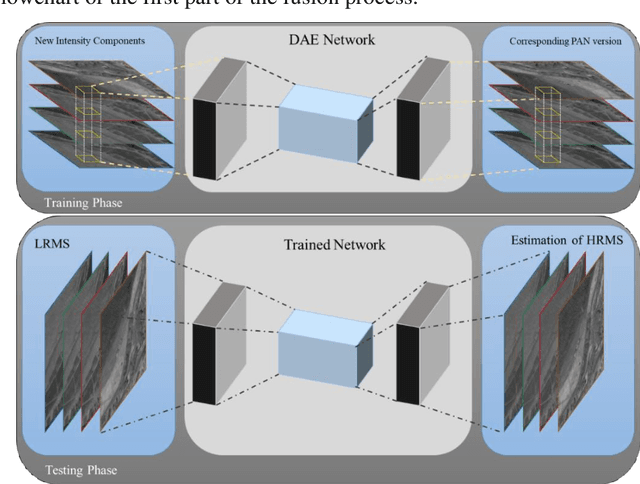

Abstract: This paper presents a deep learning-based estimation of the intensity component of MultiSpectral bands by considering joint multiplication of the neighbouring spectral bands. This estimation is conducted as part of the component substitution approach for fusion of PANchromatic and MultiSpectral images in remote sensing. After the band-dependent intensity components are computed, a deep neural network is trained to learn the nonlinear relationship between a PAN image and its nonlinear intensity components. Low Resolution MultiSpectral bands are then fed into the trained network to obtain an estimate of High Resolution MultiSpectral bands. Experiments conducted on three datasets show that the developed deep learning-based estimation approach outperforms existing methods on three objective metrics.
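
For readers unfamiliar with the component substitution approach mentioned in the abstract, here is a minimal NumPy sketch of its classical, linear form. It is not the paper's deep learning-based estimator: it assumes the intensity component is a plain weighted sum of the upsampled MultiSpectral bands and that the detail map is injected with unit gain. The function name `component_substitution` and its parameters are hypothetical and chosen only for illustration.

```python
import numpy as np


def component_substitution(ms_up, pan, weights=None):
    """Classical component-substitution fusion (illustrative sketch only).

    ms_up   : (H, W, B) MultiSpectral bands, already upsampled to the PAN grid.
    pan     : (H, W) PANchromatic image.
    weights : (B,) band weights for the intensity component.
              Assumption: equal weights when not given.
    """
    ms_up = np.asarray(ms_up, dtype=np.float64)
    pan = np.asarray(pan, dtype=np.float64)
    n_bands = ms_up.shape[-1]
    if weights is None:
        weights = np.full(n_bands, 1.0 / n_bands)
    weights = np.asarray(weights, dtype=np.float64)

    # Intensity component: weighted sum of the MS bands (linear assumption).
    intensity = ms_up @ weights

    # Detail map: spatial information present in PAN but missing in the intensity.
    detail = pan - intensity

    # Inject the same detail map into every band (unit injection gain assumed).
    return ms_up + detail[..., None]
```

With equal weights, the band average of the fused output equals the PAN image, which is a quick sanity check for this linear variant. The abstract describes replacing the linear intensity estimate with a learned, nonlinear one.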
