Aravind Ravi

Classification of Microscopy Images of Breast Tissue: Region Duplication based Self-Supervision vs. Off-the Shelf Deep Representations

Feb 12, 2022

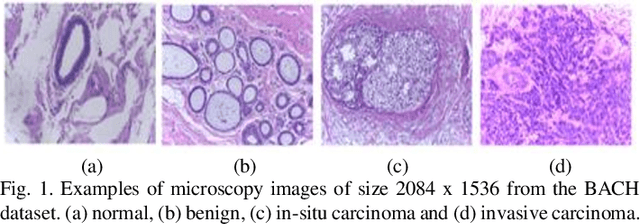

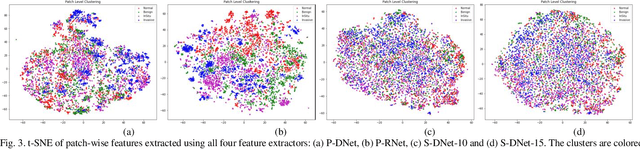

Abstract: Breast cancer is one of the leading causes of female mortality in the world. Mortality can be reduced when diagnoses are performed at the early stages of progression. Further, the efficiency of the process can be significantly improved with computer-aided diagnosis. Deep learning-based approaches have been successfully applied to achieve this. One of the limiting factors for training deep networks in a supervised manner is the dependency on large amounts of expert-annotated data. In reality, large amounts of unlabelled data and only small amounts of expert-annotated data are available. In such scenarios, transfer learning approaches and self-supervised learning (SSL) based approaches can be leveraged. In this study, we propose a novel self-supervision pretext task to train a convolutional neural network (CNN) and extract domain-specific features. This method was compared with deep features extracted using pre-trained CNNs such as DenseNet-121 and ResNet-50 trained on ImageNet. Additionally, two types of patch-combination methods were introduced and compared with majority voting. The methods were validated on the BACH microscopy images dataset. Results indicated that the best performance of 99% sensitivity was achieved for the deep features extracted using ResNet-50 with concatenation of patch-level embeddings. Preliminary results of SSL to extract domain-specific features indicated that with just 15% of unlabelled data a high sensitivity of 94% can be achieved for a four-class classification of microscopy images.
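To make the difference between majority voting and concatenation of patch-level embeddings concrete, here is a minimal sketch. It is not the authors' code: the function names, the class labels, and the tiny two-dimensional embeddings are illustrative assumptions, and in practice each embedding would come from a CNN such as ResNet-50.

```python
from collections import Counter
from typing import List, Sequence


def majority_vote(patch_labels: Sequence[str]) -> str:
    """Image-level label = most frequent patch-level prediction.

    Ties are resolved in favour of the label encountered first.
    """
    return Counter(patch_labels).most_common(1)[0][0]


def concatenate_embeddings(patch_embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Image-level feature = all patch embeddings joined in patch order.

    A single classifier is then trained on this longer vector instead of
    classifying every patch separately.
    """
    return [value for embedding in patch_embeddings for value in embedding]


# Hypothetical per-patch predictions for one image (labels are assumptions).
patch_predictions = ["benign", "invasive", "invasive", "normal"]
image_label = majority_vote(patch_predictions)

# Hypothetical 2-D embeddings for three patches of one image.
patch_features = [[0.1, 0.9], [0.4, 0.6], [0.7, 0.3]]
image_feature = concatenate_embeddings(patch_features)
```

With majority voting the classifier only ever sees one patch at a time, whereas concatenation lets a single classifier weigh all patches of an image jointly, at the cost of a feature vector that grows with the number of patches.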

Pre-Trained Convolutional Neural Network Features for Facial Expression Recognition

Dec 16, 2018

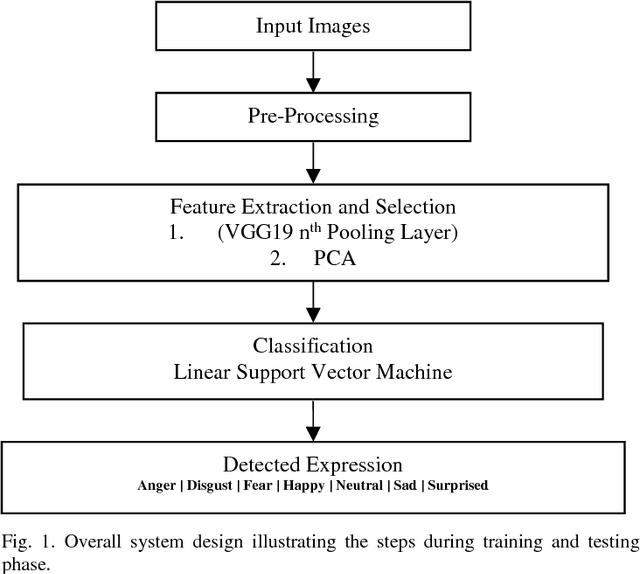

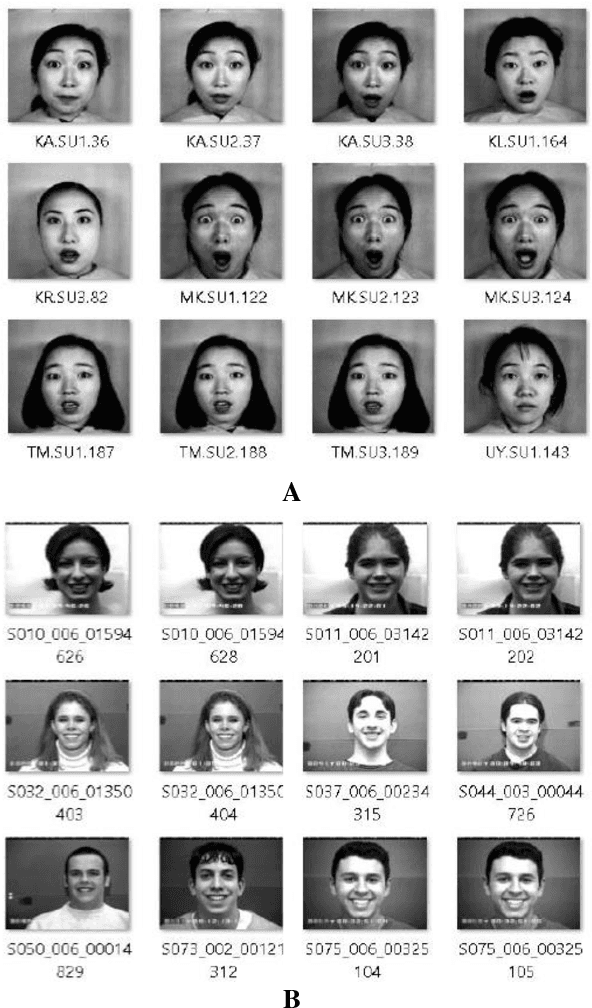

Abstract: Facial expression recognition has been an active area in computer vision with application areas including animation, social robots, personalized banking, etc. In this study, we explore the problem of image classification for detecting facial expressions based on features extracted from pre-trained convolutional neural networks trained on the ImageNet database. Features are extracted and passed to a linear Support Vector Machine for classification. All experiments are performed on two publicly available datasets, JAFFE and CK+. The results show that representations learned from pre-trained networks for a task such as object recognition can be transferred and used for facial expression recognition. Furthermore, for a small dataset, using features from earlier layers of the VGG19 network provides better classification accuracy. Accuracies of 92.26% and 92.86% were achieved for the CK+ and JAFFE datasets respectively.
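The "extract features, then classify with a linear SVM" pipeline can be sketched as follows. This is an illustrative assumption rather than the paper's implementation: the feature vectors are hand-written two-dimensional stand-ins for CNN activations, the problem is reduced to two classes, and the SVM is trained with a plain subgradient method on the regularised hinge loss instead of a library solver. All names and hyperparameters are hypothetical.

```python
from typing import List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def train_linear_svm(
    features: Sequence[Sequence[float]],
    labels: Sequence[int],      # each label is +1 or -1
    lam: float = 0.01,          # L2 regularisation strength (assumed value)
    eta: float = 0.1,           # fixed learning rate (assumed value)
    epochs: int = 50,
) -> Tuple[List[float], float]:
    """Subgradient descent on lam/2 * ||w||^2 + hinge loss."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            margin = y * (dot(w, x) + b)
            # Shrink the weights (gradient of the regulariser).
            w = [(1.0 - eta * lam) * wj for wj in w]
            # Samples inside the margin also contribute a hinge subgradient.
            if margin < 1.0:
                w = [wj + eta * y * xj for wj, xj in zip(w, x)]
                b += eta * y
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return 1 if dot(w, x) + b >= 0.0 else -1


# Toy stand-ins for features taken from a pre-trained network.
X = [[2.0, 2.0], [3.0, 3.0], [2.5, 3.0], [-2.0, -2.0], [-3.0, -3.0], [-2.5, -3.0]]
y = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(X, y)
train_predictions = [predict(w, b, x) for x in X]
```

A multi-class problem such as expression recognition would typically train one such binary classifier per class (one-vs-rest) on the same extracted features.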

A Dataset and Preliminary Results for Umpire Pose Detection Using SVM Classification of Deep Features

Sep 11, 2018

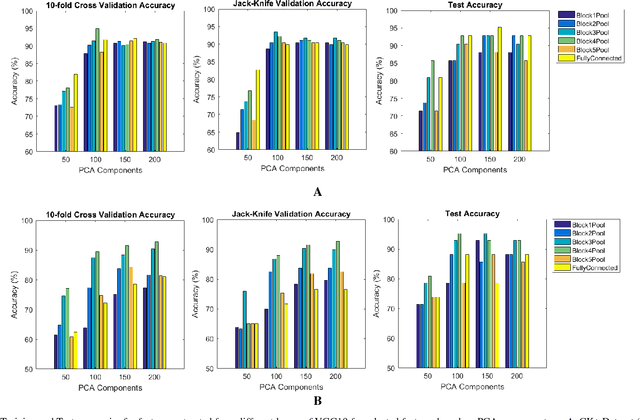

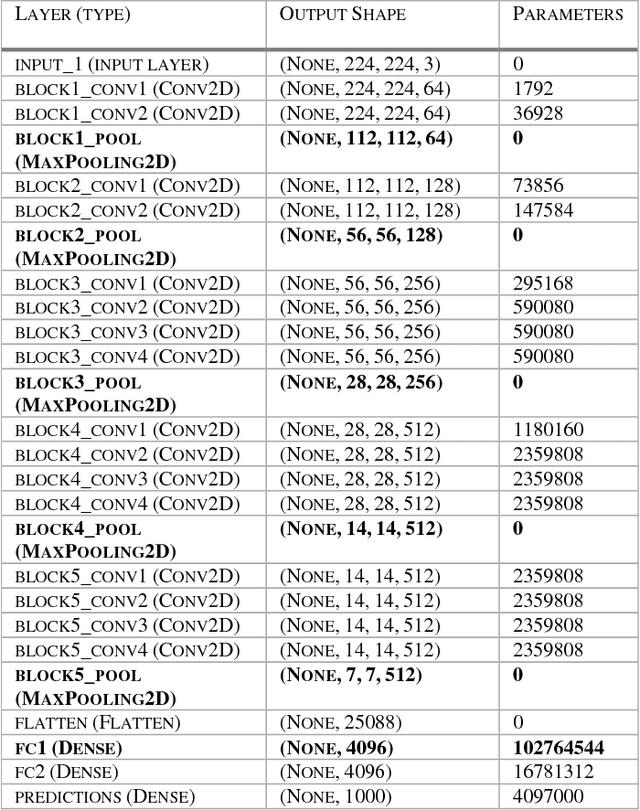

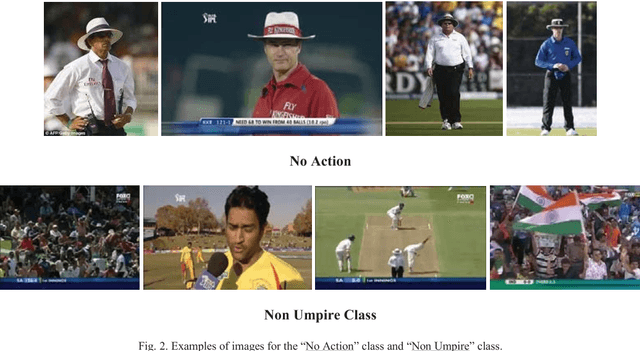

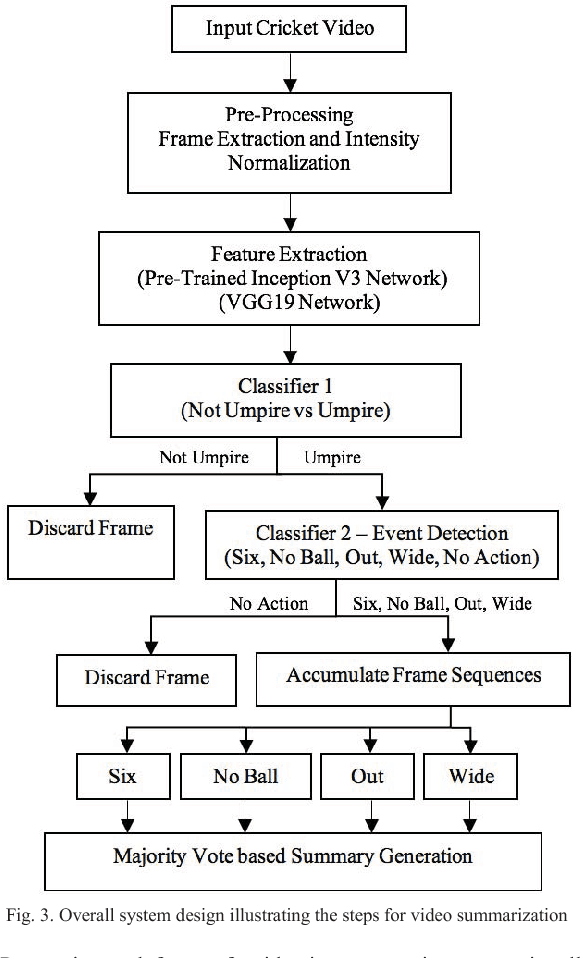

Abstract: In recent years, there has been increased interest in video summarization and automatic sports highlights generation. In this work, we introduce a new dataset, called SNOW, for umpire pose detection in the game of cricket. The proposed dataset is evaluated as a preliminary aid for developing systems to automatically generate cricket highlights. In cricket, the umpire has the authority to make important decisions about events on the field. The umpire signals important events using unique hand signals and gestures. We identify four such events for classification, namely SIX, NO BALL, OUT, and WIDE, based on detecting the pose of the umpire from the frames of a cricket video. Pre-trained convolutional neural networks such as Inception V3 and VGG19 are selected as primary candidates for feature extraction. The results are obtained using a linear SVM classifier. The highest classification performance was achieved for the SVM trained on features extracted from the VGG19 network. The preliminary results suggest that the proposed system is an effective solution for the application of cricket highlights generation.
