Aradhika Guha

Federated Learning with Erroneous Communication Links

Jan 31, 2022

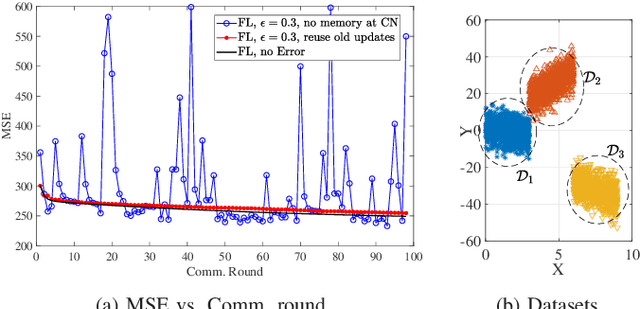

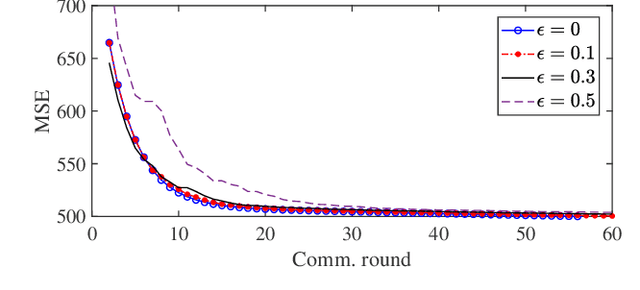

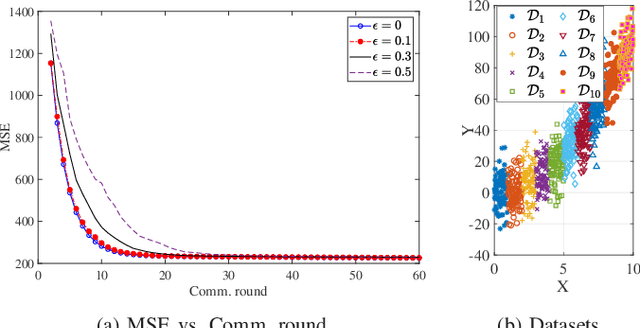

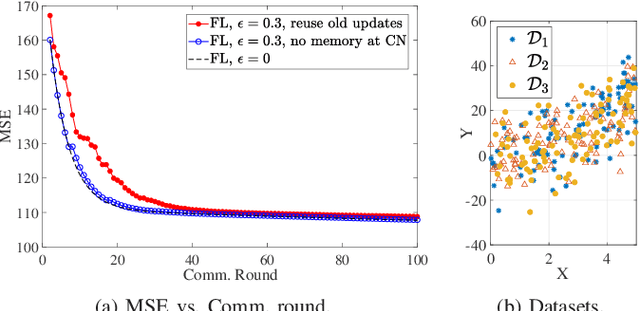

Abstract: In this paper, we consider the federated learning (FL) problem in the presence of communication errors. We model the link between each device and the central node (CN) as a packet erasure channel, where the local parameters sent by a device are erased with probability $e$ or received correctly by the CN with probability $1-e$. We provide a mathematical proof of convergence for the FL algorithm in the presence of communication errors, where the CN reuses past local updates whenever fresh updates are not received from some devices. We show via simulations that, by using past local updates, the FL algorithm can converge in the presence of communication errors. We also show that when the dataset is uniformly distributed among devices, the FL algorithm that uses only fresh updates and discards missing ones might converge faster than the FL algorithm that uses past local updates.
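The two aggregation rules described in the abstract can be illustrated with a small simulation. The following is a minimal sketch, not the authors' implementation: it assumes a toy linear regression task, one local gradient step per round, uniformly (IID) distributed data, and independent erasures across devices. The function name `simulate_fl`, the strategy labels `"reuse_past"` and `"fresh_only"`, and all hyperparameters are hypothetical choices made for illustration.

```python
import numpy as np

def simulate_fl(strategy, e=0.3, num_devices=10, rounds=200, lr=0.1, seed=0):
    """Toy FL over a packet erasure channel (illustrative assumptions only).

    strategy: "reuse_past" -> CN substitutes the last received update
                              for any device whose packet was erased.
              "fresh_only" -> CN averages only the updates received this round.
    e: erasure probability of each device-to-CN link.
    Returns the final mean squared error averaged over devices.
    """
    rng = np.random.default_rng(seed)
    dim, samples = 5, 50
    w_true = rng.normal(size=dim)

    # Assumed IID split: every device draws from the same distribution.
    X = [rng.normal(size=(samples, dim)) for _ in range(num_devices)]
    y = [Xk @ w_true + 0.01 * rng.normal(size=samples) for Xk in X]

    w_global = np.zeros(dim)
    # Last local parameters the CN holds for each device (zeros before any arrive).
    last_received = [np.zeros(dim) for _ in range(num_devices)]

    for _ in range(rounds):
        # Each packet is received with probability 1 - e, erased with probability e.
        received = rng.random(num_devices) >= e
        fresh = []
        for k in range(num_devices):
            grad = X[k].T @ (X[k] @ w_global - y[k]) / samples
            w_local = w_global - lr * grad  # one local gradient step
            if received[k]:
                last_received[k] = w_local
                fresh.append(w_local)

        if strategy == "reuse_past":
            # Average over all devices, using stale parameters where needed.
            w_global = np.mean(last_received, axis=0)
        elif strategy == "fresh_only":
            # Skip the round entirely if every packet was erased.
            if fresh:
                w_global = np.mean(fresh, axis=0)
        else:
            raise ValueError(f"unknown strategy: {strategy}")

    return float(np.mean([np.mean((X[k] @ w_global - y[k]) ** 2)
                          for k in range(num_devices)]))

if __name__ == "__main__":
    for name in ("reuse_past", "fresh_only"):
        print(name, simulate_fl(name))
```

Under these assumptions, both rules should drive the loss well below its initial value. Comparing the loss after a small number of rounds is one way to explore the abstract's observation about convergence speed on uniformly distributed data, although this toy setup is not a substitute for the paper's experiments.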
