Antonio Rodà

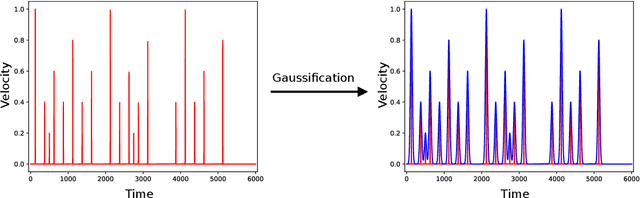

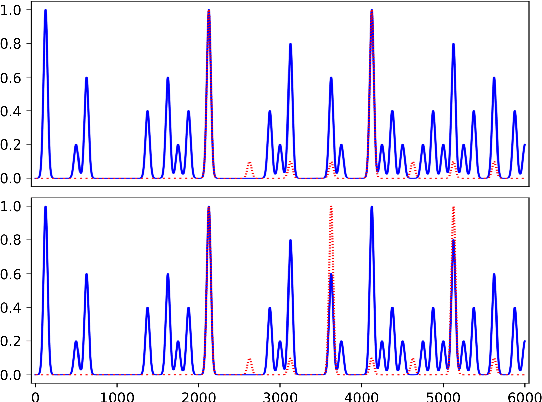

TorchFX: A modern approach to Audio DSP with PyTorch and GPU acceleration

Apr 11, 2025

Abstract: The burgeoning complexity and real-time processing demands of audio signals necessitate optimized algorithms that harness the computational prowess of Graphics Processing Units (GPUs). Existing Digital Signal Processing (DSP) libraries often fall short in delivering the requisite efficiency and flexibility, particularly in integrating Artificial Intelligence (AI) models. In response, we introduce TorchFX: a GPU-accelerated Python library for DSP, specifically engineered to facilitate sophisticated audio signal processing. Built atop the PyTorch framework, TorchFX offers an Object-Oriented interface that emulates the usability of torchaudio, enhancing functionality with a novel pipe operator for intuitive filter chaining. This library provides a comprehensive suite of Finite Impulse Response (FIR) and Infinite Impulse Response (IIR) filters, with a focus on multichannel audio files, thus facilitating the integration of DSP and AI-based approaches. Our benchmarking results demonstrate significant efficiency gains over traditional libraries like SciPy, particularly in multichannel contexts. Despite current limitations in GPU compatibility, ongoing developments promise broader support and real-time processing capabilities. TorchFX aims to become a useful tool for the community, contributing to innovation and progress in DSP with GPU acceleration. TorchFX is publicly available on GitHub at https://github.com/matteospanio/torchfx.
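
To picture what chaining filters with a pipe operator means, here is a minimal pure-Python sketch. It does not use TorchFX or PyTorch and is not the library's API: the classes `Filter`, `Chain`, `FIR` and `Gain` are hypothetical, signals are plain single-channel lists rather than multichannel GPU tensors, and the only assumption is that Python's `__or__`/`__ror__` overloading can express the idea.

```python
from __future__ import annotations

from typing import List, Sequence

Signal = List[float]  # hypothetical: one channel of samples as a plain list


class Filter:
    """Hypothetical base class for a filter that can be chained with `|`."""

    def __call__(self, x: Signal) -> Signal:
        raise NotImplementedError

    def __or__(self, other: Filter) -> Chain:
        # `f | g` builds a chain that applies f first, then g.
        return Chain([self, other])

    def __ror__(self, x: Signal) -> Signal:
        # `signal | f` lands here because list defines no `__or__`.
        return self(x)


class Chain(Filter):
    """Applies its stages left to right."""

    def __init__(self, stages: Sequence[Filter]) -> None:
        self.stages = list(stages)

    def __call__(self, x: Signal) -> Signal:
        for stage in self.stages:
            x = stage(x)
        return x

    def __or__(self, other: Filter) -> Chain:
        # Flatten `(f | g) | h` into a single three-stage chain.
        return Chain(self.stages + [other])


class FIR(Filter):
    """Direct-form FIR filter: y[n] = sum_k b[k] * x[n - k], zero initial state."""

    def __init__(self, b: Sequence[float]) -> None:
        self.b = list(b)

    def __call__(self, x: Signal) -> Signal:
        y = []
        for n in range(len(x)):
            acc = 0.0
            for k, bk in enumerate(self.b):
                if n - k >= 0:
                    acc += bk * x[n - k]
            y.append(acc)
        return y


class Gain(Filter):
    """Multiplies every sample by a constant factor."""

    def __init__(self, g: float) -> None:
        self.g = g

    def __call__(self, x: Signal) -> Signal:
        return [self.g * v for v in x]


if __name__ == "__main__":
    impulse = [1.0, 0.0, 0.0, 0.0]
    smooth_and_boost = FIR([0.5, 0.5]) | Gain(2.0)  # reusable chain
    print(smooth_and_boost(impulse))                 # [1.0, 1.0, 0.0, 0.0]
    print(impulse | FIR([0.5, 0.5]) | Gain(2.0))     # same result
```

In this sketch `FIR(...) | Gain(...)` builds a reusable chain, while `signal | FIR(...) | Gain(...)` filters a signal directly. Routing both through the same operator is one plausible design, not necessarily how TorchFX implements it.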

A Multimodal Symphony: Integrating Taste and Sound through Generative AI

Mar 04, 2025

Abstract: In recent decades, neuroscientific and psychological research has traced direct relationships between taste and auditory perception. This article explores multimodal generative models capable of converting taste information into music, building on this foundational research. We provide a brief review of the state of the art in this field, highlighting key findings and methodologies. We present an experiment in which a fine-tuned version of a generative music model (MusicGEN) is used to generate music based on detailed taste descriptions provided for each musical piece. The results are promising: according to the participants' ($n=111$) evaluation, the fine-tuned model produces music that reflects the input taste descriptions more coherently than the non-fine-tuned model does. This study represents a significant step towards understanding and developing embodied interactions between AI, sound, and taste, opening new possibilities in the field of generative AI. We release our dataset, code and pre-trained model at: https://osf.io/xs5jy/.

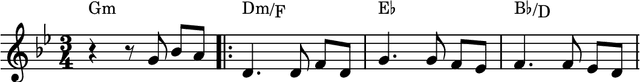

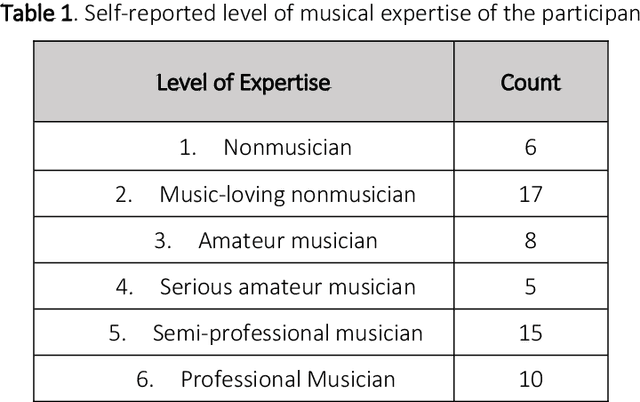

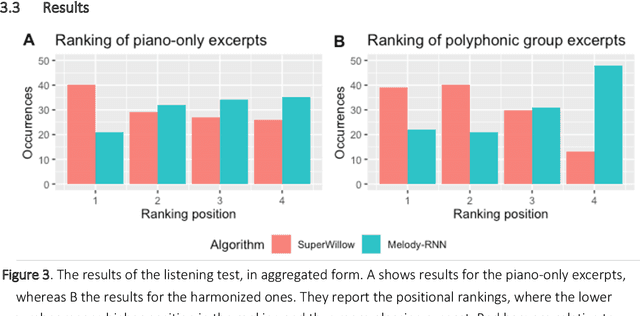

Harmonization and Evaluation; Tweaking the Parameters on Human Listeners

Aug 31, 2022

Abstract: Kansei models were used to study the connotative meaning of music. In multimedia and mixed reality, automatically generated melodies are increasingly being used, so it is important to consider whether this music communicates feelings, and which ones. Evaluating computer-generated melodies is not a trivial task. Given the difficulty of defining useful quantitative metrics of the quality of a generated musical piece, researchers often resort to human evaluation. In these evaluations, judges are often required to evaluate a set of generated pieces along with some benchmark pieces, which are often composed by humans. While this kind of evaluation is relatively common, it is known that care should be taken when designing the experiment, as humans can be influenced by a variety of factors. In this paper, we examine the impact of the presence of harmony in the audio files that judges must evaluate, to see whether having an accompaniment can change the evaluation of generated melodies. To do so, we generate melodies with two different algorithms, harmonize them with an automatic tool that we designed for this experiment, and ask more than sixty participants to evaluate the melodies. Using statistical analyses, we show that harmonization does impact the evaluation process by emphasizing the differences among judgements.

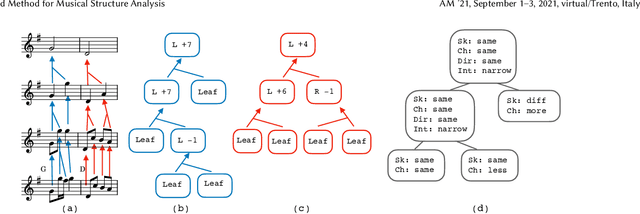

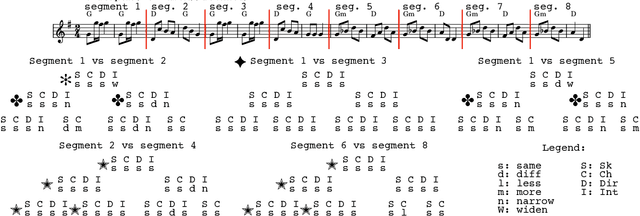

A New Corpus for Computational Music Research and A Novel Method for Musical Structure Analysis

Aug 31, 2022

Abstract: Computational models of music, while providing good descriptions of melodic development, still cannot fully grasp the general structure composed of repetitions, transpositions, and reuse of melodic material. We present a corpus of strongly structured baroque allemandes and describe a top-down approach to abstracting the shared structure of their musical content using tree representations produced from pairwise differences between the Schenkerian-inspired analyses of each piece, thereby providing a rich hierarchical description of the corpus.

A Real-Time Tempo and Meter Tracking System for Rhythmic Improvis

Aug 31, 2022

Abstract: Music is a form of expression that often requires interaction between players. If one wishes to interact in such a musical way with a computer, it is necessary for the machine to be able to interpret the input given by the human to find its musical meaning. In this work, we propose a system capable of detecting basic rhythmic features that can allow an application to synchronize its output with the rhythm given by the user, without having any prior agreement or requirement on the possible input. The system is described in detail and an evaluation is given through simulation using quantitative metrics. The evaluation shows that the system can detect tempo and meter consistently under certain settings, and could be a solid base for further developments leading to a system robust to rhythmically changing inputs.
