Antoni Bert Chan

ALdataset: a benchmark for pool-based active learning

Oct 16, 2020

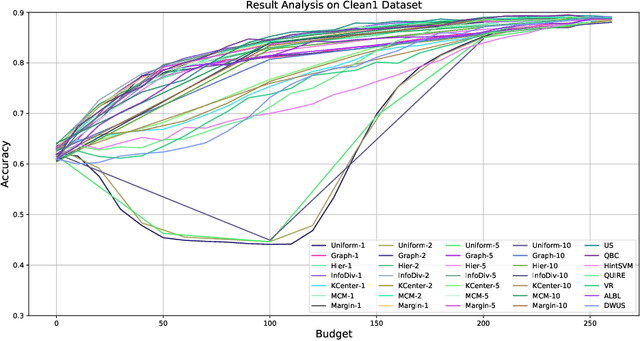

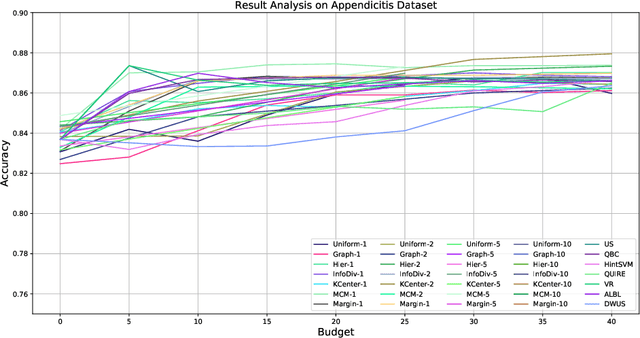

Abstract: Active learning (AL) is a subfield of machine learning (ML) in which a learning algorithm can achieve good accuracy with fewer training samples by interactively querying a user or oracle to label new data points. Pool-based AL is well motivated in many ML tasks where unlabeled data is abundant but labels are hard to obtain. Although many pool-based AL methods have been developed, the lack of comparative benchmarking and integration of techniques makes it difficult to: 1) determine the current state-of-the-art technique; 2) evaluate the relative benefit of new methods for various properties of the dataset; 3) understand what specific problems merit greater attention; and 4) measure the progress of the field over time. To make comparative evaluation of AL methods easier, we present a benchmark task for pool-based active learning, which consists of benchmarking datasets and quantitative metrics that summarize overall performance. We present experimental results for various active learning strategies, both recently proposed and classic highly cited methods, and draw insights from the results.
