Anouar Kherchouche

Detect and Defense Against Adversarial Examples in Deep Learning using Natural Scene Statistics and Adaptive Denoising

Jul 12, 2021

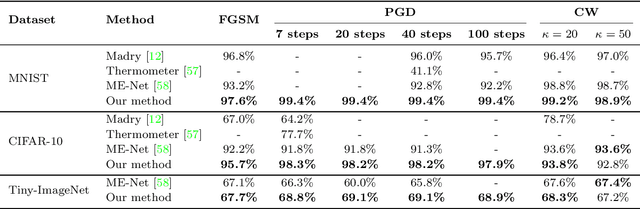

Abstract: Despite the remarkable performance of deep neural networks (DNNs), recent studies have shown their vulnerability to adversarial examples (AEs), i.e., carefully perturbed inputs designed to fool the targeted DNN. The literature is rich with effective attacks for crafting such AEs, and many defense strategies have been developed to mitigate this vulnerability. However, these defenses have proven effective only against specific attacks and do not generalize well to different attacks. In this paper, we propose a framework for defending a DNN classifier against adversarial samples. The proposed method is a two-stage framework involving a separate detector and a denoising block. The detector aims to detect AEs by characterizing them through natural scene statistics (NSS); we demonstrate that these statistical features are altered by the presence of adversarial perturbations. The denoiser is based on the block-matching 3D (BM3D) filter, fed by an optimum threshold value estimated by a convolutional neural network (CNN), to project the samples detected as AEs back onto their data manifold. We conducted a comprehensive evaluation on three standard datasets, namely MNIST, CIFAR-10 and Tiny-ImageNet. The experimental results show that the proposed defense method outperforms state-of-the-art defense techniques by improving the robustness against a set of attacks under black-box, gray-box and white-box settings. The source code is available at: https://github.com/kherchouche-anouar/2DAE
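The two-stage control flow described in the abstract (detect first, denoise only the flagged inputs, then classify) can be illustrated with a minimal sketch. This is not the authors' implementation: the function `defend` and the toy detector, denoiser and classifier below are hypothetical stand-ins chosen so the example runs without dependencies. In the paper, the detector relies on NSS features and the denoiser on BM3D with a CNN-estimated threshold.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # hypothetical 2-D grayscale image, pixels nominally in [0, 1]


def defend(
    image: Image,
    is_adversarial: Callable[[Image], bool],
    denoise: Callable[[Image], Image],
    classify: Callable[[Image], int],
) -> Tuple[int, bool]:
    """Detect-then-denoise routing: only flagged inputs are denoised.

    Returns (predicted_label, was_flagged).
    """
    flagged = is_adversarial(image)
    cleaned = denoise(image) if flagged else image
    return classify(cleaned), flagged


# --- Toy stand-ins (assumptions for illustration only) ---

def toy_detector(image: Image) -> bool:
    # Stand-in for the NSS-based detector: flag any out-of-range pixel.
    return any(p < 0.0 or p > 1.0 for row in image for p in row)


def toy_denoiser(image: Image) -> Image:
    # Stand-in for the BM3D-based denoiser: clamp pixels back into [0, 1].
    return [[min(1.0, max(0.0, p)) for p in row] for row in image]


def toy_classifier(image: Image) -> int:
    # Stand-in for the protected DNN: label 1 if the mean intensity exceeds 0.5.
    pixels = [p for row in image for p in row]
    return int(sum(pixels) / len(pixels) > 0.5)


clean = [[0.2, 0.3], [0.1, 0.4]]
perturbed = [[0.2, 0.3], [0.1, 3.0]]  # one pixel pushed far out of range

undefended_label = toy_classifier(perturbed)
clean_result = defend(clean, toy_detector, toy_denoiser, toy_classifier)
defended_result = defend(perturbed, toy_detector, toy_denoiser, toy_classifier)
```

In this toy setting the perturbed input flips the undefended classifier's label, while the defended path flags it, denoises it, and recovers the label of the clean input. Clean inputs bypass the denoiser entirely, which is the motivation for keeping the detector separate.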
