Anika Schumann

From Time Series to Euclidean Spaces: On Spatial Transformations for Temporal Clustering

Oct 02, 2020

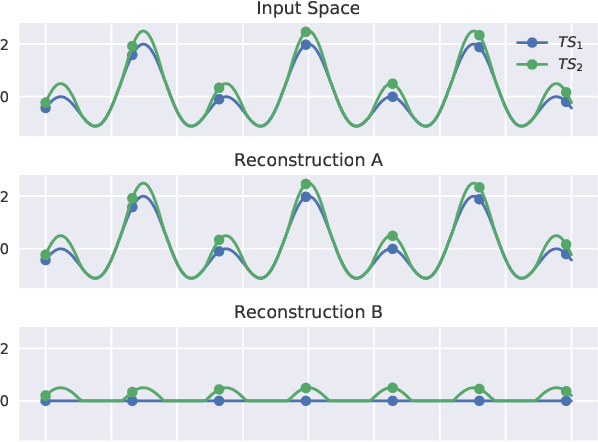

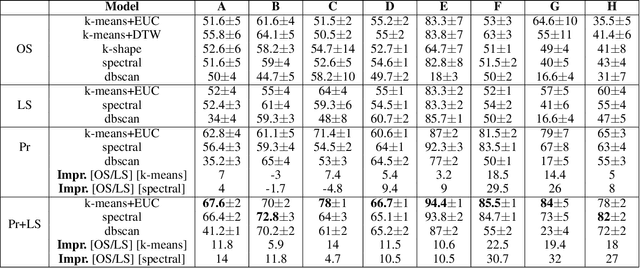

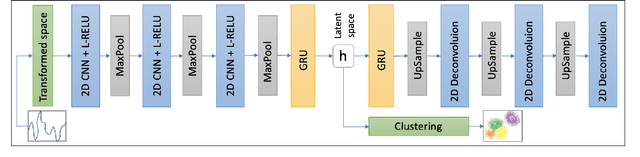

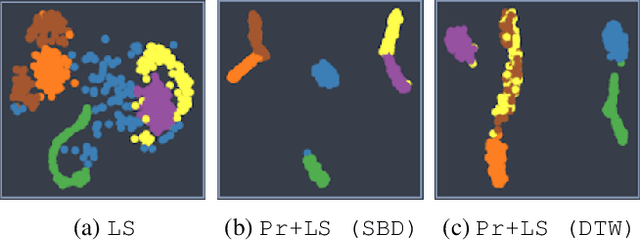

Abstract: Unsupervised clustering of temporal data is both challenging and crucial in machine learning. In this paper, we show that neither traditional clustering methods, nor time-series-specific methods, nor even deep learning-based alternatives generalise well when both varying sampling rates and high dimensionality are present in the input data. We propose a novel approach to temporal clustering, in which we (1) transform the input time series into a distance-based projected representation by using similarity measures suitable for dealing with temporal data, (2) feed these projections into a multi-layer CNN-GRU autoencoder to generate meaningful domain-aware latent representations, which ultimately (3) allow for a natural separation of clusters that is beneficial for the most important traditional clustering algorithms. We evaluate our approach on time series datasets from various domains and show that it not only outperforms existing methods in all cases, by up to 32%, but is also robust and incurs negligible computational overhead.
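Step (1) of the abstract can be illustrated with a small sketch. The abstract does not name the similarity measure or how the projection is built, so everything here is an assumption: dynamic time warping (DTW) stands in for the "similarity measure suitable for temporal data", and the hypothetical helper `project` represents each series by its distances to a fixed set of reference series. The CNN-GRU autoencoder of step (2) is not shown.

```python
from math import inf


def dtw_distance(a, b):
    """Dynamic time warping distance with absolute-difference cost.

    Assumption: DTW is used here only as one example of a similarity
    measure that tolerates different lengths and sampling rates.
    """
    n, m = len(a), len(b)
    prev = [inf] * (m + 1)
    prev[0] = 0.0  # empty prefix aligned with empty prefix
    for i in range(1, n + 1):
        curr = [inf] * (m + 1)
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible warping moves.
            curr[j] = cost + min(prev[j], curr[j - 1], prev[j - 1])
        prev = curr
    return prev[m]


def project(series, references):
    """Map each series to a fixed-length vector of distances to the references.

    Hypothetical helper: the output has one row per series and one column
    per reference, regardless of the original series lengths.
    """
    return [[dtw_distance(s, r) for r in references] for s in series]


# Series of different lengths (e.g. different sampling rates).
series = [[1, 2, 3], [1, 1, 2, 2, 3, 3], [5, 5, 5]]
references = [[1, 2, 3], [5, 5, 5]]
projected = project(series, references)
```

In this sketch the first two series, which differ only in sampling rate, receive the same distance to the first reference, while every row has the same length and could therefore be fed to a fixed-input model.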

Explainable Failure Predictions with RNN Classifiers based on Time Series Data

Jan 20, 2019

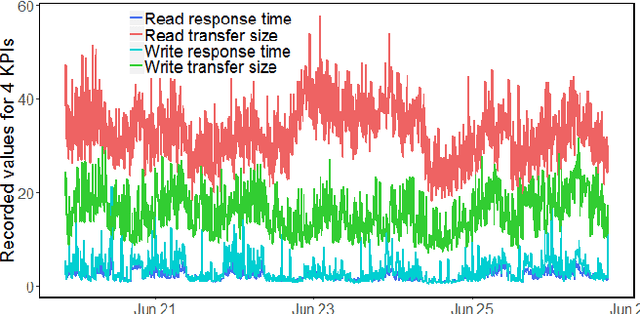

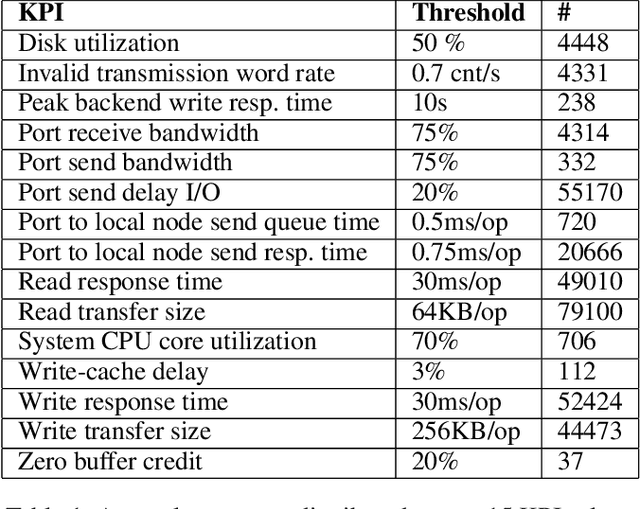

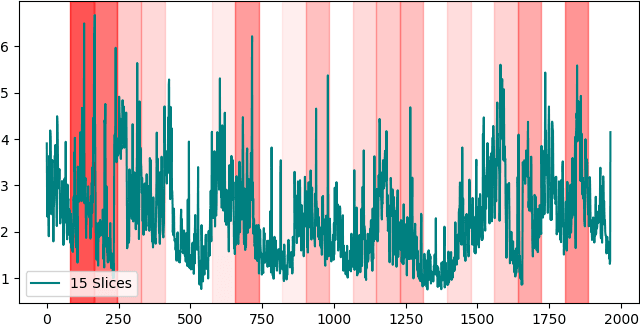

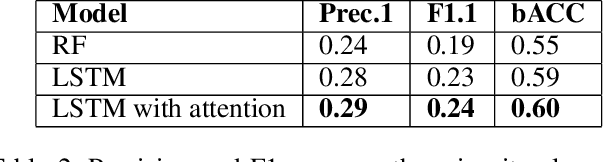

Abstract: Given key performance indicators collected with fine granularity as time series, our aim is to predict and explain failures in storage environments. Although explainable predictive modeling based on spiky telemetry data is key in many domains, current approaches cannot tackle this problem. Deep learning methods suitable for sequence modeling and learning temporal dependencies, such as RNNs, are effective but opaque from an explainability perspective. Our approach first extracts the anomalous spikes from time series as events and then builds an RNN classifier with attention mechanisms to embed the irregularity and frequency of these events. A preliminary evaluation on real-world storage environments shows that our approach can predict failures within a 3-day prediction window with accuracy comparable to that of traditional RNN-based classifiers. At the same time, it can explain the predictions by returning the key anomalous events that led to those failure predictions.
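The first stage described above, turning spikes in a time series into discrete events, might look like the following sketch. The abstract does not say how spikes are detected, so the z-score rule, the `threshold` parameter, and the `extract_spike_events` name are all assumptions made for illustration. The `gap` field is one simple way to expose the irregular spacing between events to a downstream classifier; the attention-based RNN itself is not shown.

```python
from statistics import mean, pstdev


def extract_spike_events(values, threshold=3.0):
    """Return spikes as events using a global z-score rule (an assumption).

    Each event records its position, its value, and the number of samples
    since the previous event (None for the first event).
    """
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # a constant series has no spikes
    events = []
    last_idx = None
    for idx, v in enumerate(values):
        z = (v - mu) / sigma
        if abs(z) >= threshold:
            gap = None if last_idx is None else idx - last_idx
            events.append({"index": idx, "value": v, "gap": gap})
            last_idx = idx
    return events


# Synthetic telemetry: a flat signal with two spikes.
telemetry = [1.0] * 50 + [100.0] + [1.0] * 50 + [100.0] + [1.0] * 10
events = extract_spike_events(telemetry)
```

A global z-score is sensitive to the spikes it is trying to detect, so a rolling or robust statistic may be a better fit for real telemetry; the sketch only shows the shape of the event representation.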
