Aneek Barman Roy

Translation, Sentiment and Voices: A Computational Model to Translate and Analyse Voices from Real-Time Video Calling

Sep 28, 2019

Abstract: With the internet quickly becoming easily accessible to many, voice calling over the internet is slowly gaining momentum. Individuals have been engaging in video communication across the world in different languages. The decade also saw the emergence of language translation using neural networks. With more data being generated in audio and visual forms, analysing such information has become both a need and a challenge for many researchers in academia and industry. The availability of video chat corpora is limited, as organizations protect user privacy and ensure data security. For this reason, an audio-visual communication system (VidALL) was developed and audio speech was extracted. To understand human nature while answering a video call, an analysis was conducted in which polarity and vocal intensity were considered as parameters. Simultaneously, a neural translation model was developed to translate English sentences to French. Simple RNN-based and Embedded-RNN-based models were used for the translation model. BLEU score and target sentence comparators were used to check sentence correctness. The Embedded-RNN model showed an accuracy of 88.71 percent and predicted correct sentences. A key finding suggests that polarity is a good estimator for understanding human emotion.
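The abstract mentions BLEU as a check on translated sentences. As a rough illustration of what such a score measures, the following is a minimal sketch of sentence-level BLEU against a single reference, assuming whitespace tokenization and no smoothing. The function names and the French sample sentences are hypothetical and are not taken from the paper; the authors' actual evaluation setup may differ.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def sentence_bleu(reference, candidate, max_n=4):
    """Unsmoothed sentence-level BLEU against one reference (illustrative only)."""
    ref = reference.split()
    cand = candidate.split()
    if not cand:
        return 0.0

    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = Counter(ngrams(cand, n))
        ref_counts = Counter(ngrams(ref, n))
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref_counts[gram])
                      for gram, count in cand_counts.items())
        # Without smoothing, any zero precision makes the whole score zero.
        if total == 0 or clipped == 0:
            return 0.0
        log_precisions.append(math.log(clipped / total))

    # Brevity penalty: penalize candidates shorter than the reference.
    if len(cand) >= len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(cand))

    # Geometric mean of the n-gram precisions, scaled by the brevity penalty.
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


# Hypothetical sample sentences, not from the paper's data.
reference = "le chat est assis sur le tapis"
exact = sentence_bleu(reference, "le chat est assis sur le tapis")
partial = sentence_bleu(reference, "le chat est assis sur un tapis")
```

An exact match scores 1.0, while a candidate that differs by one word scores strictly between 0 and 1. Because the sketch is unsmoothed, short sentences with no matching 4-gram score 0.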

A Discussion on Influence of Newspaper Headlines on Social Media

Sep 05, 2019

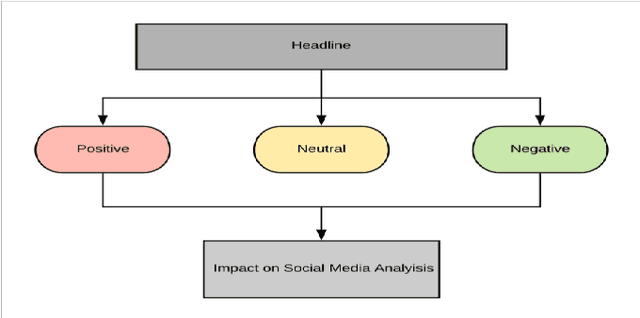

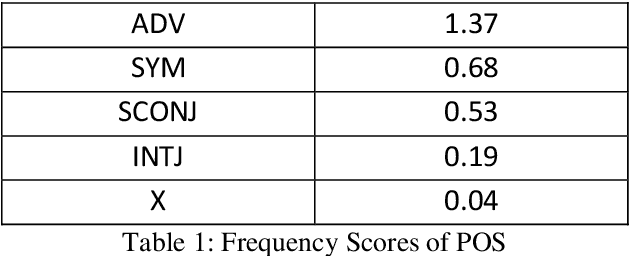

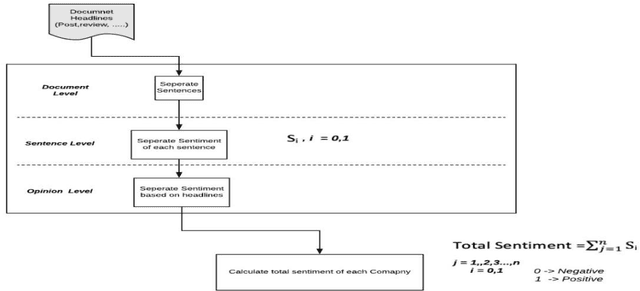

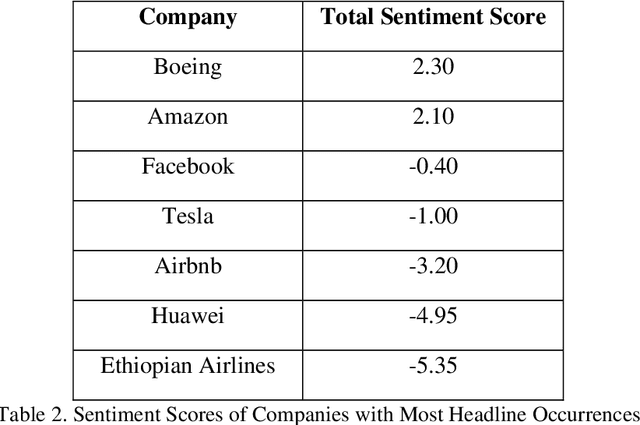

Abstract: Newspaper headlines have a strong influence on social media. This work studies the durability of the impact of verbs and adjectives in headlines and determines the factors responsible for the nature of their influence on social media. Each headline has been categorized as positive, negative or neutral based on its sentiment score. Initial results show that the intensity of a sentiment is positively correlated with the social media impression. Additionally, verbs and adjectives show a relation with the sentiment scores.
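The categorization step described in the abstract can be pictured as a simple thresholding of a sentiment score. The sketch below assumes scores lie in the range -1 to 1 and uses a hypothetical neutral band of 0.05 on either side of zero; the abstract does not state the scoring tool or the cut-offs used, so both are assumptions made for illustration.

```python
def categorize_headline(score, threshold=0.05):
    """Map a sentiment score to a label.

    Assumes score is in [-1, 1]; the threshold value is hypothetical
    and not taken from the paper.
    """
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


# Hypothetical scores for three headlines.
labels = [categorize_headline(s) for s in (0.62, -0.40, 0.01)]
```

Here `labels` comes out as positive, negative and neutral in that order. The absolute value of the score could then serve as the sentiment intensity that the abstract relates to social media impression.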

Hand Gesture Detection and Conversion to Speech and Text

Nov 29, 2018

Abstract: Hand gestures are one of the typical methods used in sign language. It is very difficult for hearing-impaired people to communicate with the world. This project presents a solution that not only automatically recognizes hand gestures but also converts them into speech and text output so that a hearing-impaired person can easily communicate with hearing people. A camera attached to a computer captures images of the hand, and contour feature extraction is used to recognize the person's hand gestures. Based on the recognized gesture, the corresponding recorded soundtrack is played.

* 5 pages, 5 figures, International Conference on Innovations and Discoveries in Science, Engineering and Technology (ICIDSET) 2018
