Andrew Speck

Subspace-Constrained Continuous Methane Leak Monitoring and Optimal Sensor Placement

Aug 03, 2023

Abstract: This work presents a procedure that can quickly identify and isolate methane emission sources, enabling prompt remediation. Minimizing the time required to identify a leak and the subsequent time to dispatch repair crews can significantly reduce the amount of methane released into the atmosphere. The procedure uses low-cost methane sensors permanently installed at an oilfield facility to continuously monitor leaked gas concentration above background levels. Methods are presented for optimal sensor placement and leak inversion that account for predefined subspaces and restricted zones. In particular, subspaces represent regions comprising one or more equipment items that may leak, and restricted zones define regions in which a sensor may not be placed because of site restrictions. Thus, subspaces constrain the inversion problem to specified locales, while restricted zones constrain sensor placement to feasible zones. Wind models, both synthetic and based on historical data, are also developed as a means to accommodate optimal sensor placement under wind uncertainty. The wind models serve as realizations for planning purposes, with the aim of maximizing the mean coverage measure for a given number of sensors. Once the optimal design is established, continuous real-time monitoring permits localization and quantification of a methane leak source. The necessary methods, mathematical formulation, and demonstrative test results are presented.

Lidar-Monocular Surface Reconstruction Using Line Segments

Apr 06, 2021

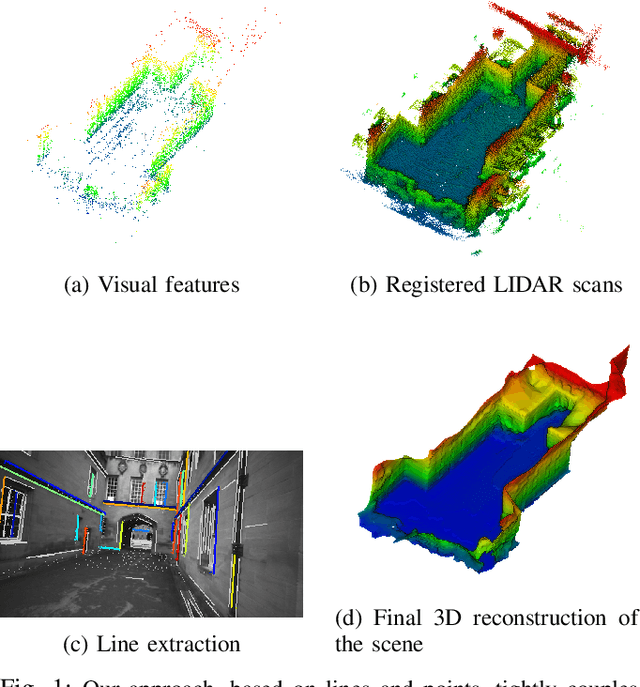

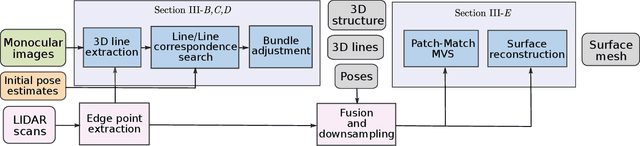

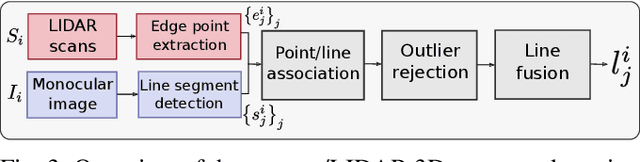

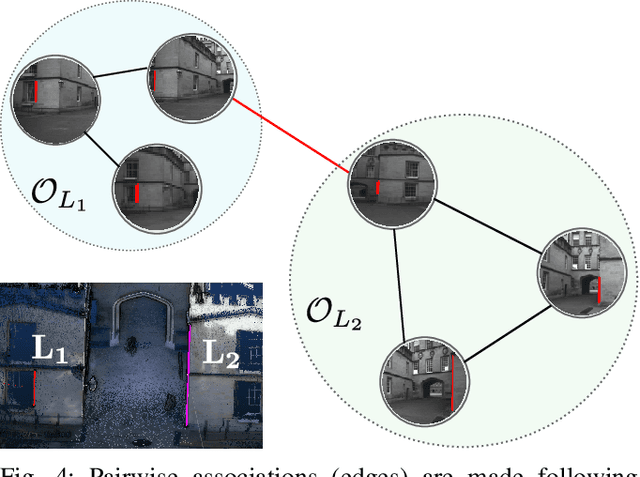

Abstract: Structure from Motion (SfM) often fails to estimate accurate poses in environments that lack suitable visual features. In such cases, the quality of the final 3D mesh, which is contingent on the accuracy of those estimates, is reduced. One way to overcome this problem is to combine data from a monocular camera with that of a LIDAR. This allows fine details and texture to be captured while still accurately representing featureless subjects. However, fusing these two sensor modalities is challenging due to their fundamentally different characteristics. Rather than directly fusing image features and LIDAR points, we propose to leverage common geometric features that are detected in both the LIDAR scans and image data, allowing data from the two sensors to be processed in a higher-level space. In particular, we propose to find correspondences between 3D lines extracted from LIDAR scans and 2D lines detected in images before performing a bundle adjustment to refine poses. We also exploit the detected and optimized line segments to improve the quality of the final mesh. We test our approach on the recently published Newer College Dataset. We compare the accuracy and the completeness of the 3D mesh to a ground truth obtained with a survey-grade 3D scanner. We show that our method delivers results that are comparable to a state-of-the-art LIDAR survey while not requiring highly accurate ground truth pose estimates.
