Andreea Birhala

ReflectNet -- A Generative Adversarial Method for Single Image Reflection Suppression

May 11, 2021

Abstract: Taking pictures through glass windows almost always produces undesired reflections that degrade the quality of the photo. The ill-posed nature of the reflection removal problem has attracted the attention of many researchers for decades. The main challenge of this problem is the lack of real training data and the necessity of generating realistic synthetic data. In this paper, we propose a single image reflection removal method based on context understanding modules and adversarial training to efficiently restore the transmission layer without reflection. We also propose a complex data generation model in order to create a large training set with various types of reflections. Our proposed reflection removal method outperforms state-of-the-art methods in terms of PSNR and SSIM on the SIR benchmark dataset.
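For readers unfamiliar with the PSNR metric named in the abstract, the following is a minimal sketch of the standard definition, not the paper's evaluation code. The function name `psnr`, the flat-list pixel representation, and the default `max_value` of 255 (8-bit images) are assumptions made for illustration.

```python
import math

def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences.

    `reference` and `estimate` are flat sequences of pixel intensities
    (hypothetical representation chosen for this sketch).
    """
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between the two images.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher PSNR means the restored transmission layer is closer, pixel by pixel, to the ground-truth image. SSIM, the other metric mentioned, compares local structure instead and is not sketched here.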

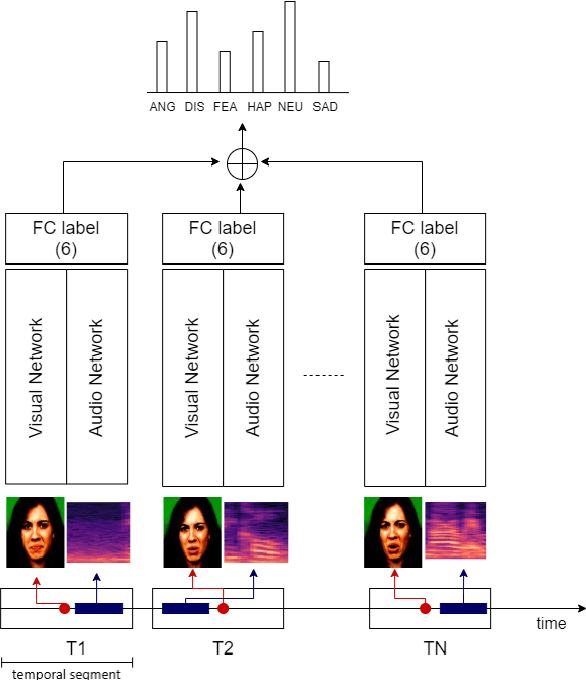

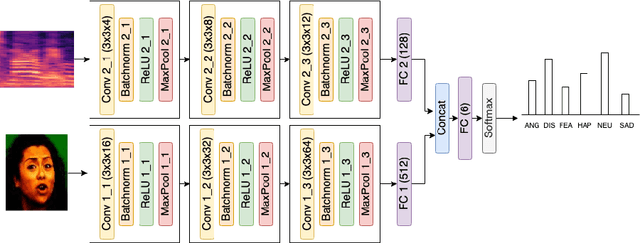

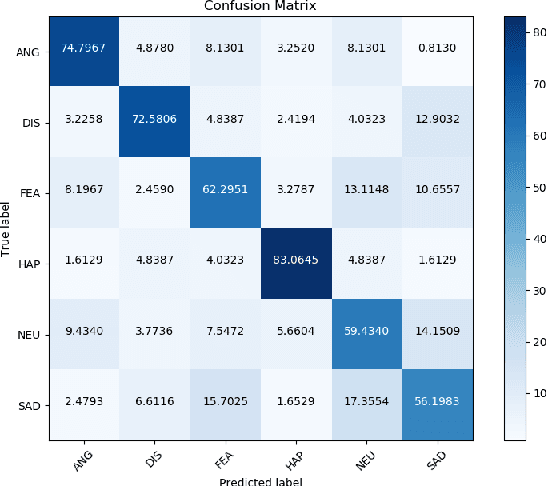

Temporal aggregation of audio-visual modalities for emotion recognition

Jul 08, 2020

Abstract: Emotion recognition has a pivotal role in affective computing and in human-computer interaction. Current technological developments lead to increased possibilities of collecting data about the emotional state of a person. In general, human perception of the emotion transmitted by a subject is based on vocal and visual information collected in the first seconds of interaction with the subject. As a consequence, the integration of verbal (i.e., speech) and non-verbal (i.e., image) information seems to be the preferred choice in most current approaches to emotion recognition. In this paper, we propose a multimodal fusion technique for emotion recognition based on combining audio-visual modalities from a temporal window with different temporal offsets for each modality. We show that our proposed method outperforms other methods from the literature as well as human accuracy ratings. The experiments are conducted on the open-access multimodal dataset CREMA-D.
