Andreas Knopp

Efficient Precoding for LEO Satellites: A Low-Complexity Matrix Inversion Method via Woodbury Matrix Identity and arSVD

Dec 18, 2025

Abstract:The increasing deployment of massive active antenna arrays in low Earth orbit (LEO) satellites necessitates computationally efficient and adaptive precoding techniques to mitigate dynamic channel variations and enhance spectral efficiency. Regularized zero-forcing (RZF) precoding is widely used in multi-user MIMO systems; however, its real-time implementation is limited by the computationally intensive inversion of the Gram matrix. In this work, we develop a low-complexity framework that integrates the Woodbury (WB) formula with adaptive randomized singular value decomposition (arSVD) to efficiently update the Gram matrix inverse as the satellite moves along its orbit. By leveraging low-rank perturbations, the WB formula reduces inversion complexity, while arSVD dynamically extracts dominant singular components, further enhancing computational efficiency. Monte Carlo simulations demonstrate that the proposed method achieves computational savings of up to 61% compared to conventional RZF precoding with full matrix inversion, while incurring only a modest degradation in sum-rate performance. These results indicate that WB-arSVD offers a scalable and efficient solution for next-generation satellite communications, facilitating real-time deployment in power-constrained environments.
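
The key step in the abstract above, updating the inverse of the RZF Gram matrix through a low-rank correction instead of inverting it from scratch, rests on the Woodbury identity (A + UCV)^{-1} = A^{-1} - A^{-1}U(C^{-1} + VA^{-1}U)^{-1}VA^{-1}. The pure-Python sketch below illustrates only that identity, under stated assumptions: the Gram matrix is taken as G = HH^H + αI for a K × N channel H, the perturbation of G is assumed to be already available in factored form (a role the paper assigns to arSVD, which is not implemented here), and all function names, the toy channel, and the rank-1 update are hypothetical. It is not the authors' implementation.

```python
"""Woodbury update of a regularized Gram-matrix inverse (illustrative sketch).

Hypothetical helper names; arSVD is NOT implemented, the low-rank factors
U, C, V of the Gram-matrix perturbation are assumed to be given.
"""
from typing import List

Matrix = List[List[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def herm(a: Matrix) -> Matrix:
    """Conjugate (Hermitian) transpose a^H."""
    return [[a[i][j].conjugate() for i in range(len(a))] for j in range(len(a[0]))]


def combine(a: Matrix, b: Matrix, sign: float = 1.0) -> Matrix:
    """Element-wise a + sign * b."""
    return [[x + sign * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def inverse(m: Matrix) -> Matrix:
    """Full O(n^3) Gauss-Jordan inversion with partial pivoting (the baseline cost)."""
    n = len(m)
    aug = [list(row) + [complex(i == j) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def gram_rzf(h: Matrix, alpha: float) -> Matrix:
    """Regularized K x K Gram matrix G = H H^H + alpha I for a K x N channel H."""
    g = matmul(h, herm(h))
    return [[g[i][j] + (alpha if i == j else 0.0) for j in range(len(g))]
            for i in range(len(g))]


def woodbury_update(a_inv: Matrix, u: Matrix, c: Matrix, v: Matrix) -> Matrix:
    """Return (A + U C V)^{-1} given A^{-1}; only r x r matrices are inverted."""
    a_inv_u = matmul(a_inv, u)                        # K x r
    v_a_inv = matmul(v, a_inv)                        # r x K
    inner = combine(inverse(c), matmul(v, a_inv_u))   # C^{-1} + V A^{-1} U, r x r
    correction = matmul(matmul(a_inv_u, inverse(inner)), v_a_inv)
    return combine(a_inv, correction, -1.0)


if __name__ == "__main__":
    # Toy channel with K = 3 users and N = 4 antennas (arbitrary values).
    h = [[1 + 0.5j, 0.2, -0.3j, 0.7],
         [0.1j, 1.2, 0.4, -0.2 + 0.1j],
         [0.3, -0.6j, 0.9, 0.5]]
    g = gram_rzf(h, alpha=0.1)
    g_inv = inverse(g)

    # Hypothetical rank-1 Hermitian perturbation dG = u c u^H, standing in for
    # the dominant component that arSVD would extract as the satellite moves.
    u = [[0.3], [0.1j], [-0.2]]
    c = [[0.5]]
    g_new = combine(g, matmul(matmul(u, c), herm(u)))

    updated = woodbury_update(g_inv, u, c, herm(u))
    direct = inverse(g_new)
    err = max(abs(x - y) for ru, rd in zip(updated, direct) for x, y in zip(ru, rd))
    print(f"max |Woodbury - direct| = {err:.2e}")
```

The update needs roughly O(K²r) operations against O(K³) for a fresh inversion, which is where the savings come from when the update rank r is much smaller than K; how much of that survives in practice depends on the rank arSVD selects, which this toy example does not model.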

Shaping Rewards, Shaping Routes: On Multi-Agent Deep Q-Networks for Routing in Satellite Constellation Networks

Aug 04, 2024

Abstract:Effective routing in satellite mega-constellations has become crucial for handling increasing traffic loads and more complex network architectures, as well as for integration into 6G networks. To improve adaptability and robustness to unpredictable traffic demands, and to cope efficiently with dynamic routing environments, machine learning-based solutions are being considered. For network control problems, such as optimizing packet forwarding decisions according to Quality of Service requirements and maintaining network stability, deep reinforcement learning techniques have shown promising results. We therefore investigate the viability of multi-agent deep Q-networks for routing in satellite constellation networks. We focus specifically on reward shaping and on quantifying training convergence for the joint optimization of latency and load balancing in static and dynamic scenarios. To address the drawbacks identified, we propose a novel hybrid solution based on centralized learning and decentralized control.

LEO-PNT With Starlink: Development of a Burst Detection Algorithm Based on Signal Measurements

Apr 19, 2023

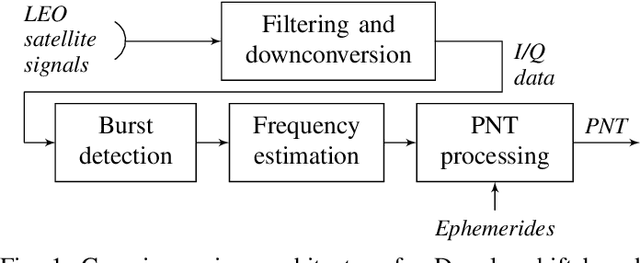

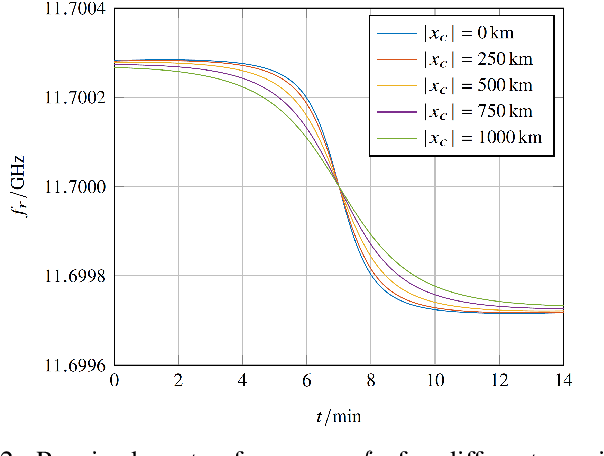

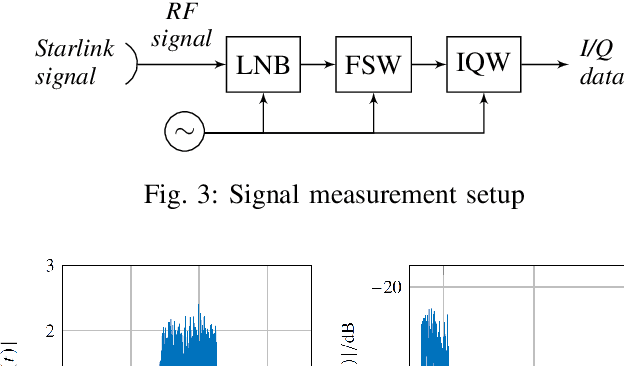

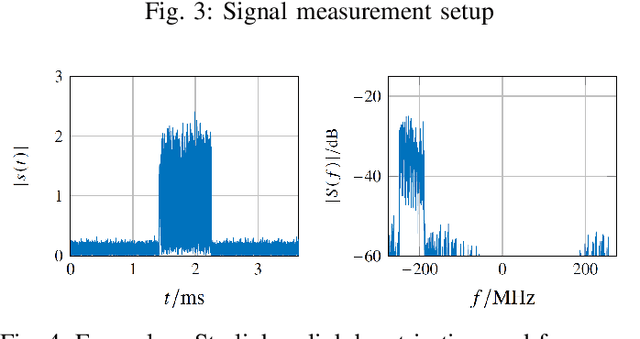

Abstract:Due to the strong dependency of our societies on Global Navigation Satellite Systems and their vulnerability to outages, there is an urgent need for additional navigation systems. One possible approach for such an additional system uses the communication signals of the emerging LEO satellite mega-constellations as signals of opportunity. The Doppler shift of those signals is leveraged to calculate positioning, navigation and timing information. Therefore, the signals have to be detected and their frequency has to be estimated. In this paper, we present the results of Starlink signal measurements. The results are used to develop a novel correlation-based detection algorithm for Starlink burst signals. The carrier frequency of the detected bursts is measured and the attainable positioning accuracy is estimated. It is shown that the presented algorithms are applicable to a navigation solution in an operationally relevant setup using an omnidirectional antenna.
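
The abstract does not spell out the detector, so the following is only a generic illustration of correlation-based burst detection, not the algorithm developed in the paper. A known reference sequence (here a hypothetical random ±1 template, not the actual Starlink burst structure) is slid across the received samples, the normalized cross-correlation magnitude is computed at every lag, and local maxima at or above a threshold (an assumed value of 0.8) are reported as burst starts. Taking the magnitude makes the test insensitive to the unknown carrier phase; a residual Doppler offset, which the sketch ignores, would typically call for a search over candidate frequency shifts before correlating.

```python
"""Generic correlation-based burst detector (illustrative sketch).

The reference template, threshold, and signal model are hypothetical; this
is not the Starlink-specific detector from the paper, and Doppler is ignored.
"""
import cmath
import math
import random
from typing import List, Sequence


def normalized_xcorr(rx: Sequence[complex], ref: Sequence[complex]) -> List[float]:
    """Normalized correlation magnitude |<window, ref>| / (||window|| ||ref||) per lag."""
    n = len(ref)
    ref_norm = math.sqrt(sum(abs(r) ** 2 for r in ref))
    rho = []
    for k in range(len(rx) - n + 1):
        window = rx[k:k + n]
        dot = sum(w * r.conjugate() for w, r in zip(window, ref))
        win_norm = math.sqrt(sum(abs(w) ** 2 for w in window))
        rho.append(abs(dot) / (win_norm * ref_norm) if win_norm > 0 else 0.0)
    return rho


def detect_bursts(rx: Sequence[complex], ref: Sequence[complex],
                  threshold: float = 0.8) -> List[int]:
    """Return lags whose correlation is a local maximum at or above `threshold`."""
    rho = normalized_xcorr(rx, ref)
    hits = []
    for k, value in enumerate(rho):
        left = rho[k - 1] if k > 0 else -1.0
        right = rho[k + 1] if k + 1 < len(rho) else -1.0
        if value >= threshold and value >= left and value > right:
            hits.append(k)
    return hits


if __name__ == "__main__":
    rng = random.Random(7)
    # Hypothetical known template: 64 random +/-1 chips.
    ref = [complex(rng.choice((-1, 1))) for _ in range(64)]
    # Low-level complex Gaussian noise, then one burst at an unknown offset
    # with an unknown carrier phase rotation.
    rx = [complex(rng.gauss(0, 0.05), rng.gauss(0, 0.05)) for _ in range(200)]
    offset, phase = 50, cmath.exp(0.9j)
    for i, chip in enumerate(ref):
        rx[offset + i] += phase * chip
    print("burst start(s):", detect_bursts(rx, ref))
```

Normalizing by the window energy keeps the detection statistic between 0 and 1 regardless of received power, so a single fixed threshold can be used; with measured data that threshold would still have to be tuned against the observed noise floor.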

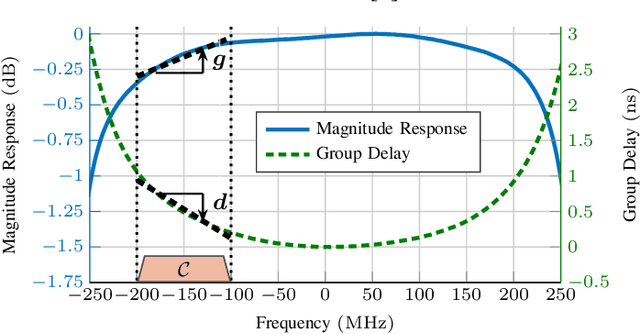

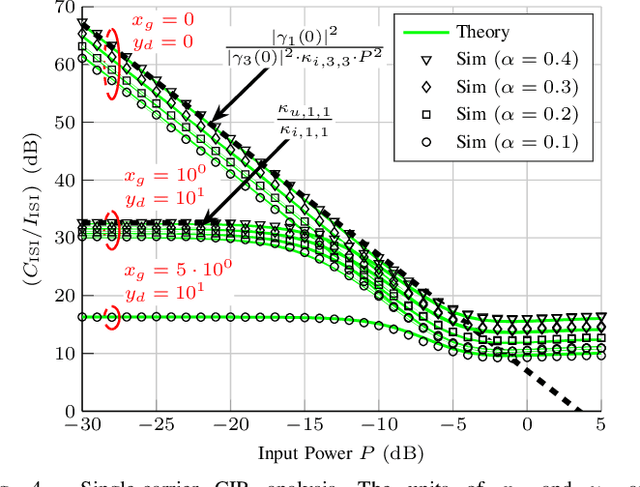

Distortions Characterization for Dynamic Carrier Allocation in Ultra High-Throughput Satellites

May 25, 2021

Abstract:A novel analytical formula for the characterization of linear and nonlinear distortions in future ultra high-throughput communication payloads is proposed in this work. In this context, the carrier-to-interference ratio for single-carrier and multicarrier signals is derived. Analyzing its behavior yields valuable insights, especially regarding the interaction between linear and nonlinear intersymbol interference. Furthermore, the principle of dynamic carrier allocation optimization is highlighted in a realistic scenario. Within the presented framework, it is proven that a significant gain can be achieved even with a limited number of carriers. Finally, a complexity and accuracy analysis emphasizes the practicality of the proposed approach.

Impact of Phase Noise and Oscillator Stability on Ultra-Narrow-Band-IoT Waveforms for Satellite

Jan 15, 2021

Abstract:It has been shown that ultra-narrow-band (uNB) massive machine-type communication using very compact devices with direct access to satellites is possible at ultra-low rates. This enables global ubiquitous coverage for terminals without terrestrial service in the Internet of Remote Things and provides access to any satellite up to geostationary Earth orbit. The lower limit on the data rate of waveforms providing uNB communication is set by the stability and phase noise of the oscillators used. In this paper, we analyze the physical layer of two candidate waveforms, LoRa and Unipolar Coded Chirp-Spread Spectrum (UCSS), with respect to phase noise and oscillator frequency drift. It is found that UCSS is more robust against linear frequency drift, which is the main source of error for uNB transmissions.
