Anders Kirk Uhrenholt

Odd-One-Out Representation Learning

Dec 14, 2020

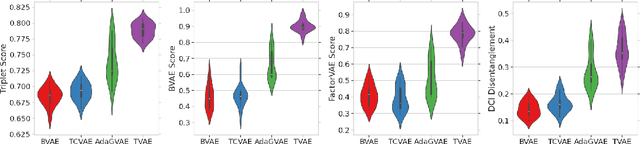

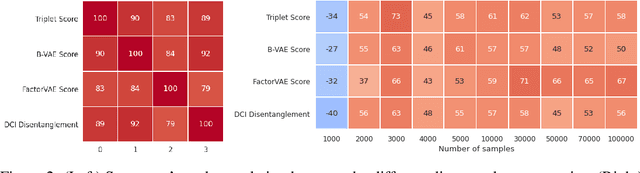

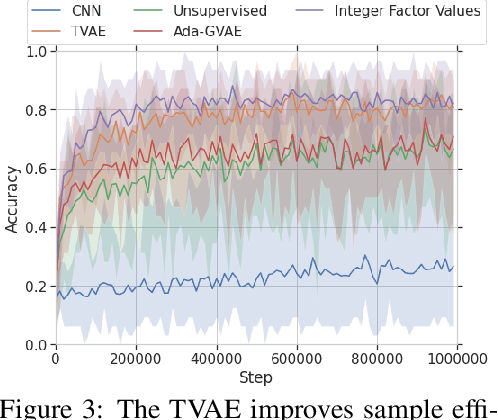

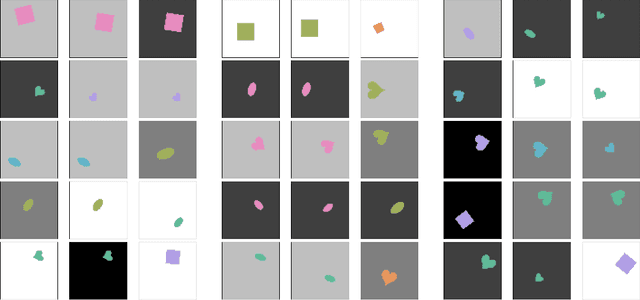

Abstract: The effective application of representation learning to real-world problems requires both techniques for learning useful representations and robust ways to evaluate the properties of those representations. Recent work in disentangled representation learning has shown that unsupervised representation learning approaches rely on fully supervised disentanglement metrics, which assume access to labels for ground-truth factors of variation. In many real-world cases, ground-truth factors are expensive to collect or difficult to model, such as for perception. Here we empirically show that a weakly-supervised downstream task based on odd-one-out observations is suitable for model selection, by observing high correlation with performance on a difficult downstream abstract visual reasoning task. We also show that a bespoke metric-learning VAE model which performs well on this task also outperforms standard unsupervised models and a weakly-supervised disentanglement model across several metrics.

Probabilistic selection of inducing points in sparse Gaussian processes

Oct 31, 2020

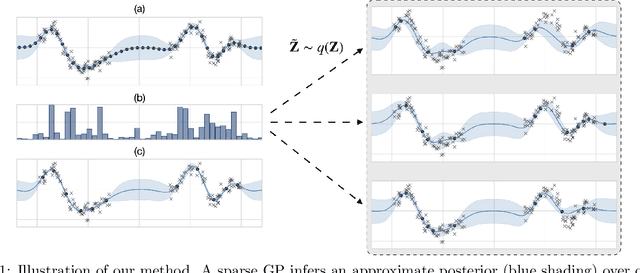

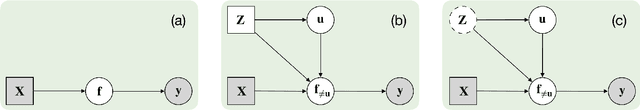

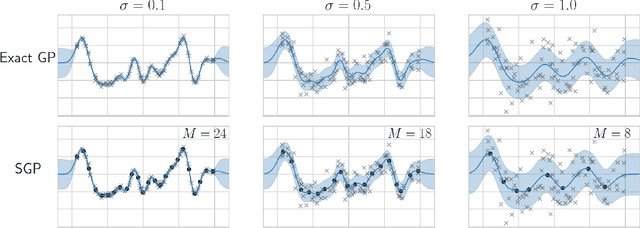

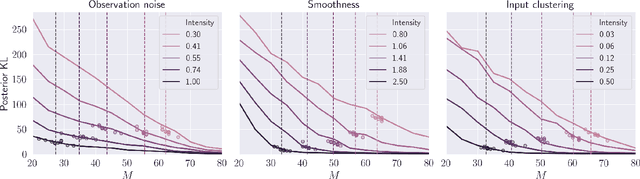

Abstract: Sparse Gaussian processes and various extensions thereof are enabled through inducing points, which simultaneously bottleneck the predictive capacity and act as the main contributor to model complexity. However, the number of inducing points is generally not associated with uncertainty, which prevents us from applying the apparatus of Bayesian reasoning to identify an appropriate trade-off. In this work we place a point process prior on the inducing points and approximate the associated posterior through stochastic variational inference. By letting the prior encourage a moderate number of inducing points, we enable the model to learn which and how many points to utilise. We experimentally show that fewer inducing points are preferred by the model as the points become less informative, and further demonstrate how the method can be applied in deep Gaussian processes and latent variable modelling.
