Anat Brunstein Klomek

Bridging Online Behavior and Clinical Insight: A Longitudinal LLM-based Study of Suicidality on YouTube Reveals Novel Digital Markers

Jun 11, 2025

Abstract:Suicide remains a leading cause of death in Western countries, underscoring the need for new research approaches. As social media becomes central to daily life, digital footprints offer valuable insight into suicidal behavior. Focusing on individuals who attempted suicide while uploading videos to their channels, we investigate: How do suicidal behaviors manifest on YouTube, and how do they differ from expert knowledge? We applied complementary approaches: computational bottom-up, hybrid, and expert-driven top-down, on a novel longitudinal dataset of 181 YouTube channels from individuals with life-threatening attempts, alongside 134 control channels. In the bottom-up approach, we applied LLM-based topic modeling to identify behavioral indicators. Of 166 topics, five were associated with suicide attempts, with two also showing temporal attempt-related changes ($p<.01$): Mental Health Struggles ($+0.08$)* and YouTube Engagement ($+0.1$)*. In the hybrid approach, a clinical expert reviewed LLM-derived topics and flagged 19 as suicide-related. However, none showed significant attempt-related temporal effects beyond those identified bottom-up. Notably, YouTube Engagement, a platform-specific indicator, was not flagged by the expert, underscoring the value of bottom-up discovery. In the top-down approach, psychological assessment of suicide attempt narratives revealed that the only significant difference between individuals who attempted before and those who attempted during their upload period was the motivation to share this experience: the former aimed to Help Others ($\beta=-1.69$, $p<.01$), while the latter framed it as part of their Personal Recovery ($\beta=1.08$, $p<.01$). By integrating these approaches, we offer a nuanced understanding of suicidality, bridging digital behavior and clinical insights.

* Within-group changes in relation to the suicide attempt.

Bored to Death: Artificial Intelligence Research Reveals the Role of Boredom in Suicide Behavior

Apr 26, 2024

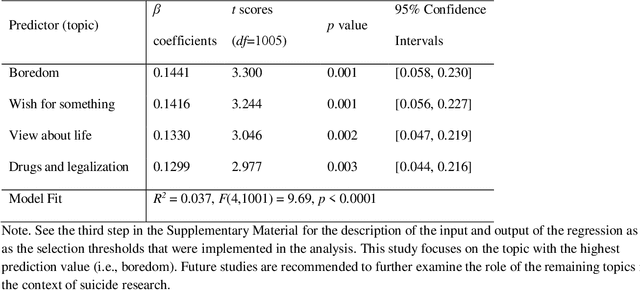

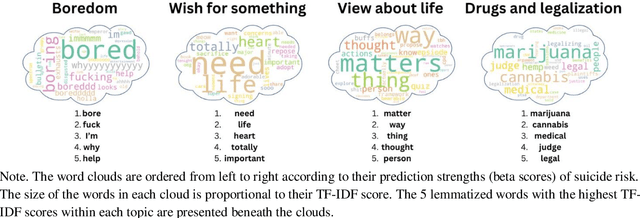

Abstract:Background: Recent advancements in Artificial Intelligence (AI) have contributed significantly to suicide assessment; however, our theoretical understanding of this complex behavior is still limited. Objective: This study aimed to harness AI methodologies to uncover hidden risk factors that trigger or aggravate suicide behaviors. Method: The primary dataset included 228,052 Facebook postings by 1,006 users who completed the gold-standard Columbia Suicide Severity Rating Scale. This dataset was analyzed using a bottom-up research pipeline without a priori hypotheses, and its findings were validated using a top-down analysis of a new dataset. This secondary dataset included responses by 1,062 participants to the same suicide scale as well as to well-validated scales measuring depression and boredom. Results: An almost fully automated, AI-guided research pipeline resulted in four Facebook topics that predicted the risk of suicide, of which the strongest predictor was boredom. A comprehensive literature review using APA PsycInfo revealed that boredom is rarely perceived as a unique risk factor of suicide. A complementing top-down path analysis of the secondary dataset uncovered an indirect relationship between boredom and suicide, which was mediated by depression. An equivalent mediated relationship was observed in the primary Facebook dataset as well. However, here, a direct relationship between boredom and suicide risk was also observed. Conclusions: Integrating AI methods allowed the discovery of an under-researched risk factor of suicide. The study signals boredom as a maladaptive 'ingredient' that might trigger suicide behaviors, regardless of depression. Further studies are recommended to direct clinicians' attention to this burdensome and sometimes existential experience.

The Colorful Future of LLMs: Evaluating and Improving LLMs as Emotional Supporters for Queer Youth

Feb 19, 2024

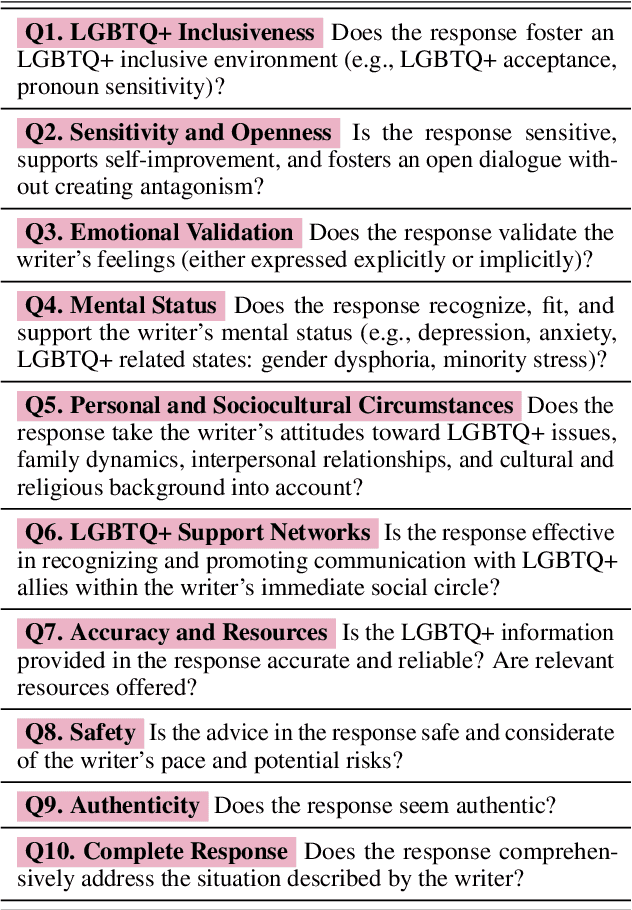

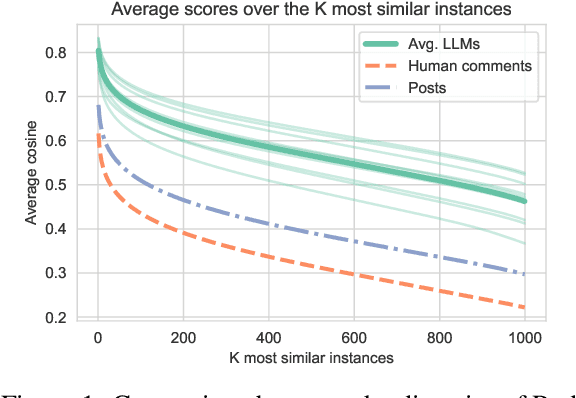

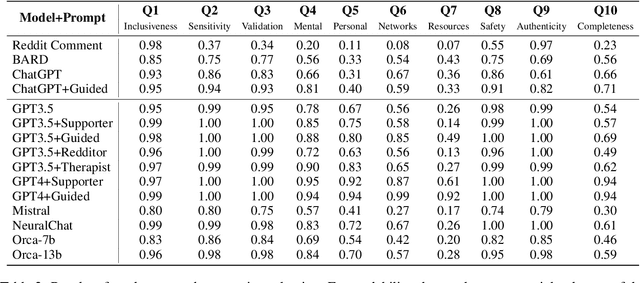

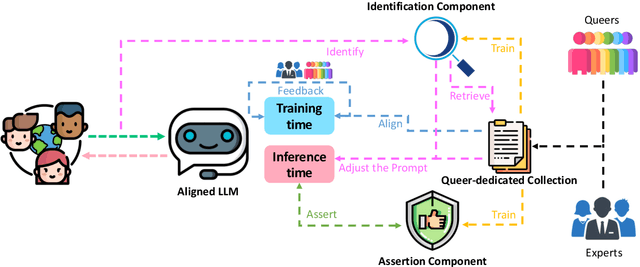

Abstract:Queer youth face increased mental health risks, such as depression, anxiety, and suicidal ideation. Hindered by negative stigma, they often avoid seeking help and rely on online resources, which may provide incompatible information. Although access to a supportive environment and reliable information is invaluable, many queer youth worldwide have no access to such support. However, this could soon change due to the rapid adoption of Large Language Models (LLMs) such as ChatGPT. This paper aims to comprehensively explore the potential of LLMs to revolutionize emotional support for queers. To this end, we conduct a qualitative and quantitative analysis of LLMs' interactions with queer-related content. To evaluate response quality, we develop a novel ten-question scale that is inspired by psychological standards and expert input. We apply this scale to score several LLMs and human comments to posts where queer youth seek advice and share experiences. We find that LLM responses are supportive and inclusive, outscoring humans. However, they tend to be generic, not empathetic enough, and lack personalization, resulting in unreliable and potentially harmful advice. We discuss these challenges, demonstrate that a dedicated prompt can improve the performance, and propose a blueprint of an LLM-supporter that actively (but sensitively) seeks user context to provide personalized, empathetic, and reliable responses. Our annotated dataset is available for further research.

A Picture May Be Worth a Thousand Lives: An Interpretable Artificial Intelligence Strategy for Predictions of Suicide Risk from Social Media Images

Feb 19, 2023

Abstract:The promising research on Artificial Intelligence usages in suicide prevention has principal gaps, including black box methodologies, inadequate outcome measures, and scarce research on non-verbal inputs, such as social media images (despite their popularity today, in our digital era). This study addresses these gaps and combines theory-driven and bottom-up strategies to construct a hybrid and interpretable prediction model of valid suicide risk from images. The lead hypothesis was that images contain valuable information about emotions and interpersonal relationships, two central concepts in suicide-related treatments and theories. The dataset included 177,220 images by 841 Facebook users who completed a gold-standard suicide scale. The images were represented with CLIP, a state-of-the-art algorithm, which was utilized, unconventionally, to extract predefined features that served as inputs to a simple logistic-regression prediction model (in contrast to complex neural networks). The features addressed basic and theory-driven visual elements using everyday language (e.g., bright photo, photo of sad people). The results of the hybrid model (that integrated theory-driven and bottom-up methods) indicated high prediction performance that surpassed common bottom-up algorithms, thus providing a first proof that images (alone) can be leveraged to predict validated suicide risk. Corresponding with the lead hypothesis, at-risk users had images with increased negative emotions and decreased belongingness. The results are discussed in the context of non-verbal warning signs of suicide. Notably, the study illustrates the advantages of hybrid models in such complicated tasks and provides simple and flexible prediction strategies that could be utilized to develop real-life monitoring tools of suicide.
