Amro Alabsi Aljundi

Boosting Graph Embedding on a Single GPU

Oct 19, 2021

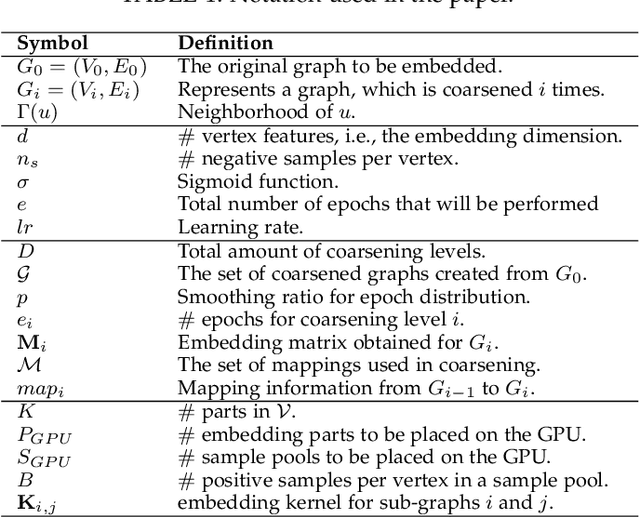

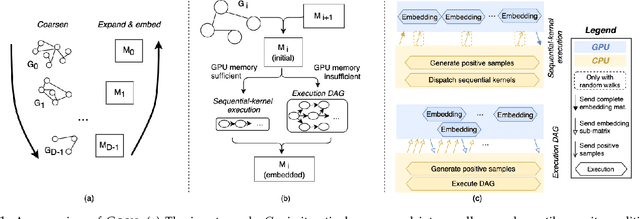

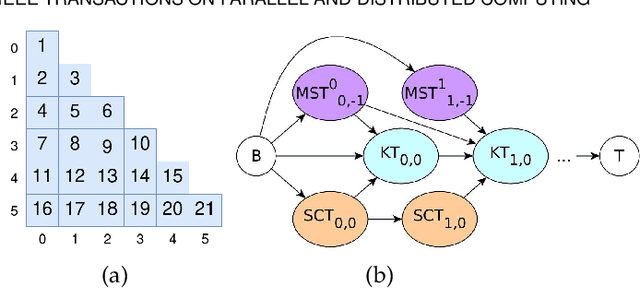

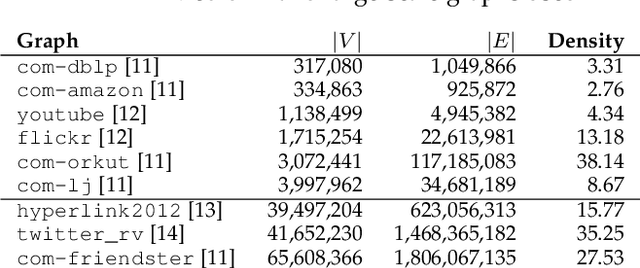

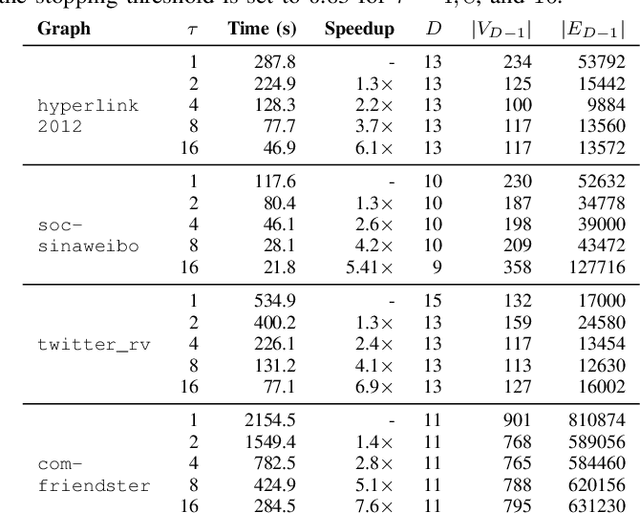

Abstract:Graphs are ubiquitous, and they can model unique characteristics and complex relations of real-life systems. Although using machine learning (ML) on graphs is promising, their raw representation is not suitable for ML algorithms. Graph embedding represents each node of a graph as a d-dimensional vector which is more suitable for ML tasks. However, the embedding process is expensive, and CPU-based tools do not scale to real-world graphs. In this work, we present GOSH, a GPU-based tool for embedding large-scale graphs with minimum hardware constraints. GOSH employs a novel graph coarsening algorithm to enhance the impact of updates and minimize the work for embedding. It also incorporates a decomposition schema that enables any arbitrarily large graph to be embedded with a single GPU. As a result, GOSH sets a new state-of-the-art in link prediction both in accuracy and speed, and delivers high-quality embeddings for node classification at a fraction of the time compared to the state-of-the-art. For instance, it can embed a graph with over 65 million vertices and 1.8 billion edges in less than 30 minutes on a single GPU.

Understanding Coarsening for Embedding Large-Scale Graphs

Sep 10, 2020

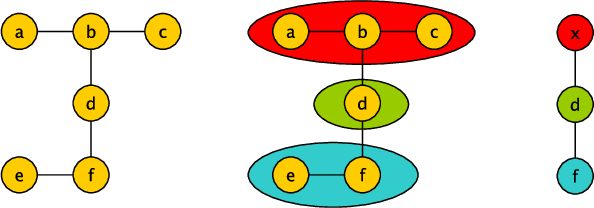

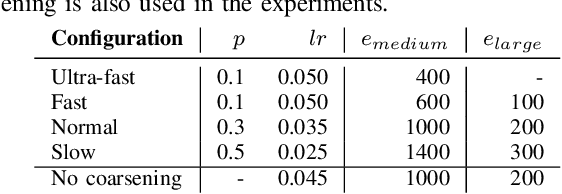

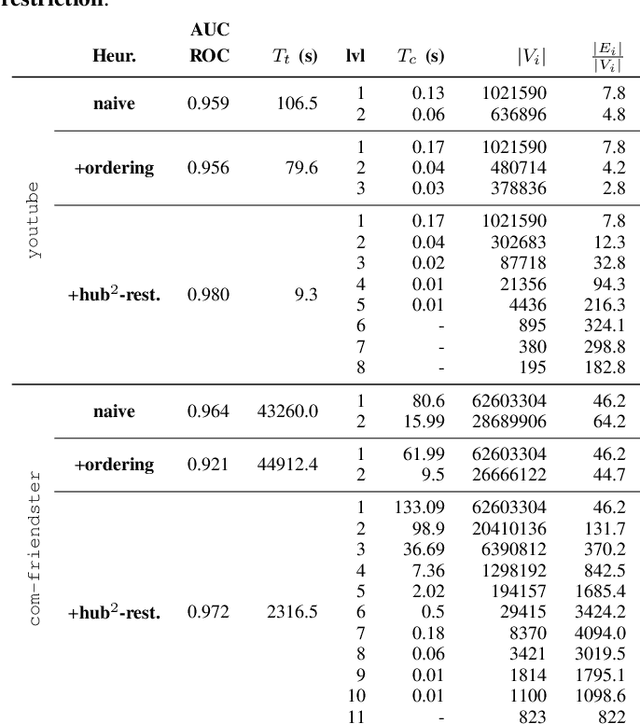

Abstract:A significant portion of the data today, e.g, social networks, web connections, etc., can be modeled by graphs. A proper analysis of graphs with Machine Learning (ML) algorithms has the potential to yield far-reaching insights into many areas of research and industry. However, the irregular structure of graph data constitutes an obstacle for running ML tasks on graphs such as link prediction, node classification, and anomaly detection. Graph embedding is a compute-intensive process of representing graphs as a set of vectors in a d-dimensional space, which in turn makes it amenable to ML tasks. Many approaches have been proposed in the literature to improve the performance of graph embedding, e.g., using distributed algorithms, accelerators, and pre-processing techniques. Graph coarsening, which can be considered a pre-processing step, is a structural approximation of a given, large graph with a smaller one. As the literature suggests, the cost of embedding significantly decreases when coarsening is employed. In this work, we thoroughly analyze the impact of the coarsening quality on the embedding performance both in terms of speed and accuracy. Our experiments with a state-of-the-art, fast graph embedding tool show that there is an interplay between the coarsening decisions taken and the embedding quality.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge