Amir Aly

Exploratory Study: Children's with Autism Awareness of being Imitated by Nao Robot

Mar 07, 2020

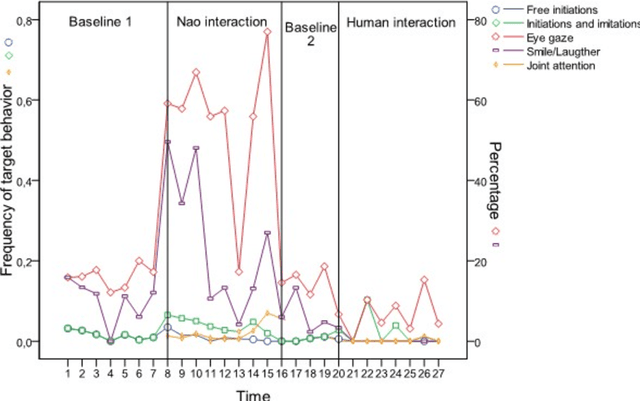

Abstract: This paper presents an exploratory study designed for children with Autism Spectrum Disorders (ASD) that investigates children's awareness of being imitated by a robot in a play/game scenario. The Nao robot imitates all the arm movement behaviors of the child in real-time in dyadic and triadic interactions. Different behavioral criteria (i.e., eye gaze, gaze shifting, initiation and imitation of arm movements, smile/laughter) were analyzed based on the video data of the interaction. The results confirm only parts of the research hypothesis. However, these results are promising for the future directions of this work.

Social Engagement of Children with Autism during Interaction with a Robot

Feb 27, 2020

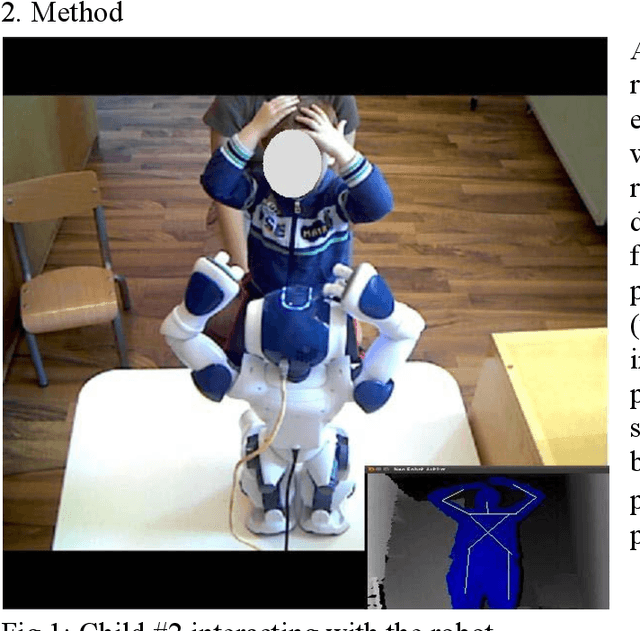

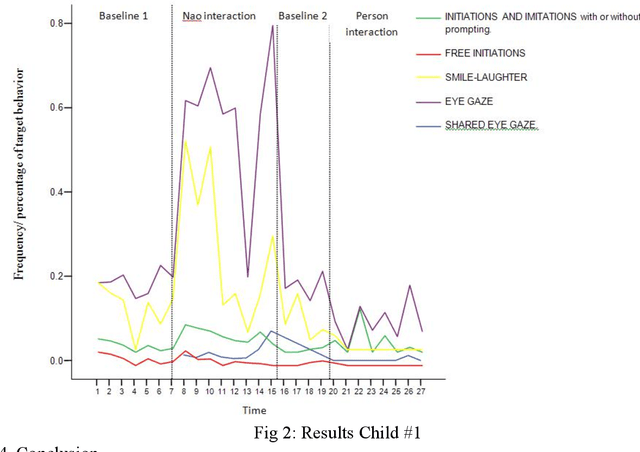

Abstract: Imitation plays an important role in development, being one of the precursors of social cognition. Even though some children with autism imitate spontaneously and other children with autism can learn to imitate, the dynamics of imitation are affected in the large majority of cases. Existing studies from the literature suggest that robots can be used to teach children with autism basic interaction skills like imitation. Based on these findings, in this study, we investigate whether children with autism show more social engagement when interacting with an imitative robot (Fig 1) compared to a human partner in a motor imitation task.

Human Posture Recognition and Gesture Imitation with a Humanoid Robot

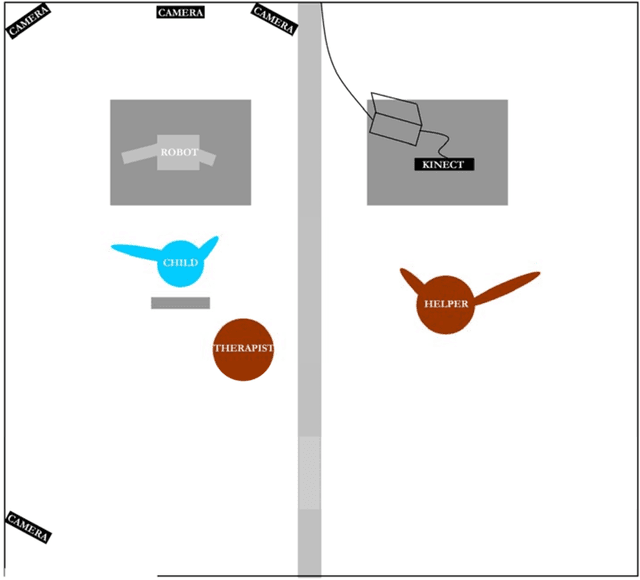

Feb 06, 2020

Abstract: Autism is a highly variable neurodevelopmental disorder characterized by impaired social interaction and communication, and by restricted and repetitive behavior. The problematic point concerning this neurodevelopmental disorder is that its causes remain unknown, and therefore it cannot be treated medically. Recently, robots have been involved in the development of the social behavior of autistic children, who showed better interaction with robots than with their peers. One of the striking social impairments widely described in the autism literature is the deficit in imitating others. Trying to make use of this point, therapists and robotics researchers have been interested in designing triadic interactional (Human-Robot-Child) imitation games, in which the therapist starts to perform a gesture, then the robot imitates it, and then the child tries to do the same, hoping that these games will encourage the autistic child to repeat these new gestures in his daily social life.

Towards Understanding Language through Perception in Situated Human-Robot Interaction: From Word Grounding to Grammar Induction

Dec 12, 2018

Abstract: Robots are widely collaborating with human users in different tasks that require high-level cognitive functions to make them able to discover the surrounding environment. A difficult challenge that we briefly highlight in this short paper is inferring the latent grammatical structure of language, which includes grounding parts of speech (e.g., verbs, nouns, adjectives, and prepositions) through visual perception, and induction of Combinatory Categorial Grammar (CCG) for phrases. This paves the way towards grounding phrases so as to make a robot able to understand human instructions appropriately during interaction.
