Alok Joshi

Machine learning for sports betting: should forecasting models be optimised for accuracy or calibration?

Mar 13, 2023

Abstract: Sports betting's recent federal legalisation in the USA coincides with the golden age of machine learning. If bettors can leverage data to accurately predict the probability of an outcome, they can recognise when the bookmaker's odds are in their favour. As sports betting is a multi-billion dollar industry in the USA alone, identifying such opportunities could be extremely lucrative. Many researchers have applied machine learning to the sports outcome prediction problem, generally using accuracy to evaluate the performance of forecasting models. We hypothesise that for the sports betting problem, model calibration is more important than accuracy. To test this hypothesis, we train models on NBA data over several seasons and run betting experiments on a single season, using published odds. Evaluating various betting systems, we show that optimising the forecasting model for calibration leads to greater returns than optimising for accuracy, on average (return on investment of $110.42\%$ versus $2.98\%$) and in the best case ($902.01\%$ versus $222.84\%$). These findings suggest that for sports betting (or any forecasting problem where decisions are made based on the predicted probability of each outcome), calibration is a more important metric than accuracy. Sports bettors who wish to increase profits should therefore optimise their forecasting model for calibration.
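The distinction between accuracy and calibration can be illustrated with a minimal sketch. It is not the paper's experimental setup: the outcomes, the two toy forecasters, the decimal odds of 1.25, and the choice of the Brier score as a calibration-sensitive metric are all assumptions made for illustration (the abstract does not name the metric used).

```python
# Toy illustration (assumed data): two forecasters with identical accuracy
# but different calibration, and the effect on a betting decision.

def accuracy(probs, outcomes):
    """Fraction of events where the predicted side (p >= 0.5) matches the result."""
    hits = sum((p >= 0.5) == bool(y) for p, y in zip(probs, outcomes))
    return hits / len(probs)

def brier_score(probs, outcomes):
    """Mean squared error between predicted probability and outcome (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)

def expected_value(prob, decimal_odds):
    """Expected profit per unit staked, given a win probability and decimal odds."""
    return prob * decimal_odds - 1.0

# Hypothetical results: the home team wins 7 of 10 games.
outcomes = [1, 1, 1, 0, 1, 1, 1, 0, 1, 0]
overconfident = [0.99] * 10   # always picks home, far too sure
calibrated = [0.70] * 10      # always picks home, matches the observed frequency

# Hypothetical bookmaker price on the home team (implied probability 0.8).
odds = 1.25

acc_over, acc_cal = accuracy(overconfident, outcomes), accuracy(calibrated, outcomes)
brier_over, brier_cal = brier_score(overconfident, outcomes), brier_score(calibrated, outcomes)
ev_over = expected_value(0.99, odds)   # positive, so this model would place the bet
ev_cal = expected_value(0.70, odds)    # negative, so this model would decline

print(acc_over, acc_cal)
print(brier_over, brier_cal)
print(ev_over, ev_cal)
```

Both forecasters pick the same winners, so accuracy cannot separate them, yet only the overconfident one is led into a bet whose expected value is negative under the observed win frequency.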

Genetic Algorithmic Parameter Optimisation of a Recurrent Spiking Neural Network Model

Mar 30, 2020

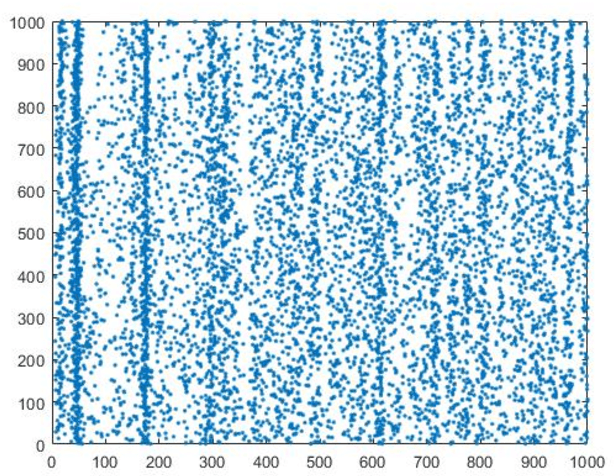

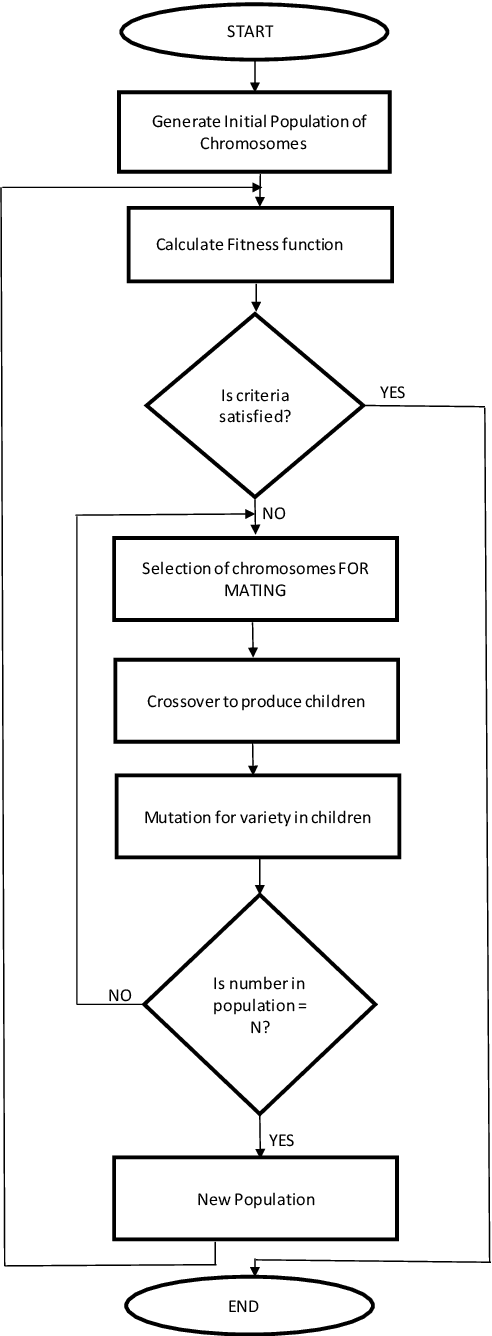

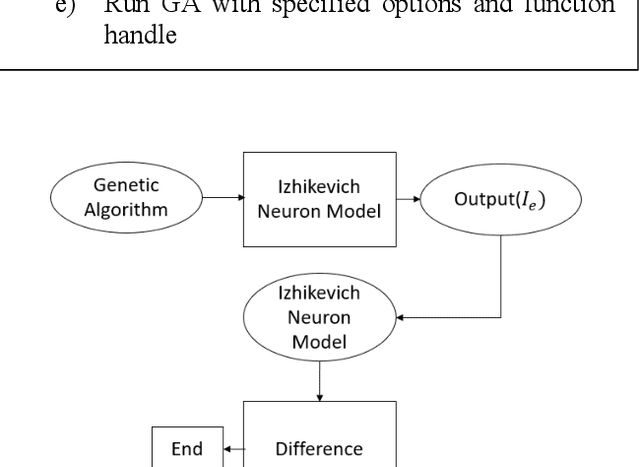

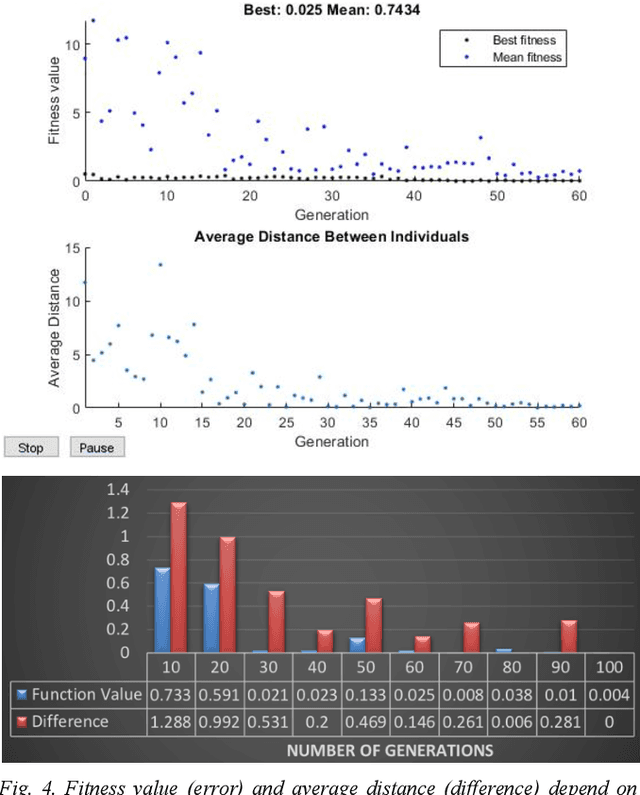

Abstract: Neural networks are complex algorithms that loosely model the behaviour of the human brain. They play a significant role in computational neuroscience and artificial intelligence. The next generation of neural network models is based on the spike timing activity of neurons: spiking neural networks (SNNs). However, model parameters in SNNs are difficult to search and optimise. Previous studies using genetic algorithm (GA) optimisation of SNNs focused mainly on simple, feedforward, or oscillatory networks, and little work has been done on optimising cortex-like recurrent SNNs. In this work, we investigated the use of GAs to search for optimal parameters in recurrent SNNs to reach targeted neuronal population firing rates, e.g. as in experimental observations. We considered a cortical-column-based SNN comprising 1000 Izhikevich spiking neurons, chosen for computational efficiency and biological realism. The model parameters explored were the neuronal bias input currents. First, for this particular SNN, we found the optimal parameter values for the targeted population-averaged firing activities, with the algorithm converging by ~100 generations. We then showed that the optimal GA population size was in the range ~16-20, while the crossover rate that returned the best fitness value was ~0.95. Overall, we have demonstrated the feasibility of using a GA to optimise model parameters in a recurrent cortical-based SNN.
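The search loop described in the abstract can be sketched in a few lines. This is a hedged illustration only: `simulate_rates` is a hypothetical threshold-linear stand-in, not an Izhikevich network simulation, and the target rates, tournament selection, elitism, uniform crossover, and Gaussian mutation are all assumptions. Only the population size (20), crossover rate (0.95), and generation count (100) are taken from the values reported in the abstract.

```python
import random

TARGET_RATES = [5.0, 20.0]  # hypothetical target population firing rates (Hz)

def simulate_rates(currents):
    """Toy stand-in for the SNN (NOT an Izhikevich simulation):
    maps each bias current to a rate with a threshold-linear curve."""
    return [max(0.0, 4.0 * (i - 1.0)) for i in currents]

def fitness(currents):
    """Negative squared error between simulated and target rates (higher is better)."""
    rates = simulate_rates(currents)
    return -sum((r - t) ** 2 for r, t in zip(rates, TARGET_RATES))

def run_ga(pop_size=20, crossover_rate=0.95, mutation_sd=0.2,
           generations=100, seed=0):
    """Evolve bias currents toward the target rates; returns the best individual."""
    rng = random.Random(seed)
    n = len(TARGET_RATES)
    pop = [[rng.uniform(0.0, 10.0) for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(pop, key=fitness, reverse=True)
        next_pop = [ranked[0][:]]  # elitism (assumed): keep the current best unchanged
        while len(next_pop) < pop_size:
            # Tournament selection of size 2 (assumed selection scheme).
            a = max(rng.sample(pop, 2), key=fitness)
            b = max(rng.sample(pop, 2), key=fitness)
            if rng.random() < crossover_rate:
                # Uniform crossover: each gene comes from either parent.
                child = [x if rng.random() < 0.5 else y for x, y in zip(a, b)]
            else:
                child = a[:]
            # Gaussian mutation on every gene (assumed mutation operator).
            child = [g + rng.gauss(0.0, mutation_sd) for g in child]
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=fitness)

best = run_ga()
print(best, simulate_rates(best), fitness(best))
```

In a real study the call to `simulate_rates` would be replaced by a full network simulation, which dominates the run time and is one reason small population sizes are attractive.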
