Allen Huang

FinBERT: A Pretrained Language Model for Financial Communications

Jul 09, 2020

Abstract: Contextual pretrained language models, such as BERT (Devlin et al., 2019), have achieved significant breakthroughs in various NLP tasks by training on large-scale unlabeled text resources. The financial sector also accumulates a large amount of financial communication text. However, no pretrained finance-specific language models are available. In this work, we address this need by pretraining a financial domain-specific BERT model, FinBERT, on large-scale financial communication corpora. Experiments on three financial sentiment classification tasks confirm the advantage of FinBERT over the generic-domain BERT model. The code and pretrained models are available at https://github.com/yya518/FinBERT. We hope this will be useful for practitioners and researchers working on financial NLP tasks.

Constitutional Precedent of Amicus Briefs

Jul 07, 2016

Abstract: We investigate shared language between U.S. Supreme Court majority opinions and interest groups' corresponding amicus briefs. Specifically, we evaluate whether language that originated in an amicus brief acquired legal precedent status by being cited in the Court's opinion. Using plagiarism detection software, automated querying of a large legal database, and manual analysis, we establish seven instances where interest group amici were able to formulate constitutional case law, setting binding legal precedent. We discuss several such instances for their implications in the Supreme Court's creation of case law.

Deep Learning for Music

Jun 15, 2016

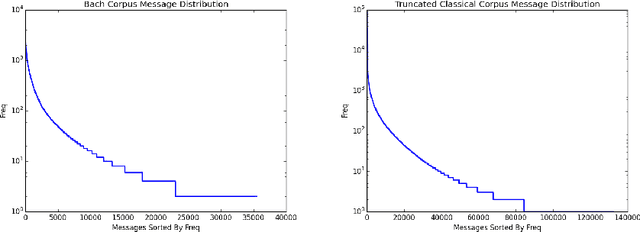

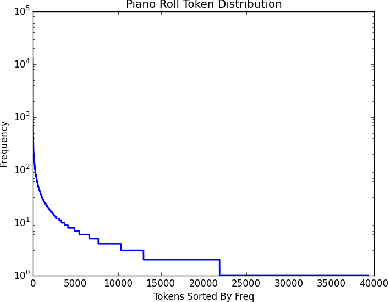

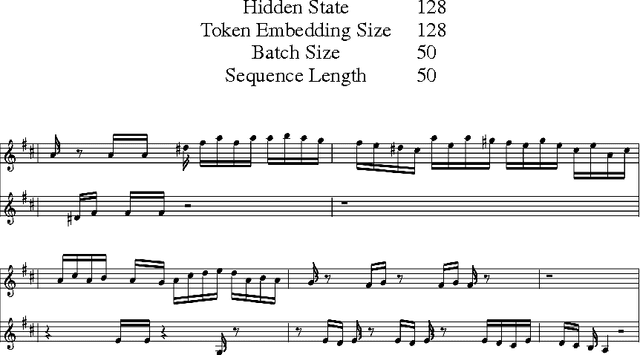

Abstract: Our goal is to build a generative model from a deep neural network architecture that creates music with both harmony and melody and is passable as music composed by humans. Previous work in music generation has mainly focused on creating a single melody. More recent work on polyphonic music modeling, centered around time series probability density estimation, has met with partial success. In particular, there has been a lot of work based on Recurrent Neural Networks combined with Restricted Boltzmann Machines (RNN-RBM) and other similar recurrent energy-based models. Our approach, however, is to perform end-to-end learning and generation with deep neural nets alone.
