Ali Foroughi pour

On the Consistency of Optimal Bayesian Feature Selection in the Presence of Correlations

Feb 01, 2020

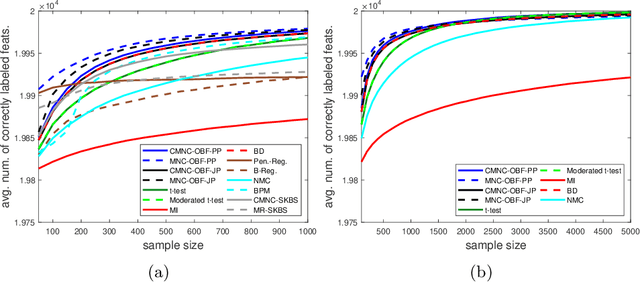

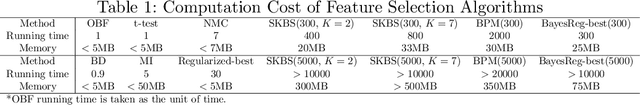

Abstract: Optimal Bayesian feature selection (OBFS) is a multivariate supervised screening method designed from the ground up for biomarker discovery. In this work, we prove that Gaussian OBFS is strongly consistent under mild conditions, and provide rates of convergence for key posteriors in the framework. These results are of enormous importance, since they identify precisely what features are selected by OBFS asymptotically, characterize the relative rates of convergence for posteriors on different types of features, provide conditions that guarantee convergence, justify the use of OBFS when its internal assumptions are invalid, and set the stage for understanding the asymptotic behavior of other algorithms based on the OBFS framework.

Theory of Optimal Bayesian Feature Filtering

Sep 09, 2019

Abstract: Optimal Bayesian feature filtering (OBF) is a supervised screening method designed for biomarker discovery. In this article, we prove two major theoretical properties of OBF. First, optimal Bayesian feature selection under a general family of Bayesian models reduces to filtering if and only if the underlying Bayesian model assumes all features are mutually independent. Therefore, OBF is optimal if and only if one assumes all features are mutually independent, and OBF is the only filter method that is optimal under at least one model in the general Bayesian framework. Second, OBF under independent Gaussian models is consistent under very mild conditions, including cases where the data is non-Gaussian with correlated features. This result provides conditions where OBF is guaranteed to identify the correct feature set given enough data, and it justifies the use of OBF in non-design settings where its assumptions are invalid.
