Alhasan Abdellatif

A Deep-Learning Iterative Stacked Approach for Prediction of Reactive Dissolution in Porous Media

Mar 11, 2025

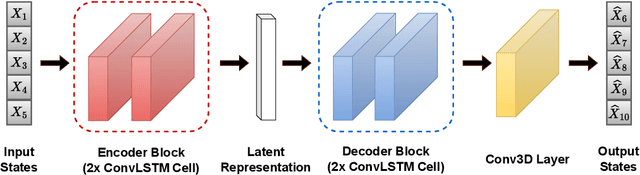

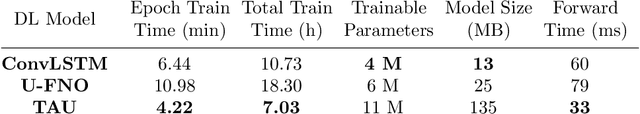

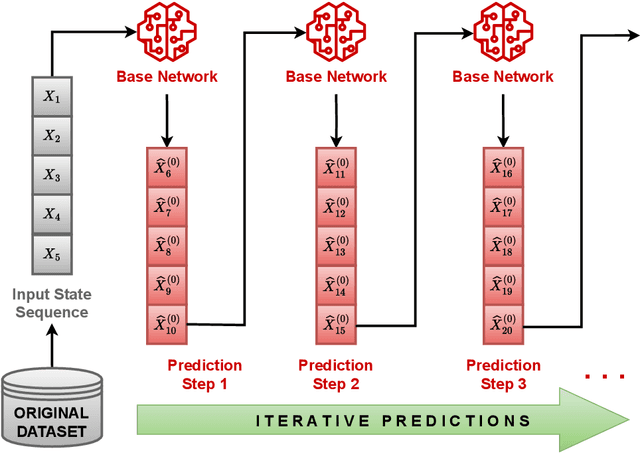

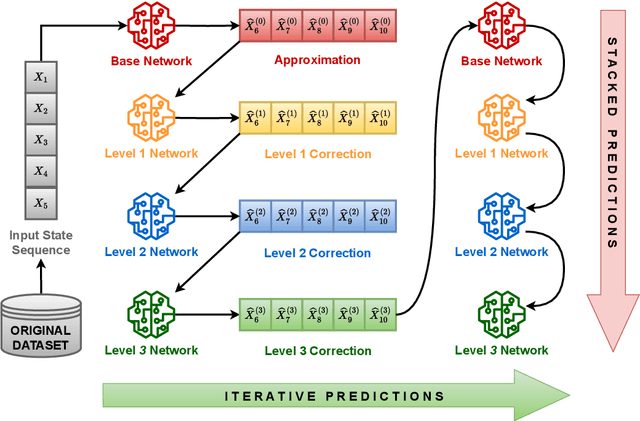

Abstract: Simulating reactive dissolution of solid minerals in porous media has many subsurface applications, including carbon capture and storage (CCS), geothermal systems, and oil and gas recovery. Because traditional direct numerical simulators are computationally expensive, it is of paramount importance to develop faster and more efficient alternatives. Deep-learning-based solutions, most of them built on convolutional neural networks (CNNs), have recently been designed to tackle this problem. However, these solutions were limited to approximating a single field over the domain (e.g., the velocity field). In this manuscript, we present a novel deep-learning approach that incorporates both temporal and spatial information to predict the future states of the dissolution process at a fixed time-step horizon, given a sequence of input states. Its overall performance, in terms of speed and prediction accuracy, is demonstrated on a numerical simulation dataset and compared against state-of-the-art approaches; the approach also achieves a speedup of around $10^4$ over traditional numerical simulators.
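The iterative idea in the title, feeding predicted states back in as inputs, can be sketched as an autoregressive rollout over a sliding window of the most recent states. This is a minimal sketch under stated assumptions, not the authors' implementation: `rollout` and `predict_next` are hypothetical names, the window length is taken from the length of the initial sequence, and the toy linear-extrapolation "model" only stands in for a trained network.

```python
from collections import deque

import numpy as np


def rollout(initial_states, predict_next, n_steps):
    """Autoregressive rollout over a sliding window of past states.

    initial_states: array of shape (k, H, W) holding the k most recent states.
    predict_next: hypothetical model mapping a (k, H, W) window to the next (H, W) state.
    """
    k = len(initial_states)
    window = deque(initial_states, maxlen=k)  # the oldest state drops out automatically
    predictions = []
    for _ in range(n_steps):
        next_state = predict_next(np.stack(list(window)))
        predictions.append(next_state)
        window.append(next_state)  # the prediction becomes an input for the next step
    return np.stack(predictions)


def linear_extrapolation(window):
    """Toy stand-in for a trained network: extrapolates from the last two states."""
    return 2.0 * window[-1] - window[-2]


# Three uniform 4x4 "states" with values 0, 1, 2.
init = np.stack([np.full((4, 4), float(t)) for t in range(3)])
preds = rollout(init, linear_extrapolation, n_steps=5)
print(preds.shape)     # (5, 4, 4)
print(preds[:, 0, 0])  # [3. 4. 5. 6. 7.]
```

Because each prediction is reused as input, any error made by `predict_next` propagates into later steps of the rollout.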

Generating Infinite-Resolution Texture using GANs with Patch-by-Patch Paradigm

Sep 05, 2023

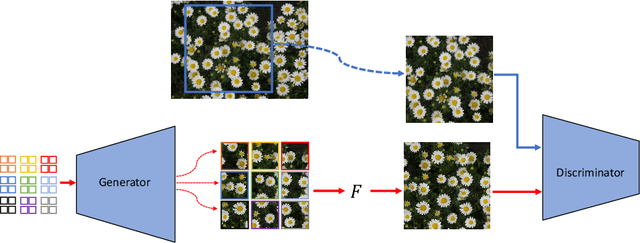

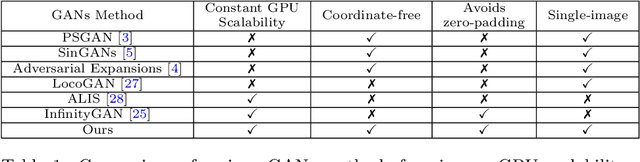

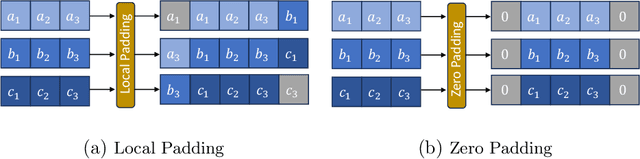

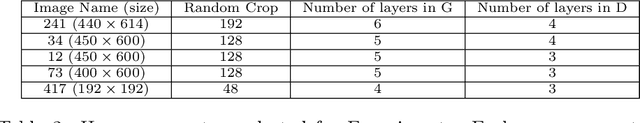

Abstract: In this paper, we introduce a novel approach for generating texture images of infinite resolution using Generative Adversarial Networks (GANs) based on a patch-by-patch paradigm. Existing texture synthesis techniques often generate a large-scale texture in a single forward pass of the generative model, which limits the scalability and flexibility of the generated images. In contrast, the proposed approach trains GAN models on a single texture image to generate relatively small, locally correlated patches that can be seamlessly concatenated to form a larger image while using a constant GPU memory footprint. Our method learns the local texture structure and can generate textures of arbitrary size while maintaining coherence and diversity. The proposed method relies on local padding in the generator to ensure consistency between patches and uses spatial stochastic modulation to allow for local variations and diversity within the large-scale image. Experimental results demonstrate superior scalability compared to existing approaches while maintaining the visual coherence of the generated textures.
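The patch-by-patch assembly can be illustrated with a loop that requests one fixed-size patch at a time and writes it into an output canvas, so each generator call has the same memory cost regardless of the final image size. This sketch assumes a hypothetical `generate_patch(i, j)` callable and does not implement the paper's local padding or spatial stochastic modulation. The toy patch function is evaluated on global pixel coordinates, which is one simple way to make neighboring patches agree at their seams.

```python
import numpy as np


def assemble_patches(generate_patch, n_rows, n_cols, patch_size):
    """Build an (n_rows*patch_size, n_cols*patch_size) image one patch at a time.

    generate_patch(i, j): hypothetical generator call returning a
    (patch_size, patch_size) array for grid position (i, j).
    """
    out = np.empty((n_rows * patch_size, n_cols * patch_size), dtype=np.float32)
    for i in range(n_rows):
        for j in range(n_cols):
            rows = slice(i * patch_size, (i + 1) * patch_size)
            cols = slice(j * patch_size, (j + 1) * patch_size)
            out[rows, cols] = generate_patch(i, j)  # only one patch is produced per call
    return out


P = 8  # patch size in pixels (illustrative value)


def toy_patch(i, j):
    """Toy 'generator': a smooth pattern evaluated on global pixel coordinates."""
    y, x = np.mgrid[i * P:(i + 1) * P, j * P:(j + 1) * P]
    return np.sin(0.3 * x) * np.cos(0.2 * y)


texture = assemble_patches(toy_patch, n_rows=3, n_cols=4, patch_size=P)
print(texture.shape)  # (24, 32)
```

The canvas in this sketch still grows with the image size; for an unbounded texture, finished patches would be streamed to disk or a display rather than kept in one array.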

Generation of non-stationary stochastic fields using Generative Adversarial Networks with limited training data

May 11, 2022

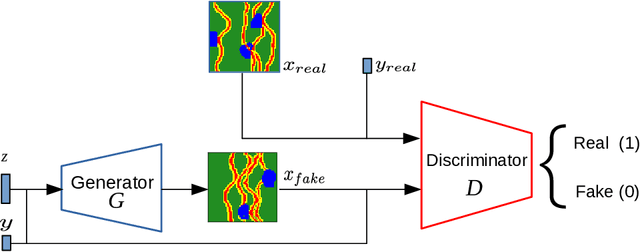

Abstract: In the context of generating geological facies conditioned on observed data, samples corresponding to all possible conditions are generally not available in the training set, so the generation of these realizations depends primarily on the generalization capability of the trained generative model. The problem becomes more complex for non-stationary fields. In this work, we investigate the training of Generative Adversarial Network (GAN) models on a dataset of geological channelized patterns that has a few non-stationary spatial modes, and we examine the training and self-conditioning settings that improve generalization to new spatial modes never seen in the training set. The developed training method allowed the correlation between the spatial conditions (i.e., non-stationary maps) and the realizations to be learned implicitly, without additional loss terms and without solving a costly optimization problem at the realization-generation phase. Our models, trained on real and artificial datasets, were able to generate geologically plausible realizations beyond the training samples with a strong correlation with the target maps.
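One way to quantify the correlation between a realization and its target map is to coarsen a binary channel/background image into a local proportion map and compute the Pearson correlation with the target non-stationary map. The sketch below assumes facies coded 0/1, a target map defined on non-overlapping blocks, and a Bernoulli sample as a stand-in for a GAN realization; the function names and block size are hypothetical, and the paper's own evaluation may differ.

```python
import numpy as np


def local_proportion_map(facies, block):
    """Fraction of facies 1 in each non-overlapping block x block window."""
    h, w = facies.shape
    assert h % block == 0 and w % block == 0, "image must tile evenly into blocks"
    return facies.reshape(h // block, block, w // block, block).mean(axis=(1, 3))


def map_correlation(a, b):
    """Pearson correlation between two maps of equal shape."""
    return np.corrcoef(a.ravel(), b.ravel())[0, 1]


rng = np.random.default_rng(0)
target = np.tile(np.linspace(0.1, 0.9, 8), (8, 1))   # 8x8 map with a left-to-right trend
prob = np.kron(target, np.ones((16, 16)))            # upsample to 128x128 pixel probabilities
realization = (rng.random(prob.shape) < prob).astype(float)  # stand-in for a generated sample

estimated = local_proportion_map(realization, block=16)
r = map_correlation(estimated, target)  # close to 1 for a sample that follows the map
```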

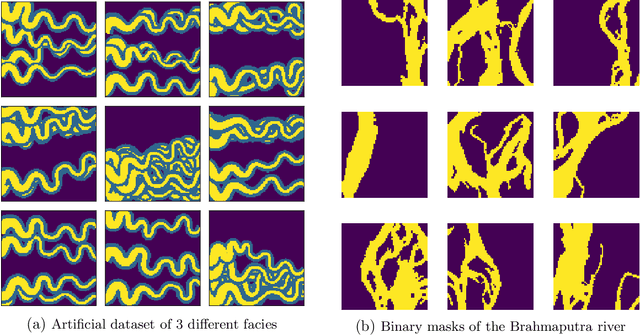

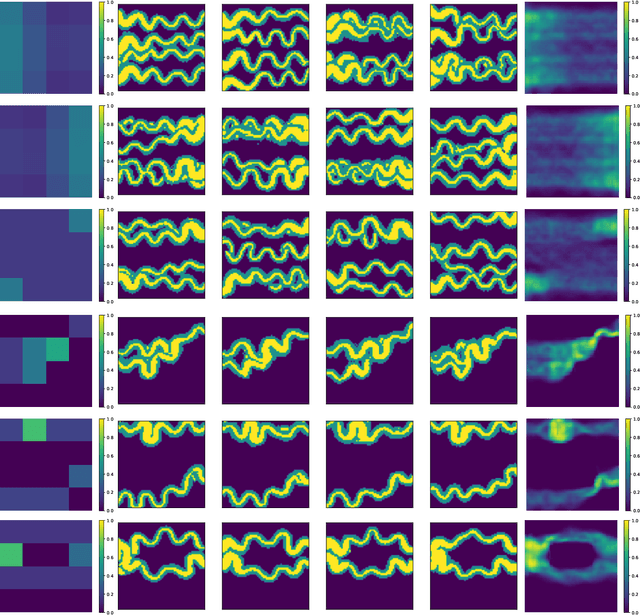

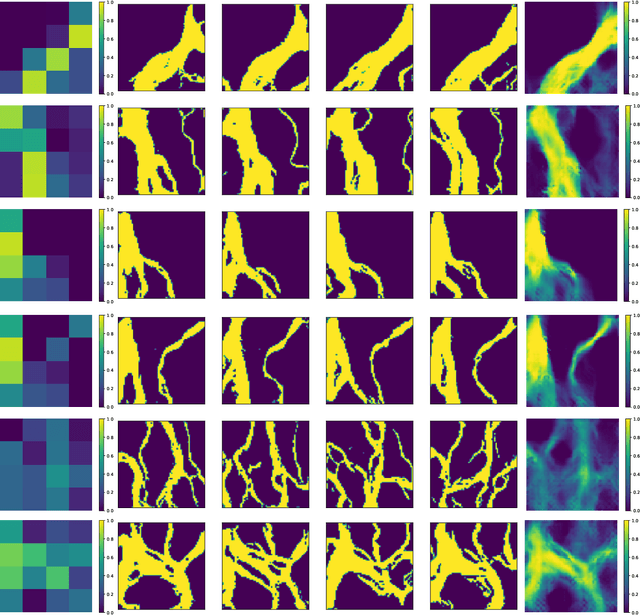

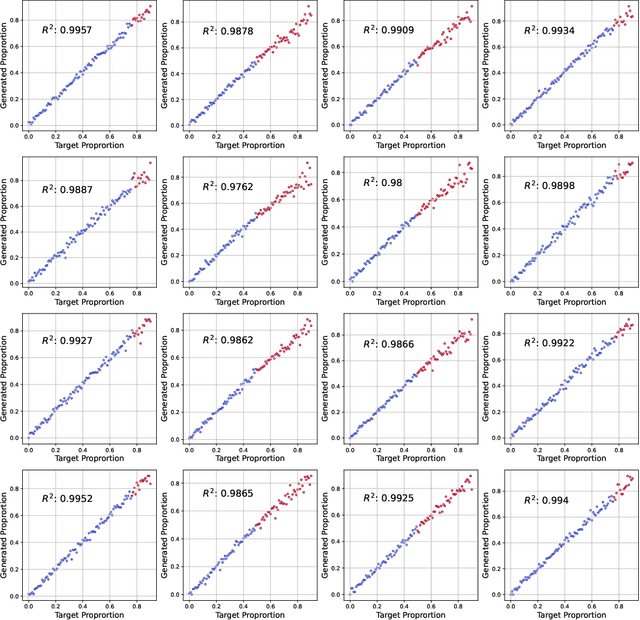

Generating unrepresented proportions of geological facies using Generative Adversarial Networks

Mar 17, 2022

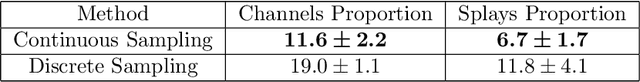

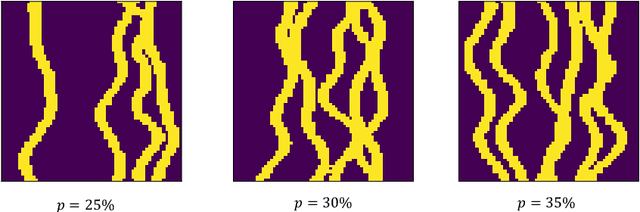

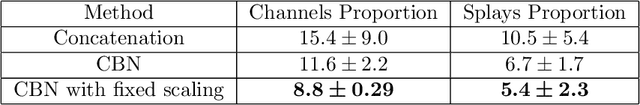

Abstract: In this work, we investigate the capacity of Generative Adversarial Networks (GANs) to interpolate and extrapolate facies proportions in a geological dataset. The newly generated realizations with unrepresented (i.e., missing) proportions are assumed to belong to the same distribution as the original data. Specifically, we design a conditional GAN model that can drive the generated facies toward new proportions not found in the training set. The study includes an investigation of various training settings and model architectures. In addition, we devised new conditioning routines for improved generation of the missing samples. The numerical experiments on images of binary and multiple facies showed good geological consistency as well as a strong correlation with the target conditions.
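A conditional GAN needs the target proportions in a form the networks can consume. A common choice, assumed here and not necessarily the conditioning routine devised in the paper, is to compute the per-facies proportion vector of an integer-labelled image and broadcast it into constant feature maps that can be concatenated with the generator input. `facies_proportions` and `condition_channels` are hypothetical helpers.

```python
import numpy as np


def facies_proportions(image, n_facies):
    """Fraction of pixels belonging to each facies code 0..n_facies-1."""
    counts = np.bincount(image.ravel(), minlength=n_facies)
    return counts / image.size


def condition_channels(proportions, height, width):
    """Broadcast a proportion vector to constant maps of shape (n_facies, height, width)."""
    proportions = np.asarray(proportions, dtype=float)
    return np.broadcast_to(proportions[:, None, None],
                           (proportions.size, height, width)).copy()


# A tiny 3-facies image: 3 pixels of facies 0, 3 of facies 1, 2 of facies 2.
img = np.array([[0, 0, 1, 2],
                [0, 1, 1, 2]])
p = facies_proportions(img, n_facies=3)  # 0.375, 0.375, 0.25

# Requesting a proportion vector that may be absent from the training set.
cond = condition_channels([0.2, 0.5, 0.3], height=64, width=64)
print(cond.shape)  # (3, 64, 64)
```

In a setup like this, the same channels are filled at generation time with an unrepresented proportion vector, which is how the model would be queried for interpolated or extrapolated proportions.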
