Alfredo Pulvirenti

MPXGAT: An Attention based Deep Learning Model for Multiplex Graphs Embedding

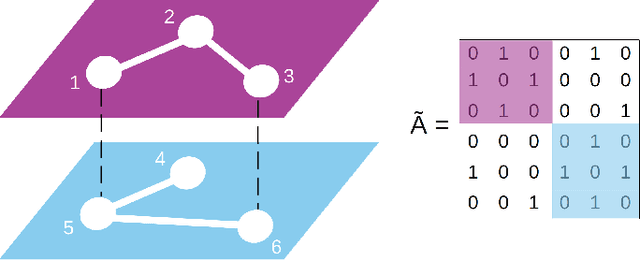

Mar 28, 2024

Abstract: Graph representation learning has rapidly emerged as a pivotal field of study. Despite its growing popularity, the majority of research has been confined to embedding single-layer graphs, which fall short in representing complex systems with multifaceted relationships. To bridge this gap, we introduce MPXGAT, an innovative attention-based deep learning model tailored to multiplex graph embedding. Leveraging the robustness of Graph Attention Networks (GATs), MPXGAT captures the structure of multiplex networks by harnessing both intra-layer and inter-layer connections. This exploitation facilitates accurate link prediction within and across the network's multiple layers. Our comprehensive experimental evaluation, conducted on various benchmark datasets, confirms that MPXGAT consistently outperforms state-of-the-art competing algorithms.
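To make the distinction between intra-layer and inter-layer connections concrete, here is a minimal sketch of a multiplex graph container. It is not taken from MPXGAT: the class name `MultiplexGraph`, the `(layer, node_id)` node convention, and the toy edges are all assumptions chosen for illustration.

```python
from collections import defaultdict


class MultiplexGraph:
    """Toy multiplex graph (hypothetical helper, not the MPXGAT code).

    Nodes are (layer, node_id) tuples, so the layer of each endpoint
    tells us whether an edge is intra-layer or inter-layer.
    """

    def __init__(self):
        # Adjacency for edges whose endpoints share a layer.
        self.intra = defaultdict(set)
        # Adjacency for edges whose endpoints lie in different layers.
        self.inter = defaultdict(set)

    def add_edge(self, u, v):
        # Pick the adjacency map from the layers of the two endpoints.
        adj = self.intra if u[0] == v[0] else self.inter
        adj[u].add(v)
        adj[v].add(u)

    def neighbors(self, u):
        # Return (intra-layer neighbours, inter-layer neighbours), sorted.
        return sorted(self.intra.get(u, ())), sorted(self.inter.get(u, ()))


g = MultiplexGraph()
g.add_edge(("A", 1), ("A", 2))  # intra-layer edge inside layer A
g.add_edge(("B", 7), ("B", 8))  # intra-layer edge inside layer B
g.add_edge(("A", 1), ("B", 7))  # inter-layer edge between layers A and B

intra_nbrs, inter_nbrs = g.neighbors(("A", 1))
```

Keeping the two adjacency maps separate means a model can treat the two edge types differently, for example by predicting missing links of each type as a separate task.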

MultiSAGE: a multiplex embedding algorithm for inter-layer link prediction

Jun 24, 2022

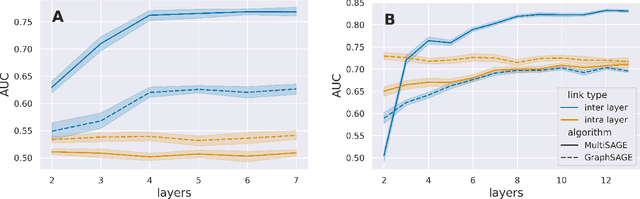

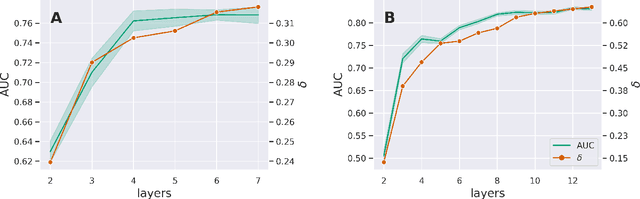

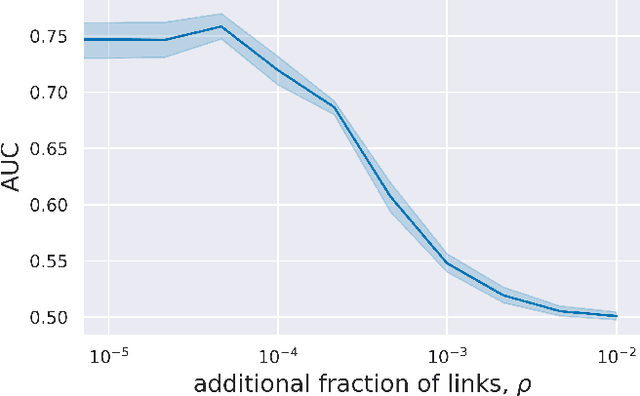

Abstract: Research on graph representation learning has received great attention in recent years. However, most of the studies so far have focused on the embedding of single-layer graphs. The few studies dealing with the problem of representation learning of multilayer structures rely on the strong hypothesis that the inter-layer links are known, and this limits the range of possible applications. Here we propose MultiSAGE, a generalization of the GraphSAGE algorithm that allows multiplex networks to be embedded. We show that MultiSAGE is capable of reconstructing both the intra-layer and the inter-layer connectivity, outperforming GraphSAGE, which was designed for simple graphs. Next, through a comprehensive experimental analysis, we also shed light on the performance of the embedding, both in simple and in multiplex networks, showing that either the density of the graph or the randomness of the links strongly influences the quality of the embedding.
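For readers unfamiliar with the GraphSAGE idea that MultiSAGE builds on, the following is a rough sketch of a single mean-aggregation step followed by a dot-product link score. It is a simplification under stated assumptions, not the MultiSAGE algorithm: the learned weight matrix and nonlinearity of GraphSAGE are omitted, and the function names `mean_aggregate` and `link_score`, as well as the toy features, are hypothetical.

```python
def mean_aggregate(features, adjacency, node):
    """One GraphSAGE-style mean step (weights and nonlinearity omitted).

    Returns the node's own features concatenated with the mean of its
    neighbours' features.
    """
    nbrs = adjacency.get(node, [])
    dim = len(features[node])
    if nbrs:
        mean = [sum(features[n][k] for n in nbrs) / len(nbrs) for k in range(dim)]
    else:
        # Isolated node: fall back to a zero neighbourhood vector.
        mean = [0.0] * dim
    return features[node] + mean


def link_score(emb_u, emb_v):
    # Dot product of two embeddings; a higher value suggests a likelier link.
    return sum(a * b for a, b in zip(emb_u, emb_v))


# Toy single-layer graph with 2-dimensional input features (illustrative only).
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
adjacency = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}

emb_a = mean_aggregate(features, adjacency, "a")
emb_b = mean_aggregate(features, adjacency, "b")
score_ab = link_score(emb_a, emb_b)
```

In a multiplex setting, one plausible extension is to aggregate over intra-layer and inter-layer neighbours separately before combining them; whether MultiSAGE does exactly this is not stated in the abstract.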
