Alexis Kirke

Artificially Synthesising Data for Audio Classification and Segmentation to Improve Speech and Music Detection in Radio Broadcast

Feb 19, 2021

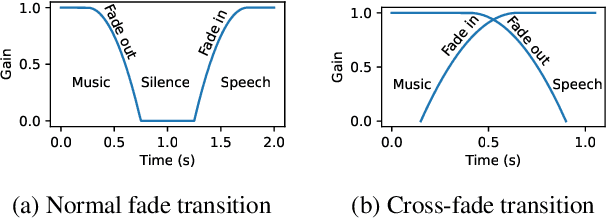

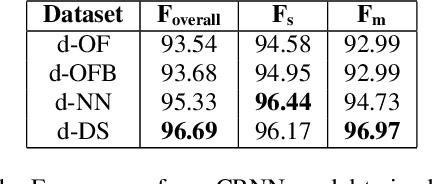

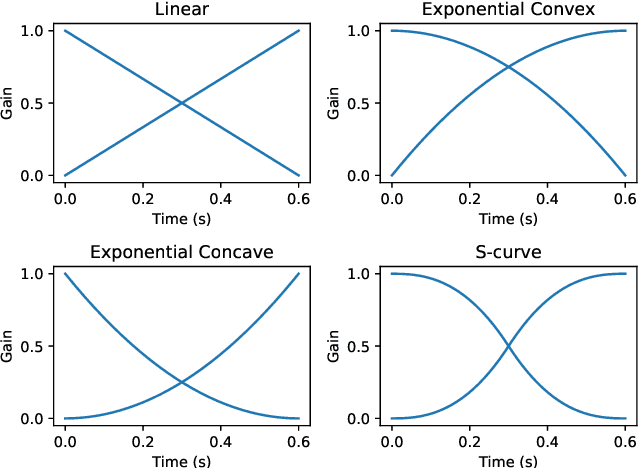

Abstract: Segmenting audio into homogeneous sections such as music and speech helps us understand the content of audio. It is useful as a pre-processing step to index, store, and modify audio recordings, radio broadcasts and TV programmes. Deep learning models for segmentation are generally trained on copyrighted material, which cannot be shared. Annotating these datasets is time-consuming and expensive, which significantly slows down research progress. In this study, we present a novel procedure that artificially synthesises data resembling radio signals. We replicate the workflow of a radio DJ mixing audio and investigate parameters such as fade curves and audio ducking. A Convolutional Recurrent Neural Network (CRNN) trained on this synthesised data outperformed state-of-the-art algorithms for music-speech detection. This paper demonstrates that the data synthesis procedure is a highly effective technique for generating large datasets to train deep neural networks for audio segmentation.
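The abstract names fade curves and audio ducking as the mixing parameters but gives no formulas or values. The sketch below illustrates the idea under stated assumptions. The curve shapes (linear, quadratic, equal-power), the ducked level `duck_gain`, the ramp length `fade_len`, and the helper names `fade` and `duck_and_mix` are all hypothetical and are not the authors' procedure. It overlays a speech clip on a music bed, lowers the music while speech plays, and emits per-sample music/speech labels. Such labels are the ground truth a synthesised dataset provides without manual annotation.

```python
import math
from typing import List, Tuple


def fade(p: float, curve: str = "linear") -> float:
    """Fade shape for progress p in [0, 1]; returns 0 at p=0 and 1 at p=1."""
    p = min(max(p, 0.0), 1.0)
    if curve == "linear":
        return p
    if curve == "quadratic":  # hypothetical slow-start curve
        return p * p
    if curve == "equal_power":  # quarter-sine curve
        return math.sin(p * math.pi / 2)
    raise ValueError(f"unknown curve: {curve}")


def duck_and_mix(music: List[float], speech: List[float], start: int,
                 duck_gain: float = 0.25, fade_len: int = 4,
                 curve: str = "linear") -> Tuple[List[float], List[Tuple[int, int]]]:
    """Overlay speech on a music bed from sample `start`, ducking the music.

    Returns the mixed signal and per-sample (music_present, speech_present) labels.
    """
    end = start + len(speech)
    if start < 0 or end > len(music) or fade_len < 1:
        raise ValueError("speech must fit inside the music bed and fade_len >= 1")
    mixed, labels = [], []
    for i, m in enumerate(music):
        talking = start <= i < end
        if talking:
            gain = duck_gain  # music held at the ducked level under speech
        elif start - fade_len <= i < start:
            p = (i - (start - fade_len) + 1) / fade_len  # fade down before speech
            gain = 1.0 - (1.0 - duck_gain) * fade(p, curve)
        elif end <= i < end + fade_len:
            p = (i - end + 1) / fade_len  # fade back up after speech
            gain = duck_gain + (1.0 - duck_gain) * fade(p, curve)
        else:
            gain = 1.0
        s = speech[i - start] if talking else 0.0
        mixed.append(m * gain + s)
        labels.append((1, 1 if talking else 0))  # music stays audible, only ducked
    return mixed, labels
```

For example, `duck_and_mix([1.0] * 12, [0.5] * 3, start=5, fade_len=2)` ramps the music down over two samples before the speech and back up over two samples after it. A fuller generator would work on real audio buffers and might randomise the curve, ducking level and ramp length per example.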

Application of Grover's Algorithm on the ibmqx4 Quantum Computer to Rule-based Algorithmic Music Composition

Feb 02, 2019

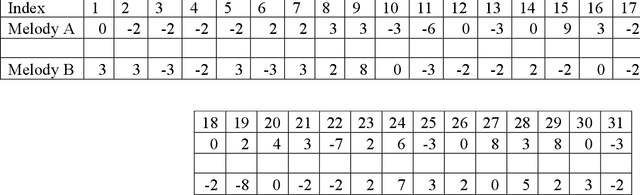

Abstract: Previous research on quantum computing/mechanics and the arts has usually been done in simulation. The small amount of work done in hardware or with actual physical systems has not utilized any of the advantages of quantum computation, chief among them the potential speed increase of quantum algorithms. This paper introduces a way of utilizing Grover's algorithm, which has been shown to provide a quadratic speed-up over its classical equivalent, in algorithmic rule-based music composition. The system introduced, qgMuse, is simple but scalable. It lays some groundwork for new ways of addressing a significant problem in computer music research: unstructured random search for desired music features. Example melodies are composed with qgMuse on the ibmqx4 quantum hardware, and the paper concludes with a discussion of how such an approach can grow as quantum computer hardware and software improve.
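The abstract does not describe qgMuse's circuit, note encoding or composition rules. As an illustration only, the sketch below classically simulates Grover's algorithm (a statevector loop, not a run on ibmqx4) searching a tiny melody space. The 4-qubit encoding of two notes drawn from a hypothetical pitch set `PITCHES`, the rule `starts_on_c_ends_on_g`, and the helper names are assumptions. Each Grover iteration applies a phase-flip oracle for states satisfying the rule, then the diffusion step (inversion about the mean), repeated about (π/4)·√(N/M) times for N candidates and M matches.

```python
import math
from typing import Callable, List


def grover_probabilities(n_qubits: int, is_marked: Callable[[int], bool]) -> List[float]:
    """Classically simulate Grover search over 2**n_qubits basis states.

    Returns the measurement probability of each basis state after the
    standard number of Grover iterations.
    """
    n_states = 2 ** n_qubits
    n_marked = sum(1 for x in range(n_states) if is_marked(x))
    if n_marked == 0:
        raise ValueError("the oracle marks no states")
    # Hadamard on every qubit: uniform superposition of all candidates.
    amps = [1.0 / math.sqrt(n_states)] * n_states
    # About (pi/4) * sqrt(N/M) iterations maximise the marked-state probability.
    iterations = math.floor(math.pi / 4 * math.sqrt(n_states / n_marked))
    for _ in range(iterations):
        # Oracle: flip the phase of every state that satisfies the rule.
        amps = [-a if is_marked(x) else a for x, a in enumerate(amps)]
        # Diffusion operator: inversion about the mean amplitude.
        mean = sum(amps) / n_states
        amps = [2.0 * mean - a for a in amps]
    return [a * a for a in amps]


# Hypothetical encoding: 4 qubits = two notes, 2 bits each, from a 4-pitch set.
PITCHES = ["C", "D", "E", "G"]


def decode(x: int) -> tuple:
    return (PITCHES[x >> 2], PITCHES[x & 0b11])


def starts_on_c_ends_on_g(x: int) -> bool:
    """Hypothetical composition rule standing in for the paper's rules."""
    return decode(x) == ("C", "G")


if __name__ == "__main__":
    probs = grover_probabilities(4, starts_on_c_ends_on_g)
    best = max(range(len(probs)), key=probs.__getitem__)
    print(decode(best), round(probs[best], 3))  # prints ('C', 'G') 0.961
```

With 16 candidates and one match, three iterations raise the matching melody's probability from 1/16 to about 0.96, whereas a classical search would inspect candidates one by one. This quadratic scaling is the advantage the abstract refers to.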
