Alex Monràs

Inductive supervised quantum learning

May 13, 2017

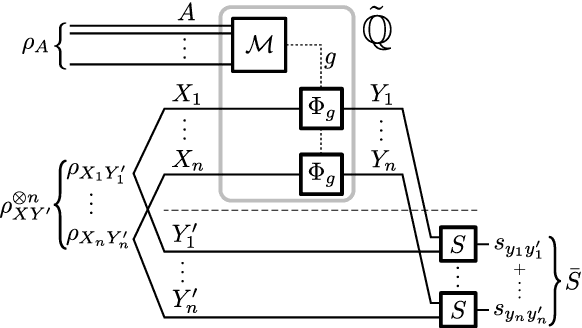

Abstract: In supervised learning, an inductive learning algorithm extracts general rules from observed training instances and then applies those rules to test instances. We show that this split between training and application arises naturally, in the classical setting, from a simple independence requirement that has a physical interpretation as non-signalling. Thus, two seemingly different definitions of inductive learning coincide. This equivalence follows from properties of classical information that break down in the quantum setting. We prove a quantum de Finetti theorem for quantum channels, which shows that in the quantum case the equivalence holds asymptotically, that is, for a large number of test instances. This reveals a natural analogy between classical learning protocols and their quantum counterparts, justifies treating them similarly, and makes it possible to ask about standard elements of computational learning theory, such as structural risk minimization and sample complexity.

* 6+10 pages
