Alex Huang

THETA: Triangulated Hand-State Estimation for Teleoperation and Automation in Robotic Hand Control

Jan 12, 2026Abstract:The teleoperation of robotic hands is limited by the high costs of depth cameras and sensor gloves, commonly used to estimate hand relative joint positions (XYZ). We present a novel, cost-effective approach using three webcams for triangulation-based tracking to approximate relative joint angles (theta) of human fingers. We also introduce a modified DexHand, a low-cost robotic hand from TheRobotStudio, to demonstrate THETA's real-time application. Data collection involved 40 distinct hand gestures using three 640x480p webcams arranged at 120-degree intervals, generating over 48,000 RGB images. Joint angles were manually determined by measuring midpoints of the MCP, PIP, and DIP finger joints. Captured RGB frames were processed using a DeepLabV3 segmentation model with a ResNet-50 backbone for multi-scale hand segmentation. The segmented images were then HSV-filtered and fed into THETA's architecture, consisting of a MobileNetV2-based CNN classifier optimized for hierarchical spatial feature extraction and a 9-channel input tensor encoding multi-perspective hand representations. The classification model maps segmented hand views into discrete joint angles, achieving 97.18% accuracy, 98.72% recall, F1 Score of 0.9274, and a precision of 0.8906. In real-time inference, THETA captures simultaneous frames, segments hand regions, filters them, and compiles a 9-channel tensor for classification. Joint-angle predictions are relayed via serial to an Arduino, enabling the DexHand to replicate hand movements. Future research will increase dataset diversity, integrate wrist tracking, and apply computer vision techniques such as OpenAI-Vision. THETA potentially ensures cost-effective, user-friendly teleoperation for medical, linguistic, and manufacturing applications.

On Visual Hallmarks of Robustness to Adversarial Malware

May 09, 2018

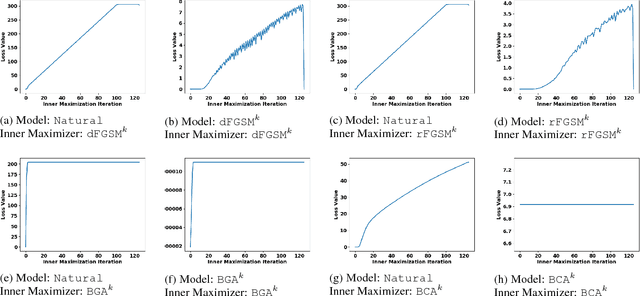

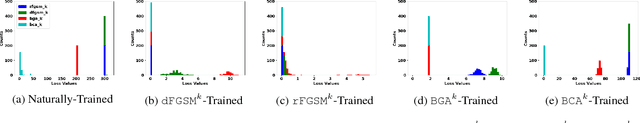

Abstract:A central challenge of adversarial learning is to interpret the resulting hardened model. In this contribution, we ask how robust generalization can be visually discerned and whether a concise view of the interactions between a hardened decision map and input samples is possible. We first provide a means of visually comparing a hardened model's loss behavior with respect to the adversarial variants generated during training versus loss behavior with respect to adversarial variants generated from other sources. This allows us to confirm that the association of observed flatness of a loss landscape with generalization that is seen with naturally trained models extends to adversarially hardened models and robust generalization. To complement these means of interpreting model parameter robustness we also use self-organizing maps to provide a visual means of superimposing adversarial and natural variants on a model's decision space, thus allowing the model's global robustness to be comprehensively examined.

Adversarial Deep Learning for Robust Detection of Binary Encoded Malware

Mar 25, 2018

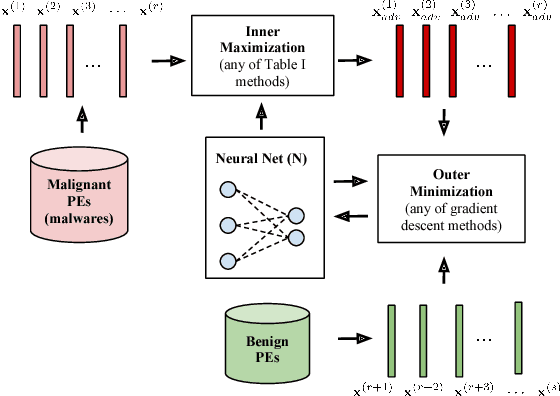

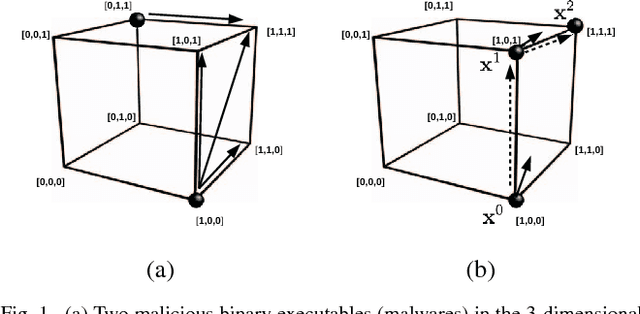

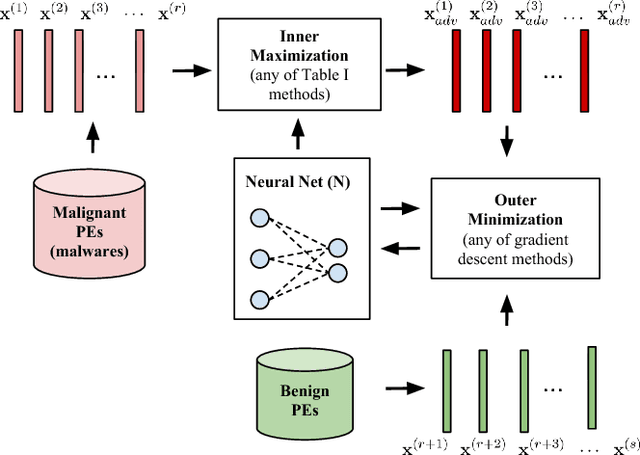

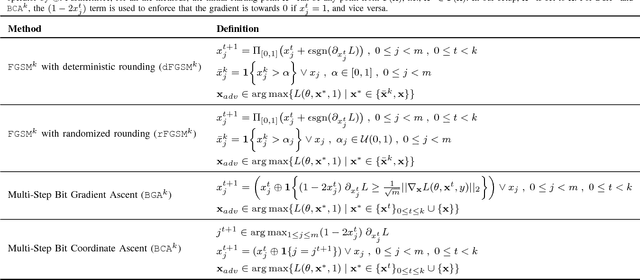

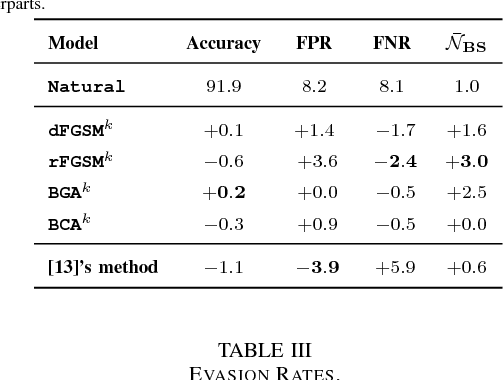

Abstract:Malware is constantly adapting in order to avoid detection. Model based malware detectors, such as SVM and neural networks, are vulnerable to so-called adversarial examples which are modest changes to detectable malware that allows the resulting malware to evade detection. Continuous-valued methods that are robust to adversarial examples of images have been developed using saddle-point optimization formulations. We are inspired by them to develop similar methods for the discrete, e.g. binary, domain which characterizes the features of malware. A specific extra challenge of malware is that the adversarial examples must be generated in a way that preserves their malicious functionality. We introduce methods capable of generating functionally preserved adversarial malware examples in the binary domain. Using the saddle-point formulation, we incorporate the adversarial examples into the training of models that are robust to them. We evaluate the effectiveness of the methods and others in the literature on a set of Portable Execution~(PE) files. Comparison prompts our introduction of an online measure computed during training to assess general expectation of robustness.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge