Aleksey Golovinskiy

Large-Scale 3D Scene Classification With Multi-View Volumetric CNN

Dec 26, 2017

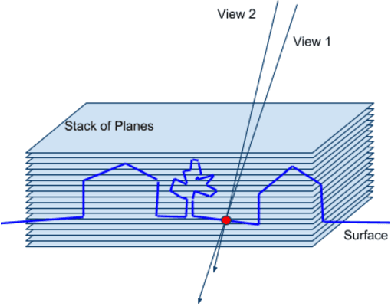

Abstract:We introduce a method to classify imagery using a convo- lutional neural network (CNN) on multi-view image pro- jections. The power of our method comes from using pro- jections of multiple images at multiple depth planes near the reconstructed surface. This enables classification of categories whose salient aspect is appearance change un- der different viewpoints, such as water, trees, and other materials with complex reflection/light response proper- ties. Our method does not require boundary labelling in images and works on pixel-level classification with a small (few pixels) context, which simplifies the cre- ation of a training set. We demonstrate this application on large-scale aerial imagery collections, and extend the per-pixel classification to robustly create a consistent 2D classification which can be used to fill the gaps in non- reconstructible water regions. We also apply our method to classify tree regions. In both cases, the training data can quickly be generated using a small number of manually- created polygons on a map. We show that even with a very simple and standard network our CNN outperforms the state-of-the-art image classification, the Inception-V3 model retrained from a large collection of aerial images.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge