Aleem Khaliq

Domain-Adversarial Training of Self-Attention Based Networks for Land Cover Classification using Multi-temporal Sentinel-2 Satellite Imagery

Apr 01, 2021

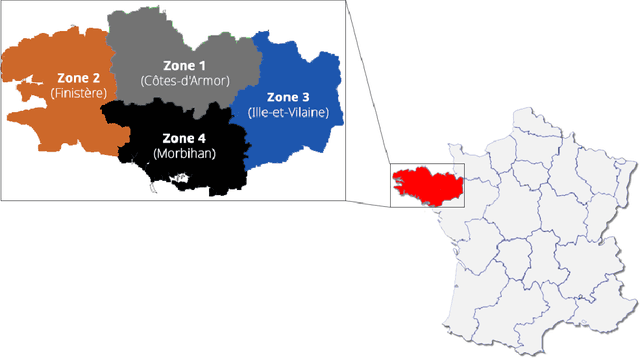

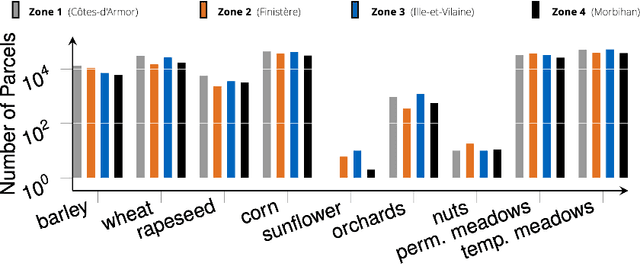

Abstract: The increasing availability of large-scale labeled remote sensing data has prompted researchers to develop increasingly precise and accurate data-driven models for land cover and crop classification (LC&CC). Moreover, with the introduction of self-attention and introspection mechanisms, deep learning approaches have shown promising results in processing long temporal sequences in the multi-spectral domain with contained computational requirements. Nevertheless, most practical applications cannot rely on labeled data, and field surveys are a time-consuming solution that strictly limits the number of collected samples. Moreover, atmospheric conditions and specific geographical region characteristics constitute a relevant domain gap that prevents a model trained on the available dataset from being applied directly to the area of interest. In this paper, we investigate adversarial training of deep neural networks to bridge the domain discrepancy between distinct geographical zones. In particular, we perform a thorough analysis of domain adaptation applied to challenging multi-spectral, multi-temporal data, highlighting the advantages of adapting state-of-the-art self-attention based models for LC&CC to different target zones where labeled data are not available. Extensive experimentation demonstrated significant performance and generalization gains from applying domain-adversarial training to source and target regions with marked dissimilarities between the distributions of extracted features.
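As a rough illustration of the domain-adversarial idea mentioned in the abstract, the sketch below shows a gradient reversal layer, one common way to implement such training. The abstract does not say which mechanism the authors use, so the class name `GradientReversal`, the scaling factor `lam`, and the toy values are all assumptions made for illustration only.

```python
class GradientReversal:
    """Identity in the forward pass; negates and scales gradients in the backward pass.

    Hypothetical helper for illustration: it is placed between a feature
    extractor and a domain classifier, so that the extractor is pushed to
    produce features the domain classifier cannot tell apart.
    """

    def __init__(self, lam=1.0):
        self.lam = lam  # assumed trade-off factor for the adversarial signal

    def forward(self, features):
        # Features pass through unchanged.
        return list(features)

    def backward(self, grad_output):
        # Gradients coming from the domain classifier are flipped and scaled.
        return [-self.lam * g for g in grad_output]


grl = GradientReversal(lam=0.5)
features = [0.2, -1.0, 3.0]                      # toy feature vector
out = grl.forward(features)
grad_from_domain_classifier = [1.0, -2.0, 0.5]   # toy upstream gradient
grad_to_extractor = grl.backward(grad_from_domain_classifier)
```

In a real framework the same behavior would be expressed through that framework's custom-gradient mechanism; the manual `forward`/`backward` pair here only makes the sign flip explicit.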

Multi-image Super Resolution of Remotely Sensed Images using Residual Feature Attention Deep Neural Networks

Jul 08, 2020

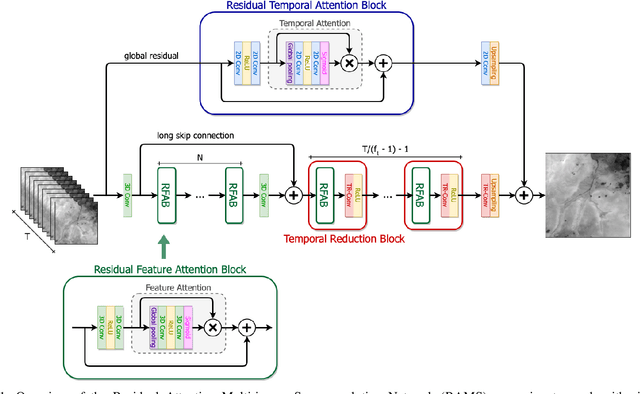

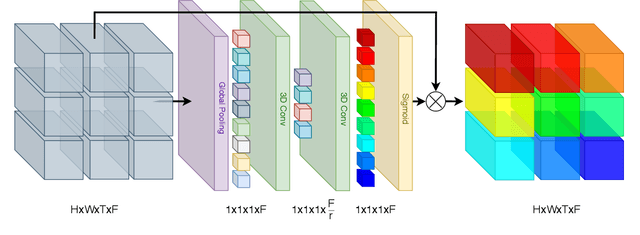

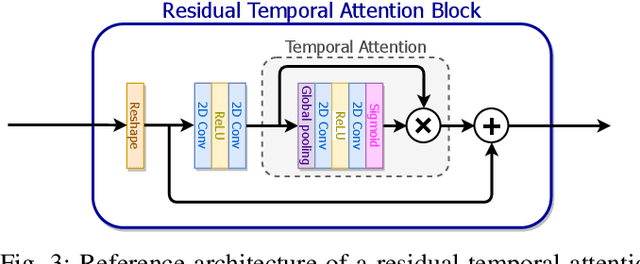

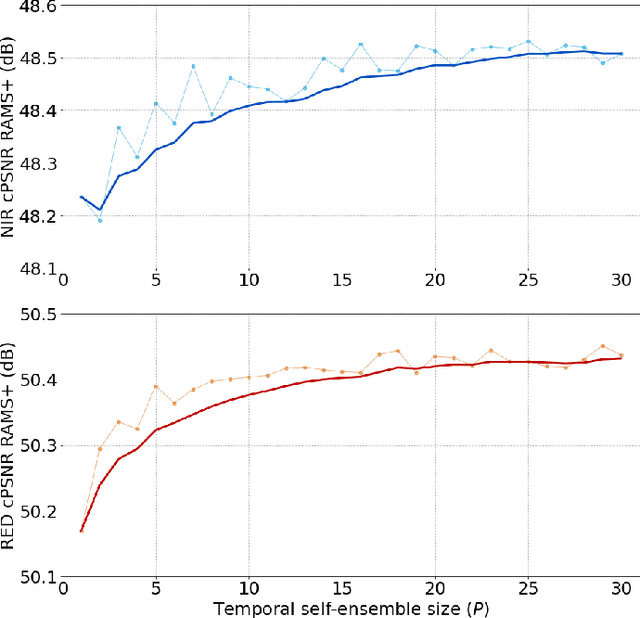

Abstract: Convolutional Neural Networks (CNNs) have consistently achieved state-of-the-art results in image Super-Resolution (SR), representing an exceptional opportunity for the remote sensing field to extract further information and knowledge from captured data. However, most of the works published in the literature so far have focused on the Single-Image Super-Resolution problem. At present, satellite-based remote sensing platforms offer huge data availability with high temporal resolution and low spatial resolution. In this context, the presented research proposes a novel residual attention model (RAMS) that efficiently tackles the multi-image super-resolution task, simultaneously exploiting spatial and temporal correlations to combine multiple images. We introduce the mechanism of visual feature attention with 3D convolutions in order to obtain an aware data fusion and information extraction of the multiple low-resolution images, transcending the limitations of the local region of convolutional operations. Moreover, having multiple inputs of the same scene, our representation learning network makes extensive use of nested residual connections to let redundant low-frequency signals flow through and to focus the computation on the more important high-frequency components. Extensive experimentation and evaluations against other available solutions, either for single or multi-image super-resolution, have demonstrated that the proposed deep learning-based solution can be considered state-of-the-art for Multi-Image Super-Resolution for remote sensing applications.

Improvement in Land Cover and Crop Classification based on Temporal Features Learning from Sentinel-2 Data Using Recurrent-Convolutional Neural Network (R-CNN)

May 05, 2020

Abstract: The increasing spatial and temporal resolution of globally available satellite images, such as those provided by Sentinel-2, creates new possibilities for researchers to use freely available multi-spectral optical images, with decametric spatial resolution and more frequent revisits, for remote sensing applications such as land cover and crop classification (LC&CC), agricultural monitoring and management, and environmental monitoring. Existing solutions dedicated to cropland mapping can be categorized as pixel-based or object-based. However, the task remains challenging when more classes of agricultural crops are considered at a massive scale. In this paper, a novel and optimal deep learning model for pixel-based LC&CC is developed and implemented based on Recurrent Neural Networks (RNN) in combination with Convolutional Neural Networks (CNN), using multi-temporal Sentinel-2 imagery of the central-northern part of Italy, which has a diverse agricultural system dominated by economic crop types. The proposed methodology is capable of automated feature extraction by learning the time correlation of multiple images, which reduces the need for manual feature engineering and modeling of crop phenological stages. Fifteen classes, including major agricultural crops, were considered in this study. For comparison, we also tested other widely used traditional machine learning algorithms, such as support vector machine (SVM), random forest (RF), kernel SVM, and a gradient boosting machine (XGBoost). The overall accuracy achieved by our proposed Pixel R-CNN was 96.5%, a considerable improvement over existing mainstream methods. This study showed that the Pixel R-CNN based model offers a highly accurate way to assess and employ time-series data for multi-temporal classification tasks.
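For readers unfamiliar with the overall accuracy metric quoted above, the following minimal sketch shows how it is usually computed from a confusion matrix: correctly classified samples divided by all samples. The three-class matrix is invented purely for illustration and has no connection to the paper's fifteen classes or its reported 96.5%.

```python
def overall_accuracy(confusion):
    """Fraction of samples on the diagonal of a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Made-up 3-class confusion matrix (rows: true class, columns: predicted class).
toy_confusion = [
    [50, 2, 1],
    [3, 45, 2],
    [0, 4, 43],
]
oa = overall_accuracy(toy_confusion)  # 138 correct out of 150 samples
```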

UAV and Machine Learning Based Refinement of a Satellite-Driven Vegetation Index for Precision Agriculture

Apr 29, 2020

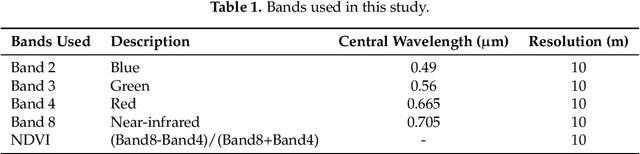

Abstract: Precision agriculture is considered to be a fundamental approach in pursuing a low-input, high-efficiency, and sustainable kind of agriculture when performing site-specific management practices. To achieve this objective, a reliable and updated description of the local status of crops is required. Remote sensing, and in particular satellite-based imagery, has proved to be a valuable tool in crop mapping, monitoring, and disease assessment. However, freely available satellite imagery with low or moderate resolution has shown some limits in specific agricultural applications, e.g., where crops are grown in rows. Indeed, in this framework, the satellite's output could be biased by inter-row cover, giving inaccurate information about crop status. This paper presents a novel satellite imagery refinement framework, based on a deep learning technique that exploits information derived from high-resolution images acquired by multispectral sensors mounted on an unmanned aerial vehicle (UAV). To train the convolutional neural network, only a single UAV-driven dataset is required, making the proposed approach simple and cost-effective. A vineyard in Serralunga d'Alba (Northern Italy) was chosen as a case study for validation purposes. Refined satellite-driven normalized difference vegetation index (NDVI) maps, acquired in four different periods during the vine growing season, were shown by correlation analysis and ANOVA to describe crop status better than the raw datasets. In addition, using a K-means based classifier, 3-class vineyard vigor maps, which are a valuable tool for growers, were derived from the NDVI maps.
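The two quantities named in this abstract can be sketched briefly: NDVI is defined as (NIR - Red) / (NIR + Red), and a K-means step can group NDVI values into three vigor classes. The code below is a minimal sketch under stated assumptions, not the authors' pipeline: the function names, the deterministic center initialization, and the reflectance values are hypothetical, and it clusters plain one-dimensional NDVI values rather than full maps.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel."""
    denom = nir + red
    return 0.0 if denom == 0 else (nir - red) / denom


def kmeans_1d(values, k=3, iters=20):
    """Plain 1-D K-means; returns cluster centers sorted from low to high."""
    ordered = sorted(values)
    # Assumed deterministic init: spread the starting centers across the sorted values.
    centers = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centers[c]))
            clusters[nearest].append(v)
        # Keep the old center if a cluster ends up empty.
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return sorted(centers)


def vigor_class(value, centers):
    """Index of the nearest center: 0 = low, 1 = medium, 2 = high vigor."""
    return min(range(len(centers)), key=lambda c: abs(value - centers[c]))


# Invented (NIR, Red) reflectance pairs, for illustration only.
pixels = [(0.30, 0.20), (0.32, 0.22), (0.45, 0.15),
          (0.48, 0.14), (0.60, 0.05), (0.62, 0.06)]
ndvi_values = [ndvi(nir, red) for nir, red in pixels]
centers = kmeans_1d(ndvi_values, k=3)
labels = [vigor_class(v, centers) for v in ndvi_values]
```

Sorting the centers makes the class index meaningful, so a higher label always corresponds to a higher NDVI cluster.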

Real-Time Apple Detection System Using Embedded Systems With Hardware Accelerators: An Edge AI Application

Apr 28, 2020

Abstract: Real-time apple detection in orchards is one of the most effective ways of estimating apple yields, which helps in managing apple supplies more effectively. Traditional detection methods used computationally intensive machine learning algorithms with demanding hardware setups, which are not suitable for in-field real-time apple detection due to their weight and power constraints. In this study, a real-time embedded solution inspired by "Edge AI" is proposed for apple detection, implementing the YOLOv3-tiny algorithm on various embedded platforms such as the Raspberry Pi 3 B+ in combination with the Intel Movidius Neural Computing Stick (NCS), Nvidia's Jetson Nano, and Jetson AGX Xavier. The training data set was compiled from images acquired during a field survey of an apple orchard situated in northern Italy, and the images used for testing were taken from a widely used Google data set by filtering out the images containing apples in different scenes, to ensure the robustness of the algorithm. The proposed study adapts the YOLOv3-tiny architecture to detect small objects. It shows the feasibility of deploying the customized model on cheap and power-efficient embedded hardware without compromising mean average detection accuracy (83.64%), and it achieves a frame rate of up to 30 fps even in difficult scenarios such as overlapping apples, complex backgrounds, and apples partially hidden by leaves and branches. Furthermore, the proposed embedded solution can be deployed on unmanned ground vehicles to detect, count, and measure the size of apples in real time, supporting farmers and agronomists in decision making and management.
