Akshit Gupta

Using street view imagery and deep generative modeling for estimating the health of urban forests

Apr 20, 2025

Abstract: Healthy urban forests comprising diverse trees and shrubs play a crucial role in mitigating climate change. They offer several key benefits, such as providing shade for energy conservation and intercepting rainfall to reduce flood runoff and soil erosion. Traditional approaches to monitoring the health of urban forests require instrumented inspection techniques, often involving a large amount of human labor and subjective evaluation. As a result, they do not scale to cities that lack extensive resources. Recent approaches that use multi-spectral imaging data from terrestrial sensing and from satellites are constrained, respectively, by the need for dedicated deployments and by limited spatial resolution. In this work, we propose an alternative approach to monitoring urban forests using simplified inputs: street view imagery, tree inventory data, and meteorological conditions. We propose to use image-to-image translation networks to estimate two urban forest health parameters, namely NDVI and CTD. Finally, we aim to compare the generated results with ground truth data collected in an onsite campaign using handheld multi-spectral and thermal imaging sensors. With the advent and expansion of street view imagery platforms such as Google Street View and Mapillary, this approach should enable city authorities to manage urban forests effectively at scale.

A Comprehensive Survey of AI-Driven Advancements and Techniques in Automated Program Repair and Code Generation

Nov 12, 2024

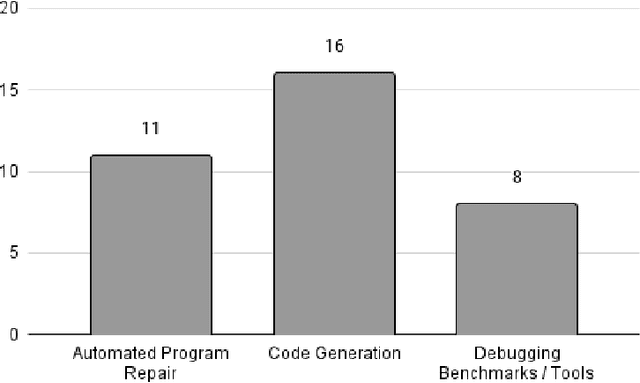

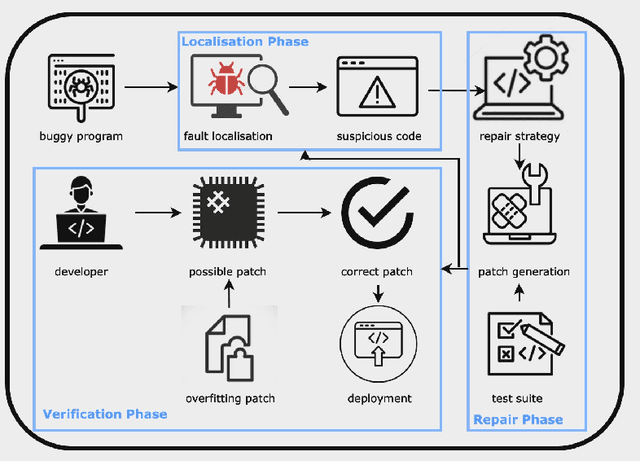

Abstract: Bug fixing and code generation have been core research topics in software development for many years. The recent explosive growth in Large Language Models has completely transformed these spaces, putting incredibly powerful tools for both within reach. This survey reviews 27 recent papers, split into two groups: one dedicated to Automated Program Repair (APR) and LLM integration, and the other to code generation using LLMs. The first group consists of new methods for bug detection and repair, which include locating semantic errors, security vulnerabilities, and runtime failure bugs. The survey emphasizes the role of LLMs in reducing manual debugging effort by moving APR toward context-aware fixes, with innovations that boost accuracy and efficiency in automatic debugging. The second group focuses on code generation, providing an overview of both general-purpose LLMs fine-tuned for programming and task-specific models. It also presents methods to improve code generation, such as identifier-aware training, instruction-level fine-tuning, and the incorporation of semantic code structures. The survey contrasts the methodologies in APR and code generation to identify trends such as the use of LLMs, feedback loops that enable iterative code improvement, and open-source models. It also discusses the challenges of achieving functional correctness and security, and outlines future directions for research in LLM-based software development.
