Akram S. Awad

Distributionally Robust Domain Adaptation

Oct 30, 2022

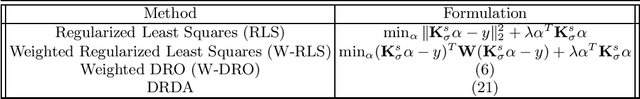

Abstract: Domain Adaptation (DA) has recently received significant attention due to its potential to adapt a learning model across source and target domains with mismatched distributions. Since DA methods rely exclusively on the given source and target domain samples, they generally yield models that are vulnerable to noise and unable to adapt to unseen samples from the target domain, which calls for DA methods that guarantee the robustness and generalization of the learned models. In this paper, we propose DRDA, a distributionally robust domain adaptation method. DRDA leverages a distributionally robust optimization (DRO) framework to learn a robust decision function that minimizes the worst-case target domain risk and generalizes to any sample from the target domain by transferring knowledge from a given labeled source domain sample. We utilize the Maximum Mean Discrepancy (MMD) metric to construct an ambiguity set of distributions that provably contains the source and target domain distributions with high probability. Hence, the risk is shown to upper bound the out-of-sample target domain loss. Our experimental results demonstrate that our formulation outperforms existing robust learning approaches.
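As a rough illustration of the MMD metric mentioned in the abstract, the sketch below computes the standard biased empirical estimate of the squared MMD between a source sample and a target sample. The Gaussian kernel, the `bandwidth` parameter, and the function names `gaussian_kernel` and `mmd_squared` are assumptions made for this example; the abstract does not state which kernel or estimator DRDA uses, and this is not the paper's ambiguity-set construction.

```python
import numpy as np


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel matrix between rows of x and rows of y.

    The kernel choice and bandwidth are assumptions for illustration.
    """
    # Pairwise squared Euclidean distances via ||a||^2 + ||b||^2 - 2 a.b
    sq_dists = (
        np.sum(x**2, axis=1)[:, None]
        + np.sum(y**2, axis=1)[None, :]
        - 2.0 * x @ y.T
    )
    # Clip tiny negative values caused by floating-point rounding.
    sq_dists = np.maximum(sq_dists, 0.0)
    return np.exp(-sq_dists / (2.0 * bandwidth**2))


def mmd_squared(source, target, bandwidth=1.0):
    """Biased (V-statistic) estimate of the squared MMD between two samples."""
    k_ss = gaussian_kernel(source, source, bandwidth)
    k_tt = gaussian_kernel(target, target, bandwidth)
    k_st = gaussian_kernel(source, target, bandwidth)
    return k_ss.mean() + k_tt.mean() - 2.0 * k_st.mean()
```

Under these assumptions, the estimate is zero when both arguments are the same sample and grows as the two samples drift apart. An MMD-based ambiguity set would then collect the distributions whose MMD to a reference distribution stays below some radius; how that radius is chosen is part of the paper's analysis and is not reproduced here.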
