Aikaterina Manoli

Mental Models of Autonomy and Sentience Shape Reactions to AI

Dec 09, 2025

Abstract: Narratives about artificial intelligence (AI) entangle autonomy, the capacity to self-govern, with sentience, the capacity to sense and feel. AI agents that perform tasks autonomously and companions that recognize and express emotions may activate mental models of autonomy and sentience, respectively, provoking distinct reactions. To examine this possibility, we conducted three pilot studies (N = 374) and four preregistered vignette experiments describing an AI as autonomous, sentient, both, or neither (N = 2,702). Activating a mental model of sentience increased general mind perception (cognition and emotion) and moral consideration more than autonomy, but autonomy increased perceived threat more than sentience. Sentience also increased perceived autonomy more than vice versa. Based on a within-paper meta-analysis, sentience changed reactions more than autonomy on average. By disentangling different mental models of AI, we can study human-AI interaction with more precision to better navigate the detailed design of anthropomorphized AI and prompting interfaces.

The AI Double Standard: Humans Judge All AIs for the Actions of One

Dec 08, 2024

Abstract: Robots and other artificial intelligence (AI) systems are widely perceived as moral agents responsible for their actions. As AI proliferates, these perceptions may become entangled via the moral spillover of attitudes towards one AI to attitudes towards other AIs. We tested how the seemingly harmful and immoral actions of an AI or human agent spill over to attitudes towards other AIs or humans in two preregistered experiments. In Study 1 (N = 720), we established the moral spillover effect in human-AI interaction by showing that immoral actions increased attributions of negative moral agency (i.e., acting immorally) and decreased attributions of positive moral agency (i.e., acting morally) and moral patiency (i.e., deserving moral concern) to both the agent (a chatbot or human assistant) and the group to which they belong (all chatbot or human assistants). There was no significant difference in the spillover effects between the AI and human contexts. In Study 2 (N = 684), we tested whether spillover persisted when the agent was individuated with a name and described as an AI or human, rather than specifically as a chatbot or personal assistant. We found that spillover persisted in the AI context but not in the human context, possibly because AIs were perceived as more homogeneous due to their outgroup status relative to humans. This asymmetry suggests a double standard whereby AIs are judged more harshly than humans when one agent morally transgresses. With the proliferation of diverse, autonomous AI systems, HCI research and design should account for the fact that experiences with one AI could easily generalize to perceptions of all AIs and negative HCI outcomes, such as reduced trust.

What Do People Think about Sentient AI?

Jul 11, 2024

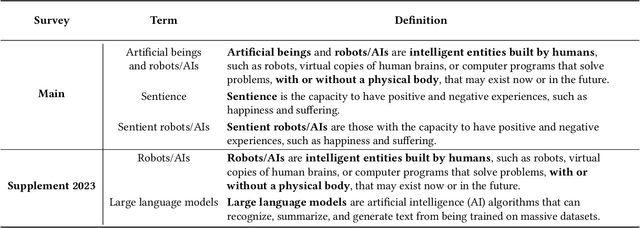

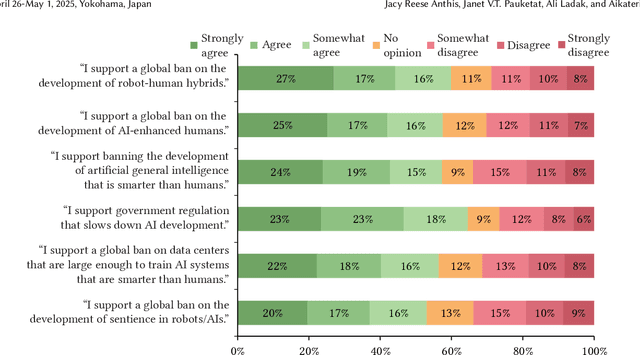

Abstract: With rapid advances in machine learning, many people in the field have been discussing the rise of digital minds and the possibility of artificial sentience. Future developments in AI capabilities and safety will depend on public opinion and human-AI interaction. To begin to fill this research gap, we present the first nationally representative survey data on the topic of sentient AI: initial results from the Artificial Intelligence, Morality, and Sentience (AIMS) survey, a preregistered and longitudinal study of U.S. public opinion that began in 2021. Across one wave of data collection in 2021 and two in 2023 (total N = 3,500), we found mind perception and moral concern for AI well-being in 2021 were higher than predicted and significantly increased in 2023: for example, 71% agree sentient AI deserve to be treated with respect, and 38% support legal rights. People have become more threatened by AI, and there is widespread opposition to new technologies: 63% support a ban on smarter-than-human AI, and 69% support a ban on sentient AI. Expected timelines are surprisingly short and shortening with a median forecast of sentient AI in only five years and artificial general intelligence in only two years. We argue that, whether or not AIs become sentient, the discussion itself may overhaul human-computer interaction and shape the future trajectory of AI technologies, including existential risks and opportunities.
