Ahmed Zgaren

Automatic counting of planting microsites via local visual detection and global count estimation

Nov 01, 2023

Abstract: In the forest industry, mechanical site preparation by mounding is widely used prior to planting operations. One of the main problems when planning planting operations is the difficulty of estimating the number of mounds present on a planting block, as their number may vary greatly depending on site characteristics. This estimation is often carried out through field surveys by several forestry workers. However, this procedure is slow and error-prone. Motivated by recent advances in UAV imagery and artificial intelligence, we propose a fully automated framework to estimate the number of mounds on a planting block. Using computer vision and machine learning, we formulate the counting task as a supervised learning problem using two prediction models. A local detection model is first used to detect visible mounds based on deep features, while a global prediction function is subsequently applied to provide a final estimate based on block-level features. To evaluate the proposed method, we constructed a challenging UAV dataset representing several plantation blocks with different characteristics. The experiments demonstrated the robustness of the proposed method, which outperforms manual methods in precision while significantly reducing time and cost.

Automatic counting of mounds on UAV images: combining instance segmentation and patch-level correction

Sep 06, 2022

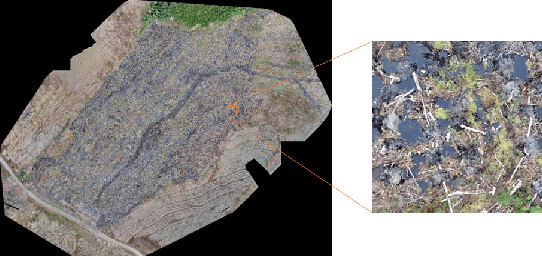

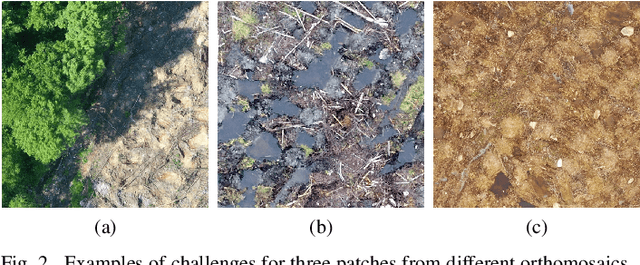

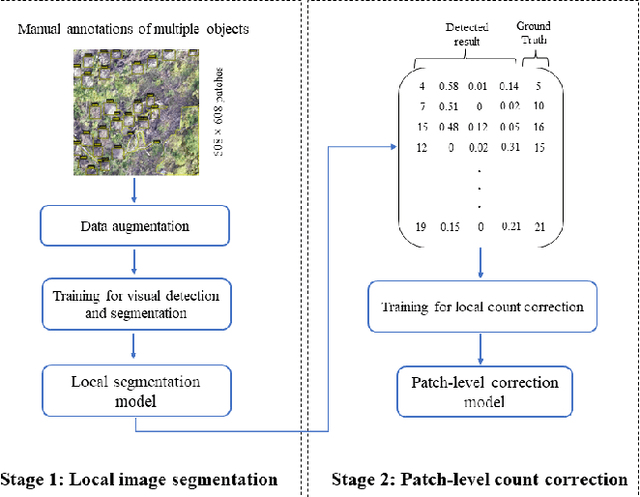

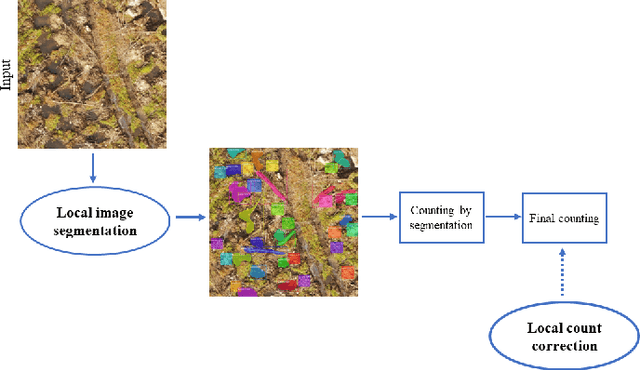

Abstract: Site preparation by mounding is a commonly used silvicultural treatment that improves tree growth conditions by mechanically creating planting microsites called mounds. Following site preparation, the next critical step is to count the number of mounds, which provides forest managers with a precise estimate of the number of seedlings required for a given plantation block. Counting the mounds is generally conducted through manual field surveys by forestry workers, which is costly and prone to errors, especially for large areas. To address this issue, we present a novel framework exploiting advances in Unmanned Aerial Vehicle (UAV) imaging and computer vision to accurately estimate the number of mounds on a planting block. The proposed framework comprises two main components. First, we exploit a visual recognition method based on a deep learning algorithm for multiple object detection by pixel-based segmentation. This enables a preliminary count of visible mounds, as well as other frequently seen objects (e.g. trees, debris, accumulation of water), to be used to characterize the planting block. Second, since visual recognition can be limited by several perturbation factors (e.g. mound erosion, occlusion), we employ a machine learning estimation function that predicts the final number of mounds based on the local block properties extracted in the first stage. We evaluate the proposed framework on a new UAV dataset representing numerous planting blocks with varying features. The proposed method outperformed manual counting methods in terms of relative counting precision, indicating that it has the potential to be advantageous and efficient in difficult situations.
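The second stage described in this abstract (correcting a preliminary visual count with block-level properties) can be sketched as a small regression problem. The following is only an illustration under stated assumptions, not the authors' estimation function: the choice of a linear least-squares model, the two features (detected mounds and detected debris), and all numbers are hypothetical, synthetic values chosen for the example.

```python
from typing import List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - tail) / m[row][row]
    return x


def fit_count_corrector(
    features: Sequence[Sequence[float]], true_counts: Sequence[float]
) -> List[float]:
    """Least-squares fit of true_count ~ w0 + w . features (normal equations)."""
    rows = [[1.0] + list(f) for f in features]  # prepend an intercept term
    n = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    xty = [sum(r[i] * y for r, y in zip(rows, true_counts)) for i in range(n)]
    return solve_linear_system(xtx, xty)


def predict_count(weights: Sequence[float], feature: Sequence[float]) -> float:
    """Apply the fitted correction to one block's stage-one counts."""
    return weights[0] + sum(w * f for w, f in zip(weights[1:], feature))


# Synthetic training blocks: (detected mounds, detected debris) per block.
# These values are invented for illustration and are not from the dataset.
stage_one_counts = [(100, 10), (200, 40), (150, 20), (300, 30)]
surveyed_counts = [107, 222, 162, 317]

weights = fit_count_corrector(stage_one_counts, surveyed_counts)
estimate = predict_count(weights, (250, 20))
print(round(estimate))
```

In this toy setup the fitted model learns that some mounds go undetected in proportion to the amount of debris, which is one plausible way occlusion could be compensated. A real estimation function would likely use more block properties and a model validated on held-out blocks.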

Coarse-to-Fine Object Tracking Using Deep Features and Correlation Filters

Dec 23, 2020

Abstract: In recent years, deep learning trackers have achieved encouraging results while bringing interesting ideas to solve the tracking problem. This progress is mainly due to the use of learned deep features obtained by training deep convolutional neural networks (CNNs) on large image databases. But since CNNs were originally developed for image classification, the appearance modeling provided by their deep layers might not be discriminative enough for the tracking task. In fact, such features represent high-level information that is more related to object category than to a specific instance of the object. Motivated by this observation, and by the fact that discriminative correlation filters (DCFs) may provide complementary low-level information, we present a novel tracking algorithm taking advantage of both approaches. We formulate the tracking task as a two-stage procedure. First, we exploit the generalization ability of deep features to coarsely estimate target translation, while ensuring invariance to appearance change. Then, we capitalize on the discriminative power of correlation filters to precisely localize the tracked object. Furthermore, we designed an update control mechanism to learn appearance change while avoiding model drift. We evaluated the proposed tracker on object tracking benchmarks. Experimental results show the robustness of our algorithm, which performs favorably against CNN and DCF-based trackers. Code is available at: https://github.com/AhmedZgaren/Coarse-to-fine-Tracker
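The two-stage idea (a coarse translation estimate followed by precise localization) can be illustrated with a toy template search. This is not the tracker from the paper or its repository: plain sum-of-squared-differences matching stands in for both the deep features and the correlation filters, and the function names, the stride, and the synthetic Gaussian blob image are all assumptions made for the sketch.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]


def make_blob_image(size: int, center: Tuple[float, float], sigma: float) -> Image:
    """Synthetic test image: a single smooth Gaussian blob (hypothetical data)."""
    cr, cc = center
    return [
        [math.exp(-((r - cr) ** 2 + (c - cc) ** 2) / (2 * sigma ** 2))
         for c in range(size)]
        for r in range(size)
    ]


def crop(image: Image, top: int, left: int, height: int, width: int) -> Image:
    return [row[left:left + width] for row in image[top:top + height]]


def ssd(image: Image, template: Image, top: int, left: int) -> float:
    """Sum of squared differences between the template and one image window."""
    return sum(
        (image[top + r][left + c] - template[r][c]) ** 2
        for r in range(len(template))
        for c in range(len(template[0]))
    )


def best_match(image: Image, template: Image,
               candidates: Sequence[Tuple[int, int]]) -> Tuple[int, int]:
    return min(candidates, key=lambda pos: ssd(image, template, pos[0], pos[1]))


def coarse_to_fine_locate(image: Image, template: Image,
                          stride: int = 4) -> Tuple[int, int]:
    """Coarse pass on a strided grid, then a dense pass around the coarse hit."""
    max_top = len(image) - len(template)
    max_left = len(image[0]) - len(template[0])
    # Stage 1: cheap, coarse estimate of the target translation.
    coarse = [(t, l)
              for t in range(0, max_top + 1, stride)
              for l in range(0, max_left + 1, stride)]
    ct, cl = best_match(image, template, coarse)
    # Stage 2: exhaustive search restricted to one stride around the estimate.
    fine = [(t, l)
            for t in range(max(0, ct - stride), min(max_top, ct + stride) + 1)
            for l in range(max(0, cl - stride), min(max_left, cl + stride) + 1)]
    return best_match(image, template, fine)


frame = make_blob_image(32, center=(13.0, 18.0), sigma=4.0)
target = crop(frame, top=9, left=14, height=8, width=8)
location = coarse_to_fine_locate(frame, target, stride=4)
print(location)
```

The design point this sketch shares with the abstract is the division of labor: the first stage only needs to land near the target, so it can be coarse and tolerant, while the second stage spends its effort on a small neighborhood where precision matters.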
