Adriano Vinhas

Evolutionary algorithms meet self-supervised learning: a comprehensive survey

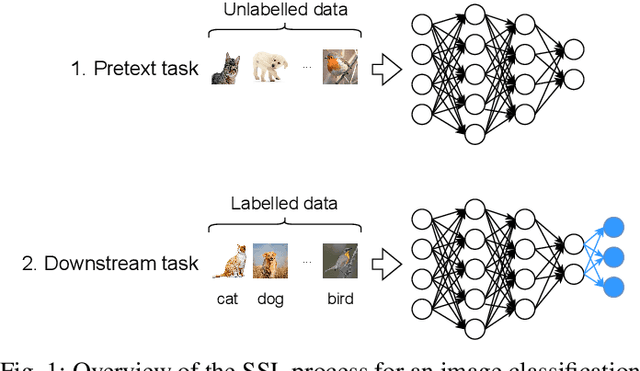

Apr 09, 2025

Abstract: The number of studies that combine Evolutionary Machine Learning and self-supervised learning has been growing steadily in recent years. Evolutionary Machine Learning has been shown to help automate the design of machine learning algorithms and to lead to more reliable solutions. Self-supervised learning, on the other hand, has produced good results in learning useful features when labelled data is limited. This suggests that combining the two areas can help both in shaping evolutionary processes and in automating the design of deep neural networks, while also reducing the need for labelled data. Still, there are no detailed reviews that explain how Evolutionary Machine Learning and self-supervised learning can be used together. To fill this gap, we provide an overview of studies that bring these areas together. Based on this growing interest and the range of existing works, we propose a new sub-area of research, which we call Evolutionary Self-Supervised Learning, and introduce a taxonomy for it. Finally, we point out some of the main challenges and suggest directions for future research to help Evolutionary Self-Supervised Learning grow and mature as a field.

Towards evolution of Deep Neural Networks through contrastive Self-Supervised learning

Jun 20, 2024

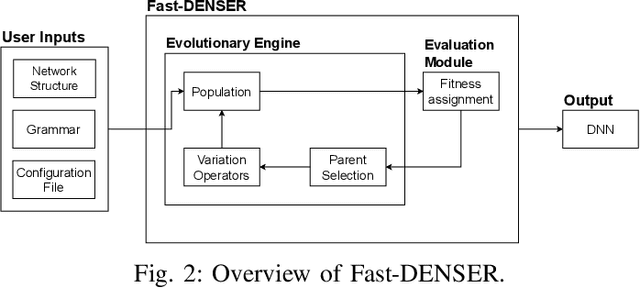

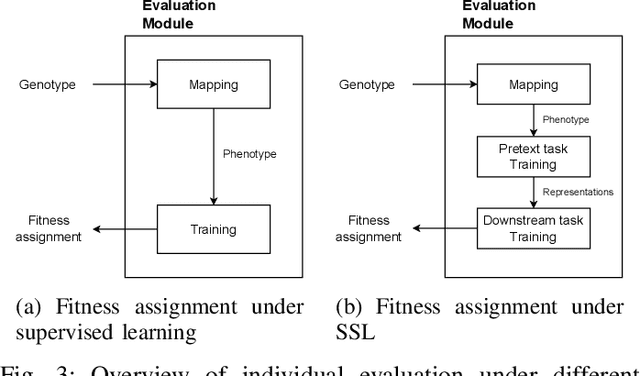

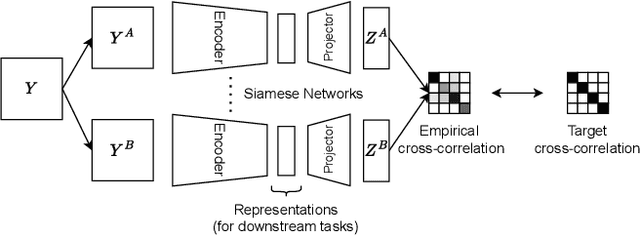

Abstract: Deep Neural Networks (DNNs) have been successfully applied to a wide range of problems. However, two main limitations are commonly pointed out. The first is that they take a long time to design. The second is that they rely heavily on labelled data, which can be costly and hard to obtain. To address the first problem, neuroevolution has proven to be a plausible option for automating the design of DNNs. As for the second problem, self-supervised learning has been used to leverage unlabelled data to learn representations. Our goal is to study how neuroevolution can help self-supervised learning bridge the performance gap to supervised learning. In this work, we propose a framework that evolves deep neural networks using self-supervised learning. Our results on the CIFAR-10 dataset show that it is possible to evolve adequate neural networks while reducing the reliance on labelled data. Moreover, an analysis of the structure of the evolved networks suggests that the amount of labelled data fed to them has less effect on the structure of networks that learned via self-supervised learning than on that of networks that relied on supervised learning.
