Aditya Rana

Scene Understanding for Autonomous Driving

May 11, 2021

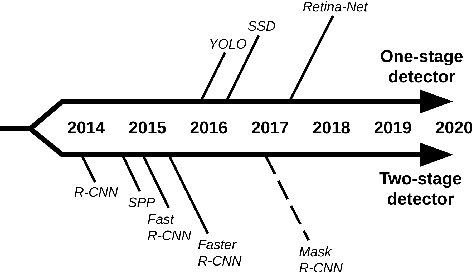

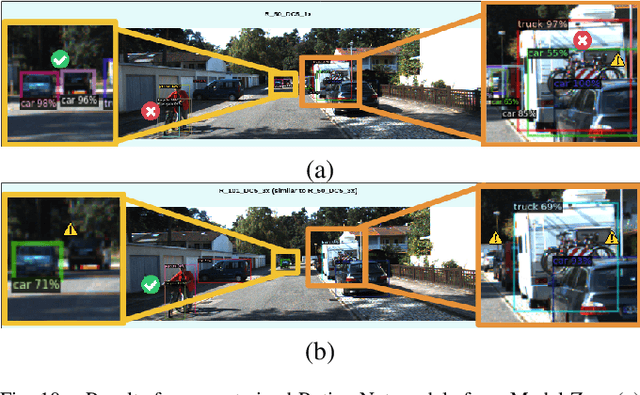

Abstract: Detecting and segmenting objects in images based on their content is one of the most active topics in computer vision. Nowadays, this problem can be addressed using deep learning architectures such as Faster R-CNN or YOLO, among others. In this paper, we study the behaviour of different configurations of RetinaNet, Faster R-CNN and Mask R-CNN provided in Detectron2. First, we evaluate the performance of the pre-trained models on the KITTI-MOTS and MOTSChallenge datasets, both qualitatively and quantitatively (average precision, AP). We observe a significant improvement in performance after fine-tuning these models on the datasets of interest and optimizing hyperparameters. Finally, we run inference in unusual situations using out-of-context datasets, and present interesting results that help us better understand the networks.

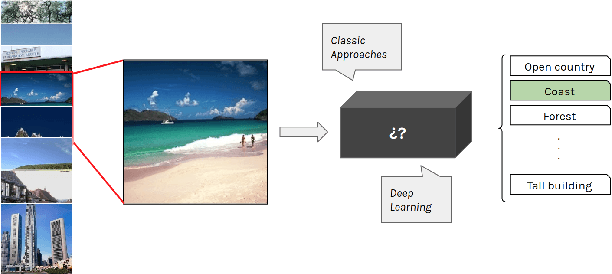

Image Classification with Classic and Deep Learning Techniques

May 11, 2021

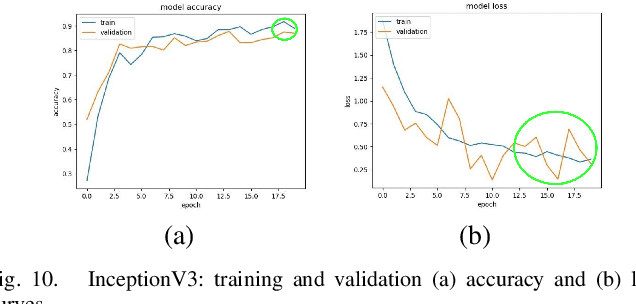

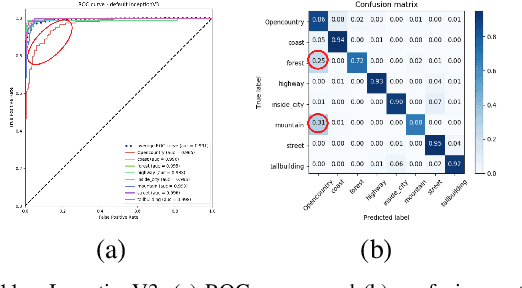

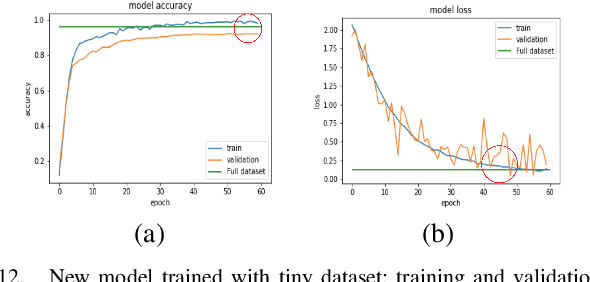

Abstract: Classifying images based on their content is one of the most studied topics in computer vision. Nowadays, this problem can be addressed using modern techniques such as Convolutional Neural Networks (CNNs), but over the years a range of classical methods has also been developed. In this report, we implement an image classifier using both classical computer vision and deep learning techniques. Specifically, we study the performance of a Bag of Visual Words classifier using Support Vector Machines, a Multilayer Perceptron, an existing architecture named InceptionV3 and our own CNN, TinyNet, designed from scratch. We evaluate each model in terms of accuracy and loss, and we obtain results that vary between 0.6 and 0.96 depending on the model and configuration used.
