Aditi Kalyani

Assessing the Quality of MT Systems for Hindi to English Translation

Apr 15, 2014

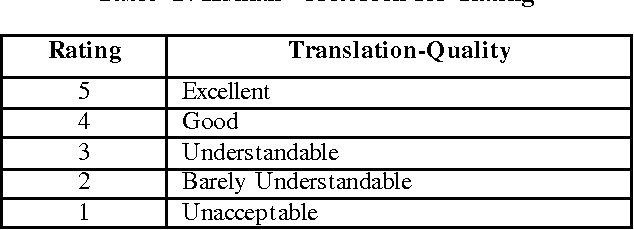

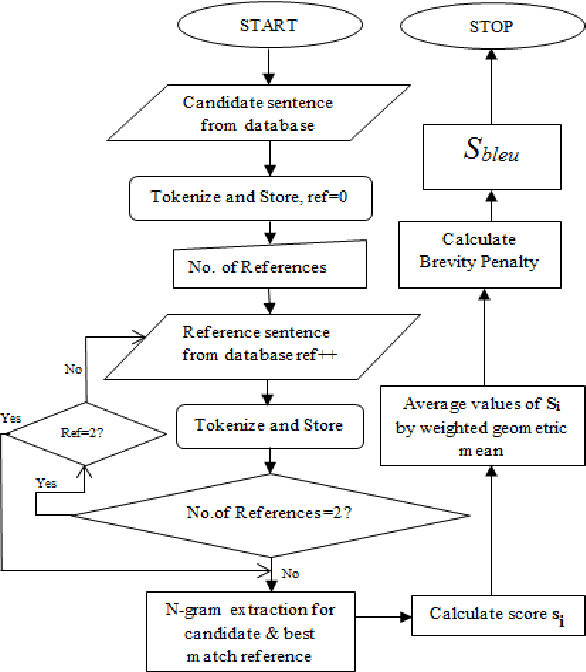

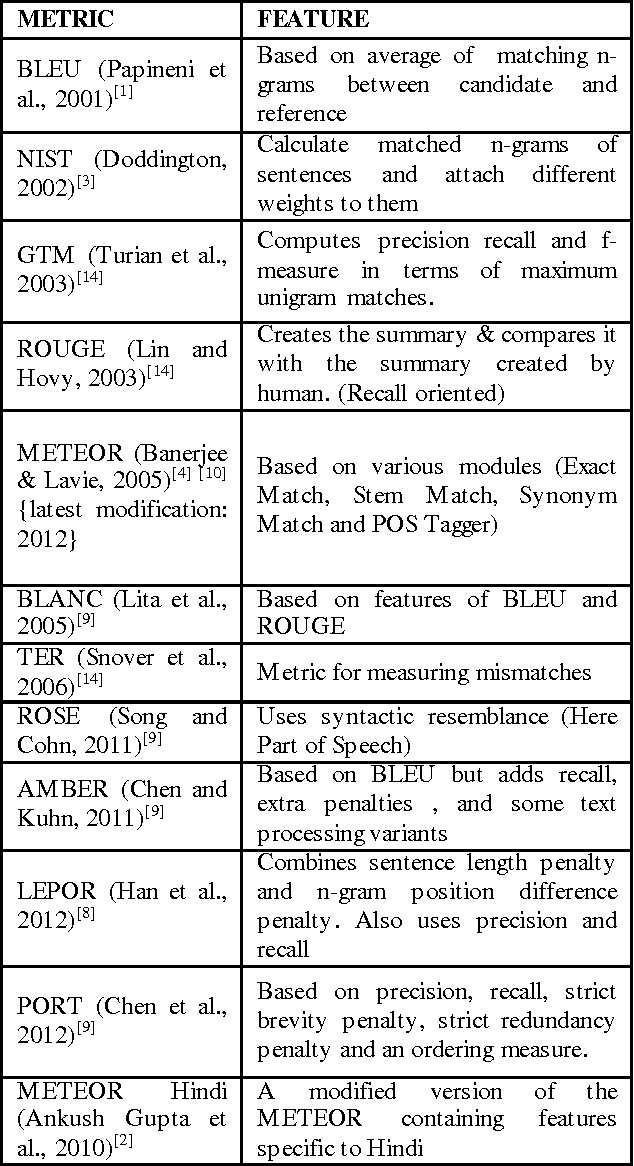

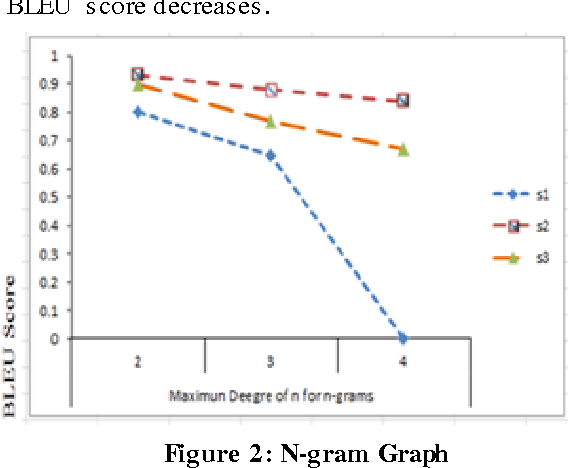

Abstract: Evaluation plays a vital role in checking the quality of MT output. It is done either manually or automatically. Manual evaluation is time-consuming and subjective, so automatic metrics are used most of the time. This paper evaluates the translation quality of different MT engines for Hindi-English (Hindi data is provided as input and English is obtained as output) using various automatic metrics such as BLEU and METEOR. A comparison of the automatic evaluation results with human rankings is also given.

Evaluation and Ranking of Machine Translated Output in Hindi Language using Precision and Recall Oriented Metrics

Apr 07, 2014

Abstract: Evaluation plays a crucial role in the development of machine translation systems. Various automatic metrics exist to judge the quality of an existing MT system, i.e. whether the translated output is of human translation quality or not. We present the implementation results of different metrics applied to Hindi, along with their comparisons, illustrating how effective these metrics are on a free word order language such as Hindi.
