Adhitya Thirumala

Clickbait Classification and Spoiling Using Natural Language Processing

Jun 16, 2023

Abstract: Clickbait is the practice of engineering titles to incentivize readers to click through to articles. Such titles use sensationalized language and reveal as little information as possible. Because clickbait is occasionally intentionally misleading, natural language processing (NLP) can be used to scan the article and answer the question posed by the clickbait title, that is, to spoil it. We tackle two tasks: classifying the clickbait into one of three spoiler types (Task 1), and spoiling the clickbait (Task 2). For Task 1, we propose two binary classifiers that together determine the final spoiler type. For Task 2, we experiment with two approaches: using a question-answering model to identify the span of text containing the spoiler, and using a large language model (LLM) to generate the spoiler. Because the spoiler is contained in the article, we frame the second task as question answering: identifying the starting and ending positions of the spoiler. Our models for Task 1 outperformed the baselines proposed by the dataset authors. Our engineered prompts for Task 2 did not perform as well as those baselines, because the evaluation metric yields lower scores when the output text comes from a generative model rather than an extractive one.
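One way to read "two binary classifiers to determine the final spoiler type" is as a cascade: the first classifier separates one type from the rest, and the second splits the remainder. The abstract does not say how the two classifiers are combined, so the sketch below is only an assumption. The label names and the function `combine_binary_classifiers` are hypothetical, and the classifiers are stand-in callables, not the authors' models.

```python
from typing import Callable

# Hypothetical label names: the abstract only states that there are three types.
TYPE_A, TYPE_B, TYPE_C = "type_a", "type_b", "type_c"

# A binary classifier here is any callable that maps text to True/False.
BinaryClassifier = Callable[[str], bool]


def combine_binary_classifiers(
    text: str,
    is_type_a: BinaryClassifier,
    is_type_b: BinaryClassifier,
) -> str:
    """Cascade two binary decisions into one three-way label (assumed scheme)."""
    # Stage 1: separate TYPE_A from everything else.
    if is_type_a(text):
        return TYPE_A
    # Stage 2: among the remaining inputs, separate TYPE_B from TYPE_C.
    if is_type_b(text):
        return TYPE_B
    return TYPE_C


if __name__ == "__main__":
    # Toy stand-ins for trained models, for illustration only.
    short_title = lambda t: len(t.split()) < 5
    has_number = lambda t: any(ch.isdigit() for ch in t)
    print(combine_binary_classifiers("You won't believe these 7 tricks", short_title, has_number))
```

A cascade keeps each model's job simple, but an error in the first stage cannot be corrected by the second, which is one reason the real combination rule may differ from this one.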

Extractive Question Answering on Queries in Hindi and Tamil

Sep 27, 2022

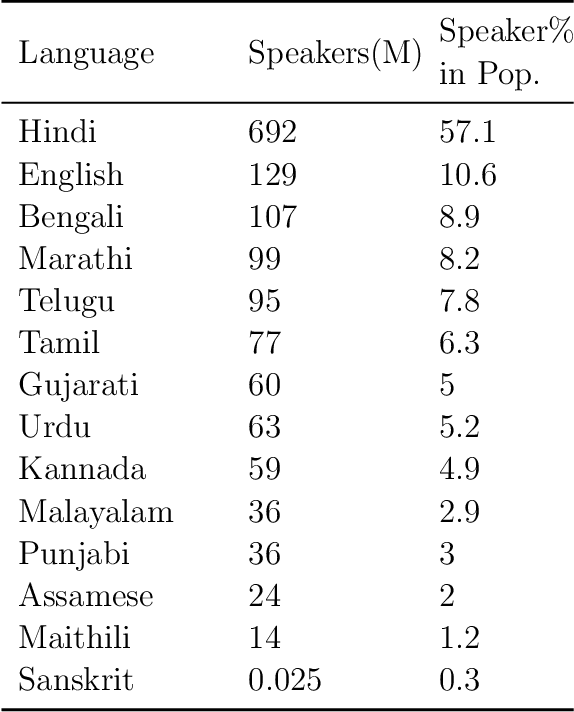

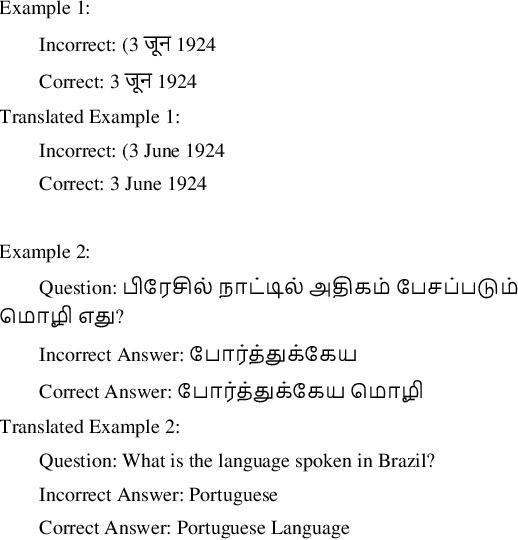

Abstract: Indic languages like Hindi and Tamil are underrepresented in the natural language processing (NLP) field compared to languages like English. Due to this underrepresentation, performance on NLP tasks (such as search) in Indic languages is inferior to performance on the same tasks in English. This difference disproportionately affects people of lower socioeconomic status because they consume the most Internet content in local languages. The goal of this project is to build an NLP model that performs better than pre-existing models on the task of extractive question answering (QA) on a public dataset in Hindi and Tamil. Extractive QA is an NLP task in which answers to questions are extracted from a corresponding body of text. To build the best solution, we used three different models. The first model is an unmodified cross-lingual version of the NLP model RoBERTa, known as XLM-RoBERTa, that is pretrained on 100 languages. The second model is based on the pretrained RoBERTa model with an extra classification head for question answering; for this model we used a custom Indic tokenizer, then optimized hyperparameters and fine-tuned on the Indic dataset. The third model is based on XLM-RoBERTa, but with extra fine-tuning and training on the Indic dataset. We hypothesized that the third model would perform best because of the variety of languages the XLM-RoBERTa model has been pretrained on and the additional fine-tuning on the Indic dataset. This hypothesis proved wrong: the paired RoBERTa models performed best, because their training data was the most specific to the task, whereas the XLM-RoBERTa models were pretrained on a large amount of data that was in neither Hindi nor Tamil.
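As a rough illustration of what "extracted from a corresponding body of text" means in practice, extractive QA models typically score every token as a possible answer start and as a possible answer end, and the answer is the span with the best combined score. The sketch below shows that span-selection step only. It is a minimal sketch and not the authors' code: the function name `best_span`, the `max_len` cap, and the scores are illustrative assumptions, and in a real system the scores would come from the model's question-answering head.

```python
from typing import List, Tuple


def best_span(
    start_scores: List[float],
    end_scores: List[float],
    max_len: int = 30,
) -> Tuple[int, int]:
    """Return (start, end) token indices maximizing start + end score.

    Only spans with start <= end and at most `max_len` tokens are considered.
    """
    best = (0, 0)
    best_score = float("-inf")
    for s, s_score in enumerate(start_scores):
        # The end index may not precede the start or exceed the length cap.
        for e in range(s, min(s + max_len, len(end_scores))):
            score = s_score + end_scores[e]
            if score > best_score:
                best_score = score
                best = (s, e)
    return best


if __name__ == "__main__":
    # Made-up tokens and scores, for illustration only.
    tokens = ["The", "capital", "is", "Chennai"]
    start, end = best_span([0.1, 0.2, 0.3, 4.0], [0.0, 0.1, 0.2, 5.0])
    print(" ".join(tokens[start : end + 1]))
```

Restricting the search to `start <= end` and a maximum span length rules out malformed or implausibly long answers that could otherwise win on raw scores alone.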
