Adam Sadilek

Machine-learned epidemiology: real-time detection of foodborne illness at scale

Dec 05, 2018

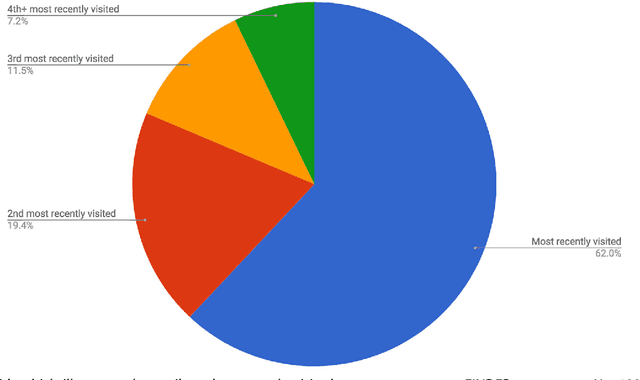

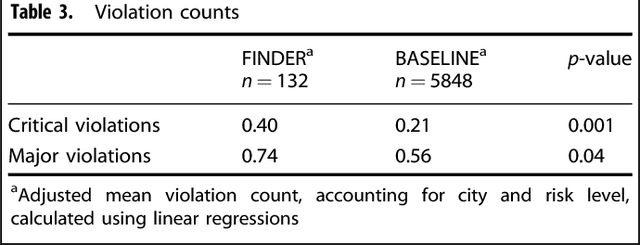

Abstract: Machine learning has become an increasingly powerful tool for solving complex problems, and its application in public health has been underutilized. The objective of this study is to test the efficacy of a machine-learned model of foodborne illness detection in a real-world setting. To this end, we built FINDER, a machine-learned model for real-time detection of foodborne illness using anonymous and aggregated web search and location data. We computed the fraction of people who visited a particular restaurant and later searched for terms indicative of food poisoning to identify potentially unsafe restaurants. We used this information to focus restaurant inspections in two cities and demonstrated that FINDER improves the accuracy of health inspections; restaurants identified by FINDER are 3.1 times as likely to be deemed unsafe during the inspection as restaurants identified by existing methods. Additionally, FINDER enables us to ascertain previously intractable epidemiological information, for example, in 38% of cases the restaurant potentially causing food poisoning was not the last one visited, which may explain the lower precision of complaint-based inspections. We found that FINDER is able to reliably identify restaurants that have an active lapse in food safety, allowing for implementation of corrective actions that would prevent the potential spread of foodborne illness.
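The per-restaurant statistic mentioned in the abstract (the fraction of visitors who later searched for terms indicative of food poisoning) can be illustrated with a minimal sketch. This is not FINDER itself: the abstract does not give the data schema, the query classifier, or the time window, so the function name `illness_search_rate`, the tuple layouts, and the `window_days` parameter below are all hypothetical choices made for illustration.

```python
from collections import defaultdict


def illness_search_rate(visits, searches, window_days=3):
    """Fraction of each restaurant's visitors who searched for illness terms afterwards.

    visits:   iterable of (user_id, restaurant_id, visit_day)   -- assumed layout
    searches: iterable of (user_id, search_day) for queries already flagged
              as indicative of food poisoning                   -- assumed layout
    window_days: how long after a visit a search still counts   -- assumed value
    """
    # Index flagged searches by user for quick lookup.
    searches_by_user = defaultdict(list)
    for user, day in searches:
        searches_by_user[user].append(day)

    visitors = defaultdict(set)        # restaurant -> all users who visited
    later_searchers = defaultdict(set)  # restaurant -> visitors who searched afterwards

    for user, restaurant, visit_day in visits:
        visitors[restaurant].add(user)
        user_searches = searches_by_user.get(user, [])
        # Count the user if any flagged search falls strictly after the visit
        # and within the window.
        if any(0 < day - visit_day <= window_days for day in user_searches):
            later_searchers[restaurant].add(user)

    return {
        restaurant: len(later_searchers[restaurant]) / len(users)
        for restaurant, users in visitors.items()
    }


# Toy data: days are plain integers, all identifiers are made up.
visits = [("u1", "A", 1), ("u2", "A", 1), ("u3", "B", 2), ("u1", "B", 5)]
searches = [("u1", 2), ("u3", 10)]
rates = illness_search_rate(visits, searches)
print(rates)
```

Note that in this toy layout a single search can be attributed to more than one restaurant visited within the window, which is consistent with the abstract's observation that the restaurant potentially at fault is not always the last one visited.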

Location-Based Reasoning about Complex Multi-Agent Behavior

Jan 18, 2014

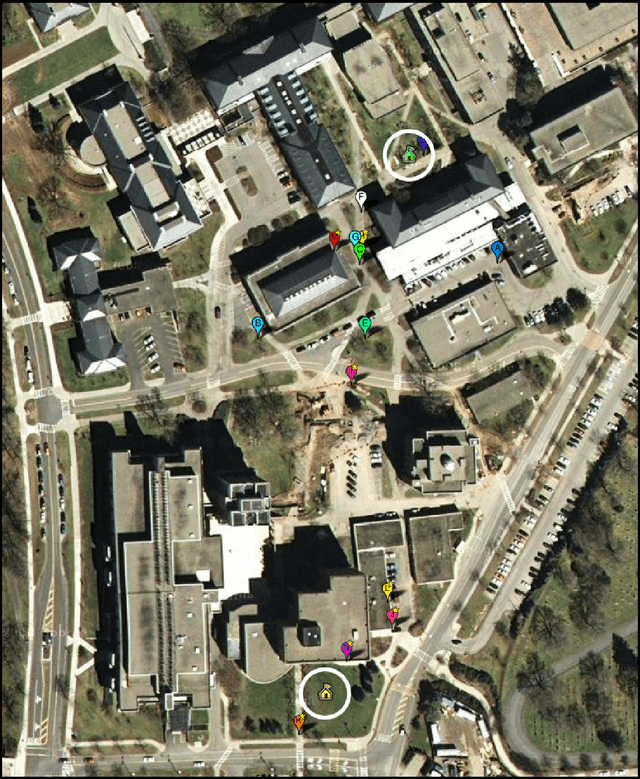

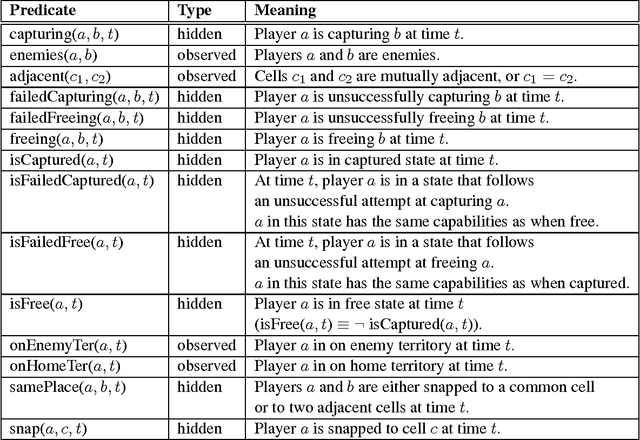

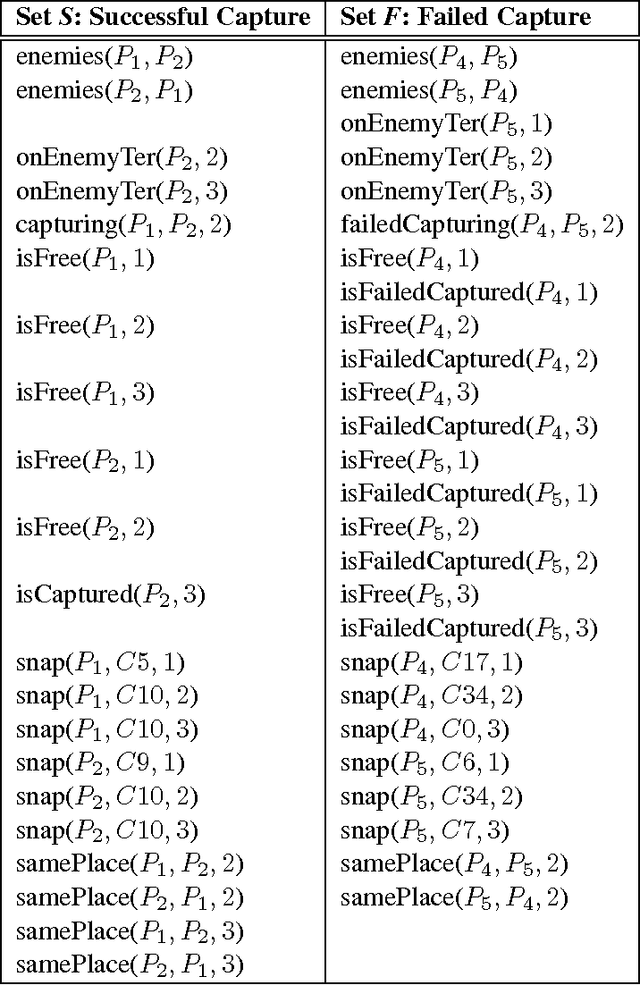

Abstract: Recent research has shown that surprisingly rich models of human activity can be learned from GPS (positional) data. However, most effort to date has concentrated on modeling single individuals or statistical properties of groups of people. Moreover, prior work focused solely on modeling actual successful executions (and not failed or attempted executions) of the activities of interest. We, in contrast, take on the task of understanding human interactions, attempted interactions, and intentions from noisy sensor data in a fully relational multi-agent setting. We use a real-world game of capture the flag to illustrate our approach in a well-defined domain that involves many distinct cooperative and competitive joint activities. We model the domain using Markov logic, a statistical-relational language, and learn a theory that jointly denoises the data and infers occurrences of high-level activities, such as a player capturing an enemy. Our unified model combines constraints imposed by the geometry of the game area, the motion model of the players, and by the rules and dynamics of the game in a probabilistically and logically sound fashion. We show that while it may be impossible to directly detect a multi-agent activity due to sensor noise or malfunction, the occurrence of the activity can still be inferred by considering both its impact on the future behaviors of the people involved as well as the events that could have preceded it. Further, we show that given a model of successfully performed multi-agent activities, along with a set of examples of failed attempts at the same activities, our system automatically learns an augmented model that is capable of recognizing success and failure, as well as the goals of people's actions, with high accuracy. We compare our approach with other alternatives and show that our unified model, which takes into account not only relationships among individual players, but also relationships among activities over the entire length of a game, although more computationally costly, is significantly more accurate. Finally, we demonstrate that explicitly modeling unsuccessful attempts boosts performance on other important recognition tasks.
