Adam J. Hepworth

Human-centred test and evaluation of military AI

Dec 02, 2024

Abstract: The REAIM 2024 Blueprint for Action states that AI applications in the military domain should be ethical and human-centric, and that humans must remain responsible and accountable for their use and effects. Developing rigorous test and evaluation, verification and validation (TEVV) frameworks will contribute to robust oversight mechanisms. TEVV in the development and deployment of AI systems needs to involve human users throughout the lifecycle. Traditional human-centred test and evaluation methods from human factors need to be adapted for deployed AI systems that require ongoing monitoring and evaluation. The language around AI-enabled systems should shift to include the human(s) as a component of the system. Standards and requirements supporting this adjusted definition are needed, as are metrics and the means to evaluate them. The need for dialogue between technologists and policymakers on human-centred TEVV will be evergreen, but dialogue needs to be initiated with an objective in mind for it to be productive. Developing TEVV throughout the system lifecycle is critical to support this evolution, including addressing human scalability and its impact on the scale of achievable testing. Communication between technical and non-technical communities must be improved to ensure that operators and policymakers understand the risk assumed by system use, and to better inform research and development. Test and evaluation in support of responsible AI deployment must include the effect of the human to reflect operationally realised system performance. Means of communicating TEVV results to those who use AI-based systems, and to those who make decisions about their use, will be key to informing risk-based decisions about that use.

Onto4MAT: A Swarm Shepherding Ontology for Generalised Multi-Agent Teaming

Mar 24, 2022

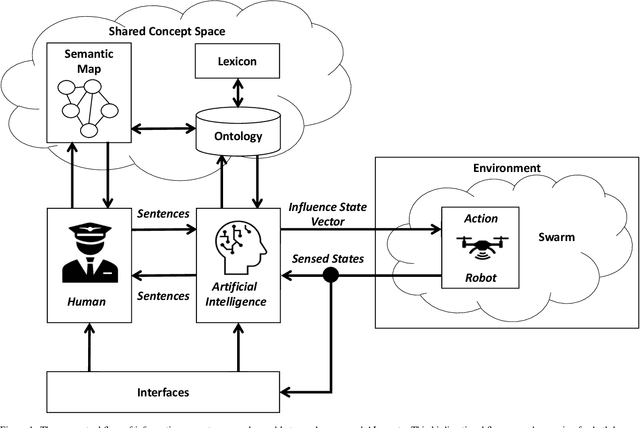

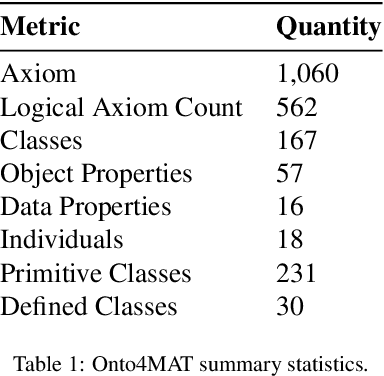

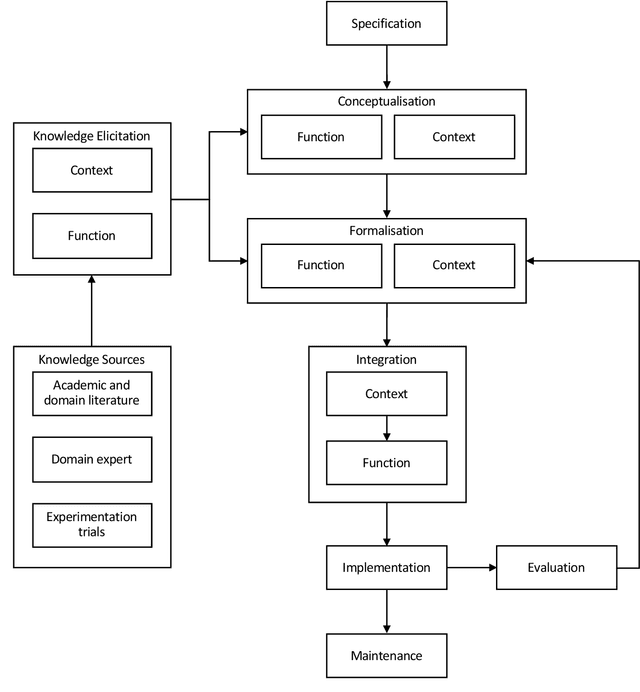

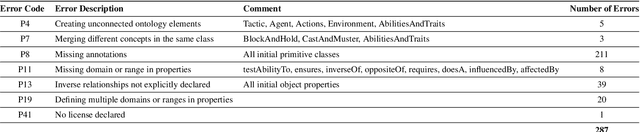

Abstract: Research in multi-agent teaming has increased substantially in recent years, with knowledge-based systems that support teaming processes typically focusing on delivering functional (communicative) solutions so that a team can act meaningfully in response to direction. Enabling humans to interact and team effectively with a swarm of autonomous cognitive agents is an open challenge in Human-Swarm Teaming research, partly because of the focus on developing the architectures that enable these systems. Bi-directional transparency and shared semantic understanding between agents have typically not been prioritised as designed mechanisms in Human-Swarm Teaming, potentially limiting how a human and a swarm team can share understanding and information (data through concepts and contexts) to achieve a goal. To address this, we provide a formal knowledge representation design that enables the swarm Artificial Intelligence to reason about its environment and system, ultimately achieving a shared goal. We propose the Ontology for Generalised Multi-Agent Teaming, Onto4MAT, to enable more effective teaming between humans and teams through the biologically-inspired approach of shepherding.