Acong Zhang

Steering Graph Neural Networks with Pinning Control

Mar 02, 2023

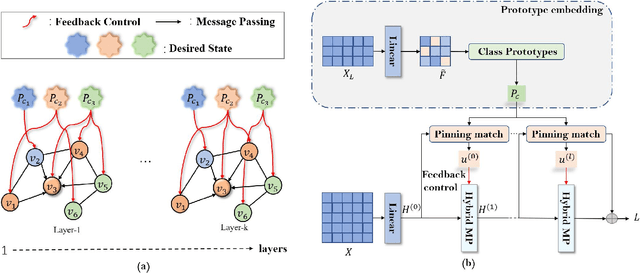

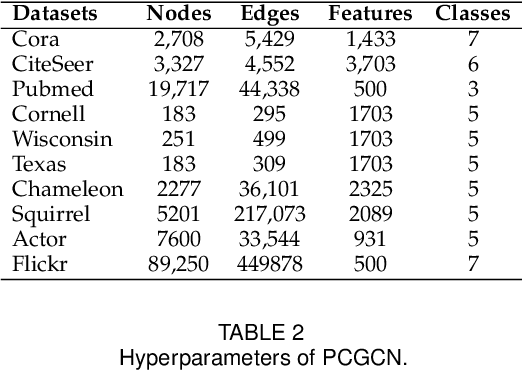

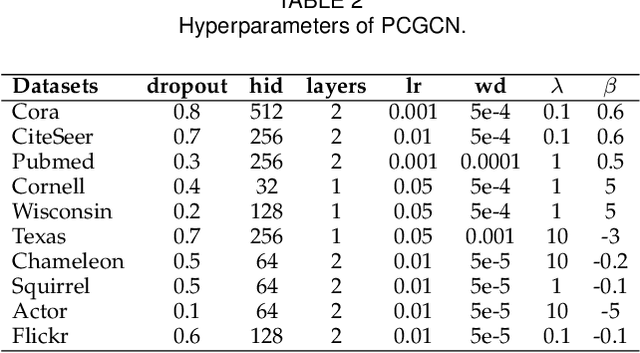

Abstract: In the semi-supervised setting, where labeled data are scarce, it remains a major challenge for message-passing-based graph neural networks (GNNs) to learn feature representations for nodes that share a class label but are distributed discontinuously over the graph. To resolve this discontinuous information transmission problem, we propose a control principle that supervises representation learning by leveraging the prototypes (i.e., class centers) of labeled data. Treating graph learning as a discrete dynamic process and the prototypes of labeled data as "desired" class representations, we borrow the pinning control idea from automatic control theory to design feedback controllers for the feature learning process. The controllers attempt to minimize the differences between the features derived from message passing and the class prototypes in every round, so as to generate class-relevant features. Specifically, we equip every node with an optimal controller in each round by learning the matching relationships between nodes and the class prototypes, enabling nodes to rectify the information aggregated from incompatible neighbors in a graph with strong heterophily. Our experiments demonstrate that the proposed PCGCN model achieves better performance than deep GNNs and other competitive heterophily-oriented methods, especially when the graph has very few labels and strong heterophily.
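The feedback idea in this abstract can be illustrated with a small, dependency-free sketch. It is not the PCGCN implementation: the function names, the mean aggregator, the fixed `gain`, and the softmax-over-distances matching rule are all assumptions made for illustration, whereas the abstract describes a learned matching and an optimal controller per node.

```python
import math

def class_prototypes(features, labels):
    """Mean feature vector of the labeled nodes in each class.
    `labels` maps node index -> class id for labeled nodes only."""
    sums, counts = {}, {}
    for node, cls in labels.items():
        vec = features[node]
        acc = sums.setdefault(cls, [0.0] * len(vec))
        for d, v in enumerate(vec):
            acc[d] += v
        counts[cls] = counts.get(cls, 0) + 1
    return {cls: [v / counts[cls] for v in acc] for cls, acc in sums.items()}

def mean_aggregate(features, neighbors):
    """One message passing round: average each node with its neighbors."""
    out = []
    for node, vec in enumerate(features):
        group = [vec] + [features[n] for n in neighbors[node]]
        out.append([sum(col) / len(group) for col in zip(*group)])
    return out

def pinning_step(features, neighbors, prototypes, gain=0.5):
    """Aggregate, then pull each node toward its softly matched prototype.
    ASSUMPTION: matching is a softmax over negative squared distances between
    a node's input features and the prototypes; the paper learns this instead."""
    aggregated = mean_aggregate(features, neighbors)
    classes = sorted(prototypes)
    controlled = []
    for own, agg in zip(features, aggregated):
        scores = [-sum((a - b) ** 2 for a, b in zip(own, prototypes[c]))
                  for c in classes]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        target = [sum(w / total * prototypes[c][d] for w, c in zip(weights, classes))
                  for d in range(len(agg))]
        # Feedback correction: shrink the gap between feature and target.
        controlled.append([x + gain * (t - x) for x, t in zip(agg, target)])
    return controlled
```

On a heterophilous path graph where neighboring nodes alternate between two classes, plain mean aggregation moves a node's feature toward the opposite class, while the correction term moves it back toward its matched prototype. Setting `gain=0.0` recovers uncontrolled message passing.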

Building Shortcuts between Distant Nodes with Biaffine Mapping for Graph Convolutional Networks

Feb 20, 2023

Abstract: Multiple recent studies show a paradox in graph convolutional networks (GCNs): shallow architectures limit the capability of learning information from high-order neighbors, while deep architectures suffer from over-smoothing or over-squashing. To enjoy the simplicity of shallow architectures and overcome their limited neighborhood extension, in this work we introduce a biaffine technique to improve the expressiveness of graph convolutional networks with a shallow architecture. The core design of our method is to learn direct dependencies between nodes and their long-distance neighbors, so that one-hop message passing alone is capable of capturing rich information for node representation. In addition, we propose a multi-view contrastive learning method to exploit the representations learned from long-distance dependencies. Extensive experiments on nine graph benchmark datasets suggest that the shallow biaffine graph convolutional network (BAGCN) significantly outperforms state-of-the-art GCNs (with deep or shallow architectures) on semi-supervised node classification. We further verify the effectiveness of the biaffine design in node representation learning and the consistency of performance across different sizes of training data.
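As a rough illustration of the shortcut idea in this abstract, the sketch below scores node pairs with a generic biaffine form, s(i, j) = x_i^T U x_j + w^T [x_i; x_j] + b, and links each node to its highest-scoring non-neighbors so that a single hop reaches them. It is not the BAGCN implementation: the function names, the top-k selection rule, and the hand-set parameters `U`, `w`, and `b` are assumptions for illustration, and in practice such parameters would be learned.

```python
def biaffine_score(x_i, x_j, U, w, b):
    """Generic biaffine score: x_i^T U x_j + w^T [x_i; x_j] + b."""
    bilinear = sum(x_i[p] * U[p][q] * x_j[q]
                   for p in range(len(x_i)) for q in range(len(x_j)))
    linear = sum(wk * v for wk, v in zip(w, x_i + x_j))
    return bilinear + linear + b

def add_shortcuts(features, neighbors, U, w, b, k=1):
    """Return adjacency lists extended with the top-k scoring non-neighbors.
    ASSUMPTION: top-k selection is a stand-in for however the paper turns
    biaffine scores into long-distance dependencies."""
    augmented = []
    for i, x_i in enumerate(features):
        existing = set(neighbors[i]) | {i}
        candidates = [j for j in range(len(features)) if j not in existing]
        candidates.sort(
            key=lambda j: biaffine_score(x_i, features[j], U, w, b),
            reverse=True,
        )
        augmented.append(list(neighbors[i]) + candidates[:k])
    return augmented
```

With `U` set to the identity and `w`, `b` set to zero, the score reduces to a dot product, so each node on a path graph with alternating features gains a shortcut to the most similar node it was not already connected to.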
