Abe Kazemzadeh

Summer

Emotion Twenty Questions Dialog System for Lexical Emotional Intelligence

Oct 05, 2022

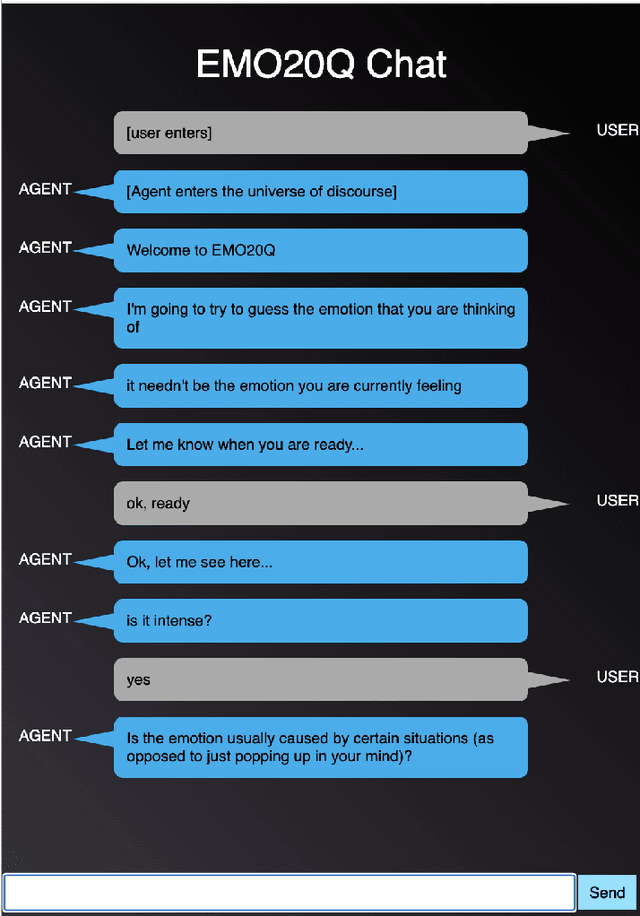

Abstract: This paper presents a web-based demonstration of Emotion Twenty Questions (EMO20Q), a dialog game whose purpose is to study how people describe emotions. EMO20Q can also be used to develop artificially intelligent dialog agents that can play the game. In previous work, an EMO20Q agent used a sequential Bayesian machine learning model and could play the question-asking role. Newer transformer-based neural machine learning models have made it possible to develop an agent for the question-answering role. This demo paper describes recent developments in the question-answering role of the EMO20Q game, which requires the agent to respond to more open-ended inputs. We also describe the design of the system, including the web-based front-end, the agent architecture and programming, and updates to the earlier software. The demo system will be available to collect pilot data during the ACII conference, and these data will be used to inform future experiments and system design.

BERT-Assisted Semantic Annotation Correction for Emotion-Related Questions

Apr 02, 2022

Abstract: Annotated data have traditionally been used to provide the input for training a supervised machine learning (ML) model. However, current pre-trained ML models for natural language processing (NLP) contain embedded linguistic information that can be used to inform the annotation process. We use the BERT neural language model to feed information back into an annotation task that involves semantic labelling of dialog behavior in a question-asking game called Emotion Twenty Questions (EMO20Q). First, we describe the background of BERT, the EMO20Q data, and assisted annotation tasks. Then we describe the methods for fine-tuning BERT to check the annotated labels. To do this, we use the paraphrase task to check that all utterances with the same annotation label are classified as paraphrases of each other. We show this method to be an effective way to assess and revise annotations of textual user data with complex, utterance-level semantic labels.
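The consistency check described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the paraphrase classifier here is a simple token-overlap stand-in (an assumption) where the paper uses a fine-tuned BERT model, and the function names, label strings, and example utterances are all hypothetical. The idea shown is only the pairing logic: group utterances by annotation label, test every same-label pair, and flag pairs the classifier does not accept as paraphrases.

```python
from collections import defaultdict
from itertools import combinations
from typing import Callable, Dict, List, Tuple


def jaccard_paraphrase(a: str, b: str, threshold: float = 0.5) -> bool:
    """Stand-in paraphrase classifier (assumption, not BERT).

    Returns True when the token-set Jaccard overlap reaches the threshold.
    A fine-tuned paraphrase model would replace this function.
    """
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a or not tokens_b:
        return False
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b) >= threshold


def flag_inconsistent_pairs(
    annotated: List[Tuple[str, str]],
    is_paraphrase: Callable[[str, str], bool] = jaccard_paraphrase,
) -> Dict[str, List[Tuple[str, str]]]:
    """Return, per label, the same-label pairs not judged to be paraphrases."""
    by_label: Dict[str, List[str]] = defaultdict(list)
    for utterance, label in annotated:
        by_label[label].append(utterance)

    flagged: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for label, utterances in by_label.items():
        for a, b in combinations(utterances, 2):
            if not is_paraphrase(a, b):
                flagged[label].append((a, b))
    return dict(flagged)


# Hypothetical annotated utterances; the third one is deliberately mislabeled.
examples = [
    ("is it a positive emotion", "valence:positive"),
    ("is it a positive feeling", "valence:positive"),
    ("do you feel it when you are alone", "valence:positive"),
    ("is it like anger", "similar:anger"),
]

flagged = flag_inconsistent_pairs(examples)
for label, pairs in flagged.items():
    for a, b in pairs:
        print(f"[{label}] review: {a!r} vs {b!r}")
```

An utterance that appears in many flagged pairs for its label is a natural candidate for re-annotation. Passing the classifier in as a parameter keeps the pairing logic independent of whichever model makes the paraphrase judgment.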
