Aaron Hein

An Investigation in Optimal Encoding of Protein Primary Sequence for Structure Prediction by Artificial Neural Networks

Aug 02, 2020

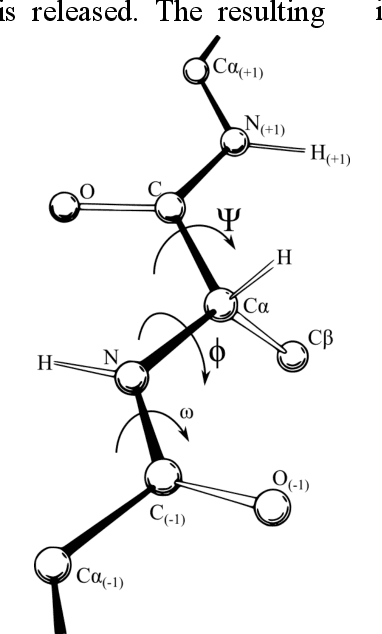

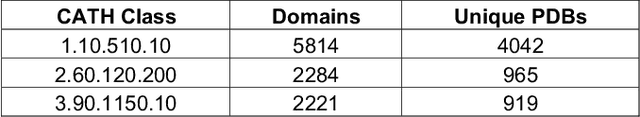

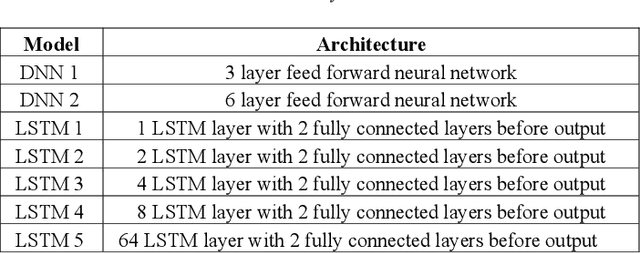

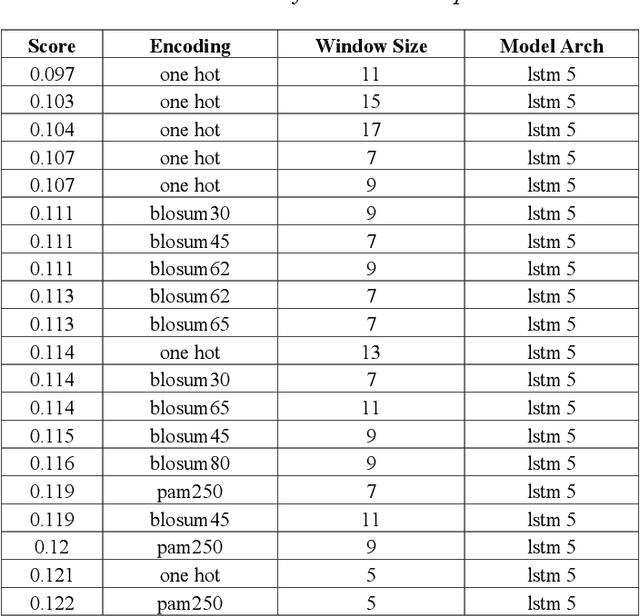

Abstract: The use of machine learning and neural networks has increased precipitously over the past few years, primarily due to the ever-increasing accessibility of data and the growth of computational power. It has become increasingly easy to harness the power of machine learning for predictive tasks. Protein structure prediction is one area where neural networks are becoming increasingly popular and successful. Although very powerful, artificial neural networks (ANNs) require selection of the most appropriate input/output encoding, architecture, and class to produce optimal results. In this investigation, we explored and evaluated the effect of several conventional and newly proposed input encodings and selected an optimal architecture. We considered 11 variations of input encoding, 11 alternative window sizes, and 7 different architectures. In total, we evaluated 2,541 permutations in application to the training and testing of more than 10,000 protein structures over the course of 3 months. Our investigations concluded that one-hot encoding, the use of LSTMs, and window sizes of 9, 11, and 15 produce the optimal outcome. Through this optimization, we were able to improve the quality of protein structure prediction, predicting the φ dihedrals to within 14° to 16° and the ψ dihedrals to within 23° to 25°. This is a notable improvement over previous similar investigations.
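To make the input encoding concrete, the following is a minimal sketch of one-hot encoding applied over a sliding window of residues. It is an illustration only, not the authors' implementation: the function names (`one_hot`, `encode_windows`), the 20-letter alphabet ordering, and the choice to represent padding and unknown residues as all-zero vectors are assumptions made for this example.

```python
# Hypothetical sketch: windowed one-hot encoding of a protein primary sequence.
# Alphabet order and zero-vector padding are assumptions, not taken from the paper.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard residues
INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def one_hot(residue):
    """Return a 20-element one-hot vector; all zeros for padding or unknown residues."""
    vec = [0] * len(AMINO_ACIDS)
    if residue in INDEX:
        vec[INDEX[residue]] = 1
    return vec


def encode_windows(sequence, window=9):
    """Return one flattened one-hot window per residue, centred on that residue."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    # Pad both ends so that terminal residues also sit at the window centre.
    padded = "-" * half + sequence + "-" * half
    features = []
    for i in range(len(sequence)):
        flat = []
        for residue in padded[i:i + window]:
            flat.extend(one_hot(residue))
        features.append(flat)
    return features


features = encode_windows("MKV", window=9)
```

Each residue yields a vector of length `window * 20`, which could then serve as one input step (for example, to an LSTM) with the φ and ψ dihedrals of the centre residue as the regression targets.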
