Éloi Brassard-Gourdeau

Using Sentiment Information for Preemptive Detection of Toxic Comments in Online Conversations

Jun 17, 2020

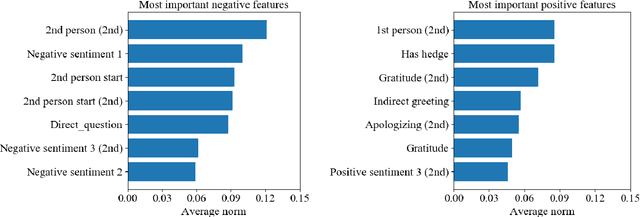

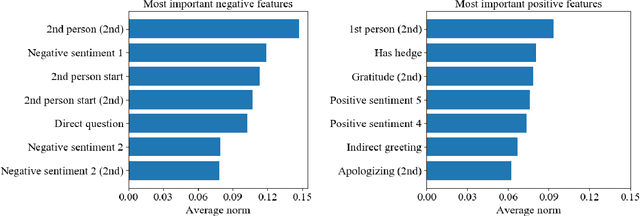

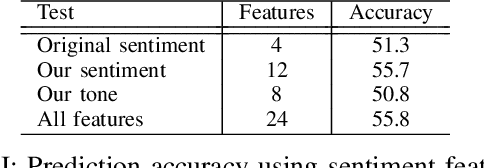

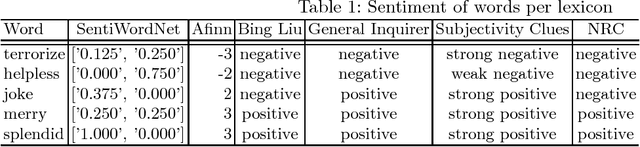

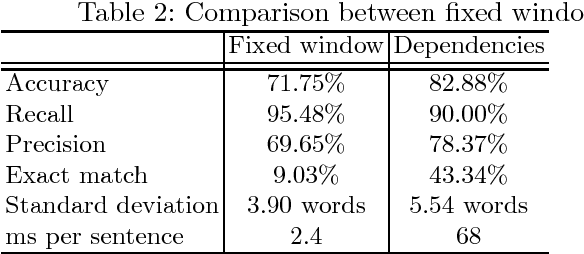

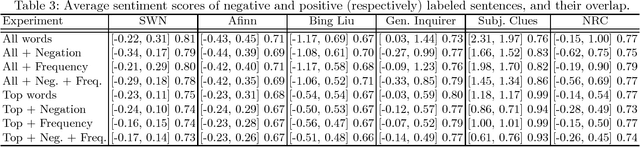

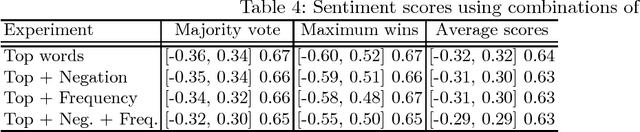

Abstract: Automatic detection of toxic comments online has been the subject of much recent research, but the focus has mostly been on detecting toxicity in individual messages after they have been posted. Some authors have tried to predict whether a conversation will derail into toxicity using features of its first few messages. In this paper, we combine that approach with previous work on toxicity detection using sentiment information, and show how the sentiments expressed in the first messages of a conversation can help predict upcoming toxicity. Our results show that adding sentiment features improves the accuracy of toxicity prediction, and they also allow us to make important observations on the general task of preemptive toxicity detection.

Impact of Sentiment Detection to Recognize Toxic and Subversive Online Comments

Dec 04, 2018

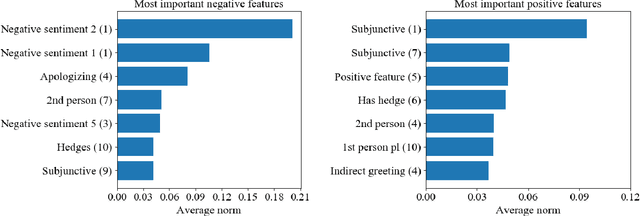

Abstract: The presence of toxic content has become a major problem for many online communities. Moderators try to limit this problem by implementing increasingly refined comment filters, but toxic users constantly find new ways to circumvent them. Our hypothesis is that while modifying toxic content and keywords to fool filters can be easy, hiding sentiment is harder. In this paper, we explore various aspects of sentiment detection and their correlation with toxicity, and use our results to implement a toxicity detection tool. We then test how adding sentiment information helps detect toxicity in three different real-world datasets, and incorporate subversion into these datasets to simulate a user trying to circumvent the system. Our results show that sentiment information has a positive impact on toxicity detection against a subversive user.
