Ádám Fodor

Enhancing Apparent Personality Trait Analysis with Cross-Modal Embeddings

May 06, 2024

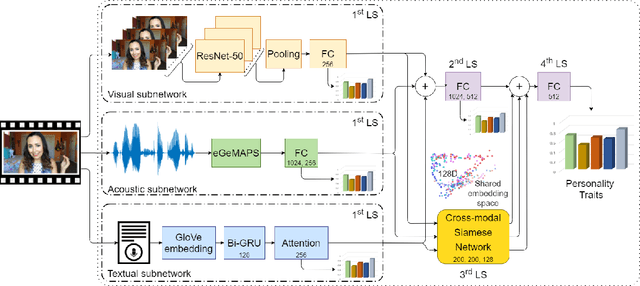

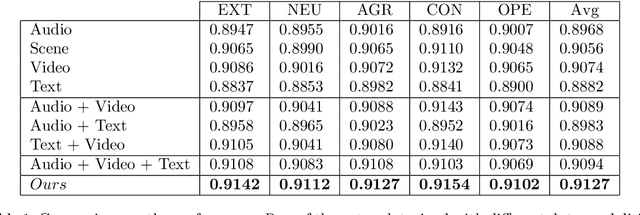

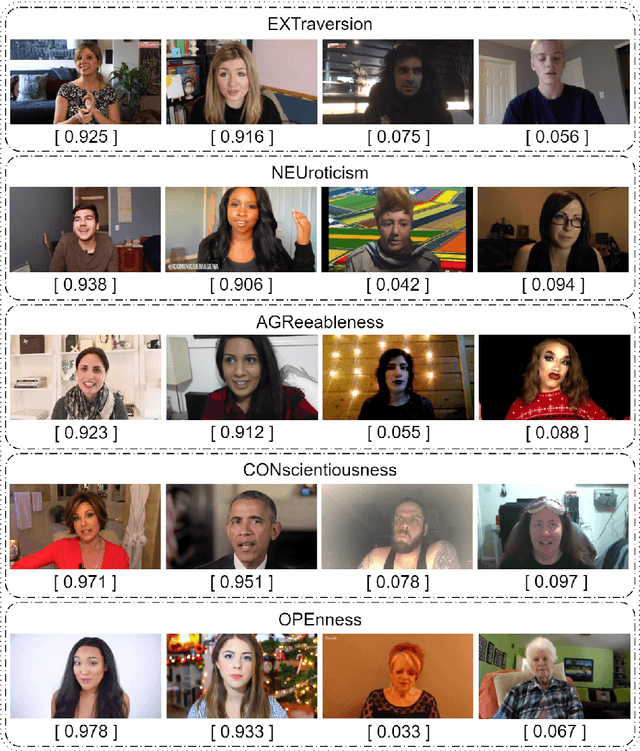

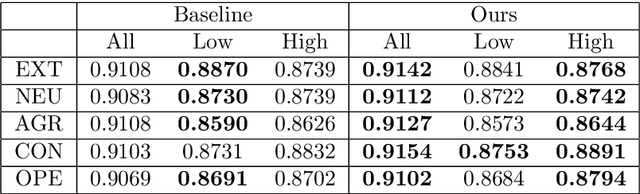

Abstract:Automatic personality trait assessment is essential for high-quality human-machine interactions. Systems capable of human behavior analysis could be used for self-driving cars, medical research, and surveillance, among many others. We present a multimodal deep neural network with a Siamese extension for apparent personality trait prediction trained on short video recordings and exploiting modality invariant embeddings. Acoustic, visual, and textual information are utilized to reach high-performance solutions in this task. Due to the highly centralized target distribution of the analyzed dataset, the changes in the third digit are relevant. Our proposed method addresses the challenge of under-represented extreme values, achieves 0.0033 MAE average improvement, and shows a clear advantage over the baseline multimodal DNN without the introduced module.

* 14 pages, 4 figures

Perceived personality state estimation in dyadic and small group interaction with deep learning methods

Nov 09, 2022Abstract:Dyadic and small group collaboration is an evolutionary advantageous behaviour and the need for such collaboration is a regular occurrence in day to day life. In this paper we estimate the perceived personality traits of individuals in dyadic and small groups over thin-slices of interaction on four multimodal datasets. We find that our transformer based predictive model performs similarly to human annotators tasked with predicting the perceived big-five personality traits of participants. Using this model we analyse the estimated perceived personality traits of individuals performing tasks in small groups and dyads. Permutation analysis shows that in the case of small groups undergoing collaborative tasks, the perceived personality of group members clusters, this is also observed for dyads in a collaborative problem solving task, but not in dyads under non-collaborative task settings. Additionally, we find that the group level average perceived personality traits provide a better predictor of group performance than the group level average self-reported personality traits.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge