Words as Art Materials: Generating Paintings with Sequential GANs

Paper and Code

Jul 08, 2020

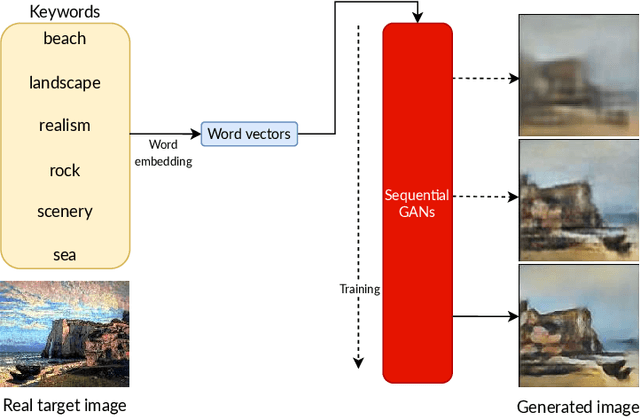

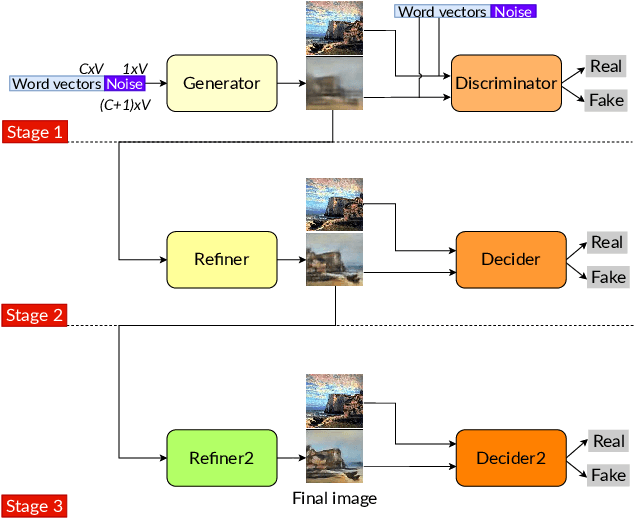

Generating images from text descriptions with Generative Adversarial Networks (GANs) has become a popular research area, and recent work has produced visually appealing results. Inspired by these studies, we investigated the generation of artistic images on a high-variance dataset, one whose images vary in, for example, shape, color, and content. These variations provide originality, which is an important factor for artistic essence. One major characteristic of our work is that we used keywords, rather than sentences, as image descriptions. As the network architecture, we proposed a sequential GAN model. The first stage of this model processes the word vectors and creates a base image, whereas the subsequent stages focus on creating high-resolution, artistic-style images without working on word vectors. To deal with the unstable nature of GAN training, we proposed a mixture of techniques such as Wasserstein loss, spectral normalization, and minibatch discrimination. Ultimately, we were able to generate painting images in a variety of styles. We evaluated our results using the Fréchet Inception Distance score and conducted a user study with 186 participants.
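Of the stabilization techniques named in the abstract, the Wasserstein loss is the simplest to illustrate. The following is a minimal sketch of the standard Wasserstein GAN objectives, not the paper's implementation: the function names `critic_loss` and `generator_loss` and the sample scores are hypothetical, and the critic is assumed to output one unbounded real-valued score per image.

```python
from statistics import fmean


def critic_loss(real_scores, fake_scores):
    """Standard Wasserstein critic loss (hypothetical helper name).

    Minimizing this value pushes the critic's scores for real images up
    and its scores for generated images down.
    """
    return fmean(fake_scores) - fmean(real_scores)


def generator_loss(fake_scores):
    """Standard Wasserstein generator loss (hypothetical helper name).

    Minimizing this value pushes the critic's scores for generated
    images up.
    """
    return -fmean(fake_scores)


if __name__ == "__main__":
    # Made-up critic outputs for one minibatch, for illustration only.
    real_scores = [0.9, 1.1, 1.0]
    fake_scores = [-0.5, -0.3, -0.4]
    print("critic loss:", critic_loss(real_scores, fake_scores))
    print("generator loss:", generator_loss(fake_scores))
```

In a real training loop these scores would come from a critic network, and the Wasserstein formulation additionally requires the critic to be approximately 1-Lipschitz. Spectral normalization, also named in the abstract, is one common way to encourage that constraint.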
