Word Embeddings and Their Use In Sentence Classification Tasks

Paper and Code

Oct 26, 2016

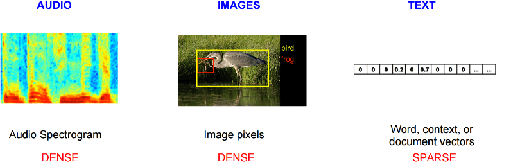

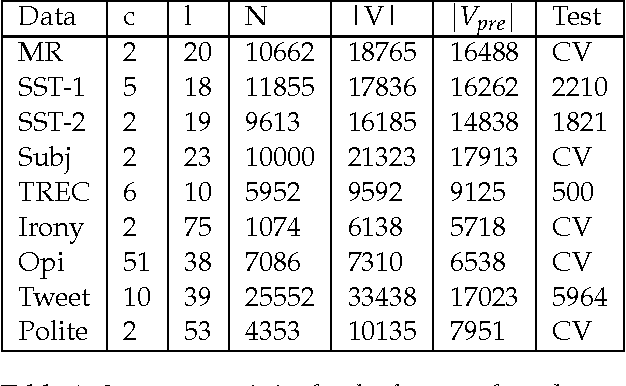

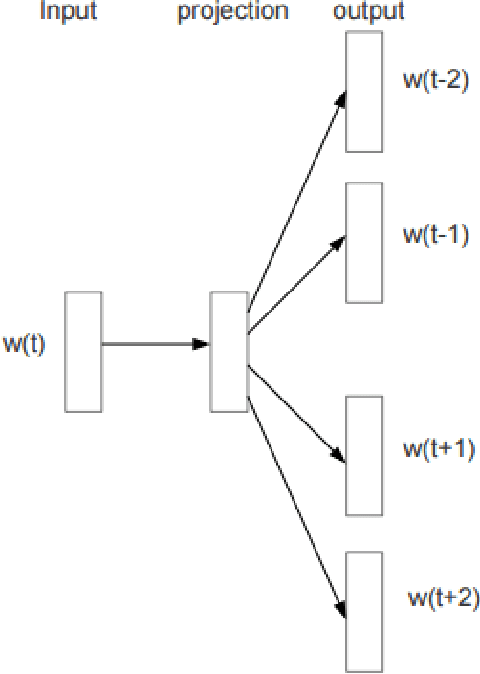

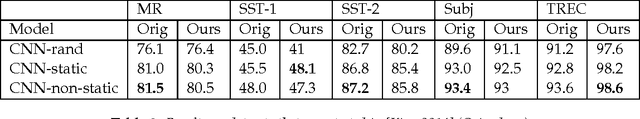

This paper have two parts. In the first part we discuss word embeddings. We discuss the need for them, some of the methods to create them, and some of their interesting properties. We also compare them to image embeddings and see how word embedding and image embedding can be combined to perform different tasks. In the second part we implement a convolutional neural network trained on top of pre-trained word vectors. The network is used for several sentence-level classification tasks, and achieves state-of-art (or comparable) results, demonstrating the great power of pre-trainted word embeddings over random ones.

* 13 pages, 10 figures

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge