Whither the Priors for (Vocal) Interactivity?

Mar 16, 2022

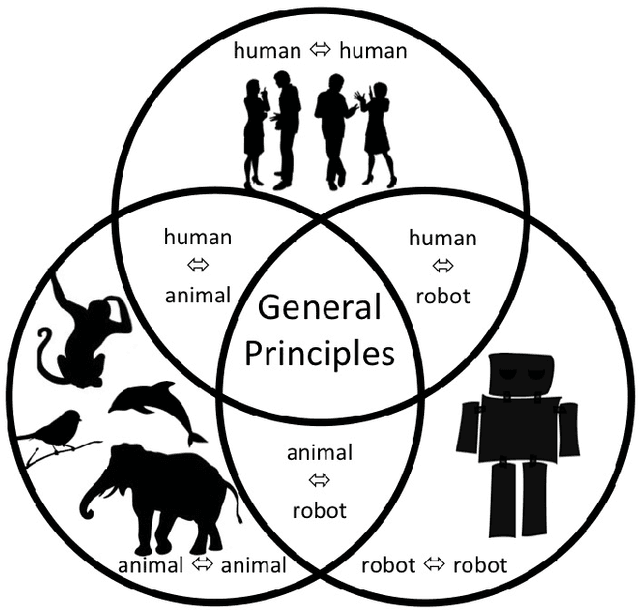

Voice-based communication is often cited as one of the most 'natural' ways in which humans and robots might interact, and the recent availability of accurate automatic speech recognition and intelligible speech synthesis has enabled researchers to integrate advanced off-the-shelf spoken language technology components into their robot platforms. Despite this, the resulting interactions are anything but 'natural'. It transpires that simply giving a robot a voice doesn't mean that a user will know how (or when) to talk to it, and the resulting 'conversations' tend to be stilted, one-sided and short. On the surface, these difficulties might appear to be fairly trivial consequences of users' unfamiliarity with robots (and *vice versa*), and it might seem that any problems would be mitigated by long-term use by the human, coupled with 'deep learning' by the robot. However, it is argued here that such communication failures are indicative of a deeper malaise: a fundamental lack of basic principles (*priors*) underpinning not only speech-based interaction in particular, but (vocal) interactivity in general. This is evidenced not only by the fact that contemporary spoken language systems already require training data sets that are orders of magnitude larger than what a young child experiences, but also by the lack of design principles for creating effective communicative human-robot interaction. This short position paper identifies some of the key areas where theoretical insights might help overcome these shortfalls.
