When does Diversity Help Generalization in Classification Ensembles?


Oct 30, 2019

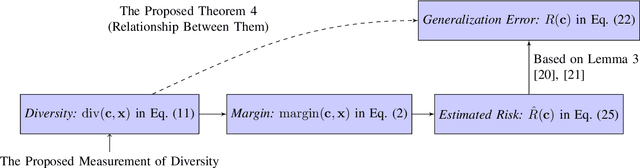

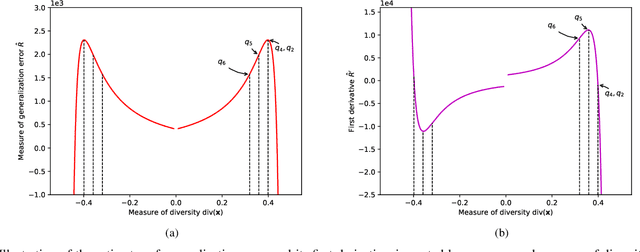

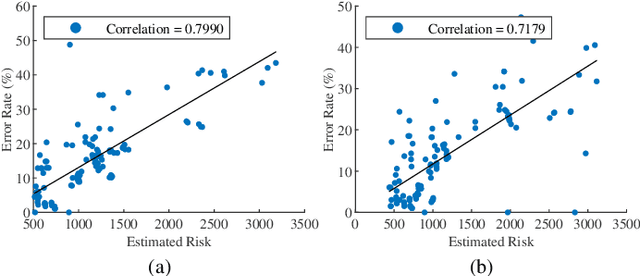

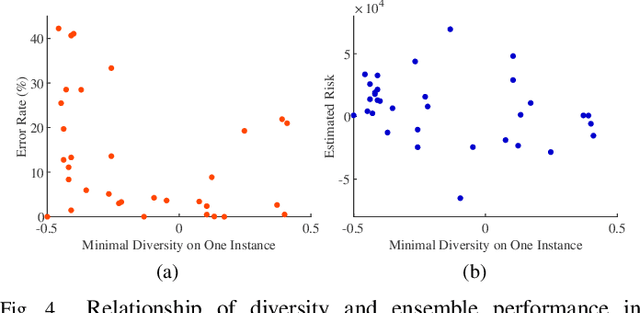

Ensembles are a widely used and effective technique in machine learning, and their success depends on a key element: diversity. The relationship between diversity and generalization, however, is not fully understood and remains an open research question. To reveal the effect of diversity on the generalization of classification ensembles, we investigate three issues: how to measure diversity, how the proposed diversity measure relates to generalization error, and how to use this relationship for ensemble pruning. We measure diversity through an error decomposition inspired by regression ensembles, which decomposes the error of a classification ensemble into accuracy and diversity terms. We then formulate the relationship between the measured diversity and ensemble performance through the theorem of margin and generalization, and observe that the generalization error is reduced effectively only when the measured diversity increases within a few specific ranges; in other ranges, larger diversity is less beneficial to the generalization of an ensemble. In addition, we propose a pruning method based on diversity management that exploits this relationship: it can increase diversity appropriately and shrink the ensemble without decreasing its performance. Experiments validate the effectiveness of the proposed relationship between the measured diversity and the ensemble generalization error.
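For readers unfamiliar with the regression result that the abstract cites as inspiration, the sketch below illustrates the classical ambiguity decomposition for weighted-average regression ensembles: the squared error of the ensemble equals the weighted average error of its members minus the weighted average spread of the members around the ensemble prediction. This is not the classification decomposition proposed in the paper, which the abstract does not spell out. The function name `ambiguity_decomposition` and its arguments are hypothetical, chosen for illustration only, and the weights are assumed to be non-negative and to sum to one.

```python
def ambiguity_decomposition(preds, y, weights=None):
    """Return (ensemble_error, avg_member_error, avg_ambiguity).

    preds:   list of per-member prediction lists, shape (members, samples)
    y:       list of true regression targets
    weights: convex combination weights (assumed to sum to 1);
             defaults to uniform averaging
    """
    m, n = len(preds), len(y)
    w = [1.0 / m] * m if weights is None else list(weights)

    # Ensemble prediction: weighted average of member predictions.
    ens = [sum(w[i] * preds[i][j] for i in range(m)) for j in range(n)]

    # Mean squared error of the ensemble itself.
    ens_err = sum((ens[j] - y[j]) ** 2 for j in range(n)) / n

    # Weighted average of the members' own squared errors ("accuracy" term).
    avg_err = sum(
        w[i] * (preds[i][j] - y[j]) ** 2 for i in range(m) for j in range(n)
    ) / n

    # Weighted average spread of members around the ensemble ("diversity" term).
    avg_amb = sum(
        w[i] * (preds[i][j] - ens[j]) ** 2 for i in range(m) for j in range(n)
    ) / n

    return ens_err, avg_err, avg_amb


if __name__ == "__main__":
    # Toy data, made up for illustration.
    targets = [1.0, 2.0, 3.0]
    members = [[1.5, 2.0, 2.0], [0.5, 2.5, 3.5], [1.0, 1.0, 3.0]]
    e, a, d = ambiguity_decomposition(members, targets)
    # The identity e == a - d holds up to floating-point rounding.
    print(e, a - d)
```

Because the ambiguity term is never negative, the ensemble error in this regression setting can never exceed the average member error. The abstract's finding is that the classification case is less clear-cut, with diversity helping only in certain ranges.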
